Auto DevOps

Introduced in GitLab 10.0. Generally available on GitLab 11.0.

Auto DevOps provides pre-defined CI/CD configuration which allows you to automatically detect, build, test, deploy, and monitor your applications. Leveraging CI/CD best practices and tools, Auto DevOps aims to simplify the setup and execution of a mature & modern software development lifecycle.

Overview

NOTE: Enabled by default: Starting with GitLab 11.3, the Auto DevOps pipeline is enabled by default for all projects. If it has not been explicitly enabled for the project, Auto DevOps will be automatically disabled on the first pipeline failure. Your project will continue to use an alternative CI/CD configuration file if one is found. A GitLab administrator can change this setting in the admin area.

With Auto DevOps, the software development process becomes easier to set up as every project can have a complete workflow from verification to monitoring with minimal configuration. Just push your code and GitLab takes care of everything else. This makes it easier to start new projects and brings consistency to how applications are set up throughout a company.

Quick start

If you are using GitLab.com, see the quick start guide for how to use Auto DevOps with GitLab.com and a Kubernetes cluster on Google Kubernetes Engine (GKE).

If you are using a self-hosted instance of GitLab, you will need to configure the Google OAuth2 OmniAuth Provider before you can configure a cluster on GKE. Once this is set up, you can follow the steps on the quick start guide to get started.

Comparison to application platforms and PaaS

Auto DevOps provides functionality that is often included in an application platform or a Platform as a Service (PaaS). It takes inspiration from the innovative work done by Heroku and goes beyond it in multiple ways:

- Auto DevOps works with any Kubernetes cluster; you're not limited to running on GitLab's infrastructure. (Note that many features also work without Kubernetes.)

- There is no additional cost (no markup on the infrastructure costs), and you can use a self-hosted Kubernetes cluster or Containers as a Service on any public cloud (for example, Google Kubernetes Engine).

- Auto DevOps has more features including security testing, performance testing, and code quality testing.

- Auto DevOps offers an incremental graduation path. If you need advanced customizations, you can start modifying the templates without having to start over on a completely different platform. Review the customizing section for more information.

Features

Comprised of a set of stages, Auto DevOps brings these best practices to your project in a simple and automatic way:

- Auto Build

- Auto Test

- Auto Code Quality [STARTER]

- Auto SAST (Static Application Security Testing) [ULTIMATE]

- Auto Dependency Scanning [ULTIMATE]

- Auto License Management [ULTIMATE]

- Auto Container Scanning

- Auto Review Apps

- Auto DAST (Dynamic Application Security Testing) [ULTIMATE]

- Auto Deploy

- Auto Browser Performance Testing [PREMIUM]

- Auto Monitoring

As Auto DevOps relies on many different components, it's good to have a basic knowledge of the tools it builds on, such as Kubernetes, Helm, Docker, GitLab Runner, and Prometheus.

Auto DevOps provides great defaults for all the stages; you can, however, customize almost everything to your needs.

For an overview on the creation of Auto DevOps, read the blog post From 2/3 of the Self-Hosted Git Market, to the Next-Generation CI System, to Auto DevOps.

Requirements

To make full use of Auto DevOps, you will need:

- GitLab Runner (needed for all stages) - Your Runner needs to be configured to be able to run Docker. Generally this means using the Docker or Kubernetes executor, with privileged mode enabled. The Runners do not need to be installed in the Kubernetes cluster, but the Kubernetes executor is easy to use and is automatically autoscaling. Docker-based Runners can be configured to autoscale as well, using Docker Machine. Runners should be registered as shared Runners for the entire GitLab instance, or specific Runners that are assigned to specific projects.

- Base domain (needed for Auto Review Apps and Auto Deploy) - You will need a domain configured with wildcard DNS which is going to be used by all of your Auto DevOps applications. Read the specifics.

- Kubernetes (needed for Auto Review Apps, Auto Deploy, and Auto Monitoring) - To enable deployments, you will need Kubernetes 1.5+. You need a Kubernetes cluster for the project, or a Kubernetes default service template for the entire GitLab installation.

  - A load balancer - You can use NGINX ingress by deploying it to your Kubernetes cluster using the nginx-ingress Helm chart.

- Prometheus (needed for Auto Monitoring) - To enable Auto Monitoring, you will need Prometheus installed somewhere (inside or outside your cluster) and configured to scrape your Kubernetes cluster. To get response metrics (in addition to system metrics), you need to configure Prometheus to monitor NGINX. The Prometheus service integration needs to be enabled for the project, or enabled as a default service template for the entire GitLab installation.

NOTE: Note: If you do not have Kubernetes or Prometheus installed, then Auto Review Apps, Auto Deploy, and Auto Monitoring will be silently skipped.

Auto DevOps base domain

NOTE: Note: The AUTO_DEVOPS_DOMAIN environment variable is deprecated and is scheduled to be removed.

The Auto DevOps base domain is required if you want to make use of Auto Review Apps and Auto Deploy. It can be defined in any of the following places:

- either under the cluster's settings, whether for projects or groups

- or in instance-wide settings in the admin area > Settings under the "Continuous Integration and Delivery" section

- or at the project level as a variable: KUBE_INGRESS_BASE_DOMAIN

- or at the group level as a variable: KUBE_INGRESS_BASE_DOMAIN.

NOTE: Note: The Auto DevOps base domain variable (KUBE_INGRESS_BASE_DOMAIN) follows the same order of precedence as other environment variables.

A wildcard DNS A record matching the base domain(s) is required. For example, given a base domain of example.com, you'd need a DNS entry like:

*.example.com 3600 A 1.2.3.4

In this case, example.com is the domain name under which the deployed apps will be served, and 1.2.3.4 is the IP address of your load balancer; generally NGINX (see requirements). How to set up the DNS record is beyond the scope of this document; you should check with your DNS provider.

Alternatively you can use free public services like nip.io which provide automatic wildcard DNS without any configuration. Just set the Auto DevOps base domain to 1.2.3.4.nip.io.
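As a minimal sketch of the variable-based option, the base domain could also be set in a .gitlab-ci.yml that includes the Auto DevOps template. This assumes a GitLab version that supports including templates by name, and 1.2.3.4 is the placeholder load balancer IP used in the example above, not a real address. Setting the same variable in the project or group CI/CD settings should have the same effect and does not require a .gitlab-ci.yml.

```yaml
# Hypothetical .gitlab-ci.yml: pull in the Auto DevOps template and set the base domain.
include:
  - template: Auto-DevOps.gitlab-ci.yml

variables:
  # Replace 1.2.3.4 with the IP address of your own load balancer.
  KUBE_INGRESS_BASE_DOMAIN: 1.2.3.4.nip.io
```

Because the variable follows the usual precedence rules, a value defined in the project or group settings would be expected to override the one in the file.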

Once set up, all requests will hit the load balancer, which in turn will route them to the Kubernetes pods that run your application(s).

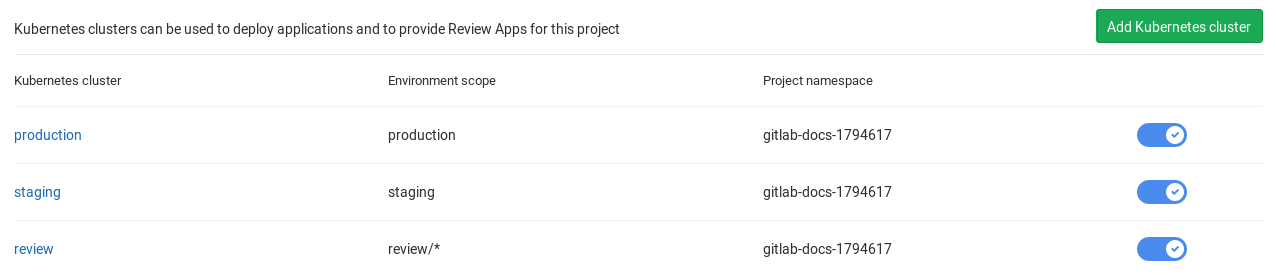

Using multiple Kubernetes clusters [PREMIUM]

When using Auto DevOps, you may want to deploy different environments to different Kubernetes clusters. This is possible due to the 1:1 connection that exists between them.

In the Auto DevOps template (used behind the scenes by Auto DevOps), there are currently 3 defined environment names that you need to know:

- review/ (every environment starting with review/)

- staging

- production

Those environments are tied to jobs that use Auto Deploy, so in addition to the environment scope, they also need a different domain to be deployed to. This is why you need to define a separate KUBE_INGRESS_BASE_DOMAIN variable for each of the above, based on the environment.

The following table is an example of how the three different clusters would be configured.

| Cluster name | Cluster environment scope | KUBE_INGRESS_BASE_DOMAIN variable value | Variable environment scope | Notes |
|---|---|---|---|---|
| review | review/* | review.example.com | review/* | The review cluster which will run all Review Apps. * is a wildcard, which means it will be used by every environment name starting with review/. |
| staging | staging | staging.example.com | staging | (Optional) The staging cluster which will run the deployments of the staging environments. You need to enable it first. |
| production | production | example.com | production | The production cluster which will run the deployments of the production environment. You can use incremental rollouts. |

To add a different cluster for each environment:

- Navigate to your project's Operations > Kubernetes and create the Kubernetes clusters with their respective environment scope as described in the table above.

- After the clusters are created, navigate to each one and install Helm Tiller and Ingress. Wait for the Ingress IP address to be assigned.

- Make sure you have configured your DNS with the specified Auto DevOps domains.

- Navigate to each cluster's page, through Operations > Kubernetes, and add the domain based on its Ingress IP address.

Now that all is configured, you can test your setup by creating a merge request and verifying that your app is deployed as a review app in the Kubernetes cluster with the review/* environment scope. Similarly, you can check the other environments.

NOTE: Note: From GitLab 11.8, KUBE_INGRESS_BASE_DOMAIN replaces AUTO_DEVOPS_DOMAIN. AUTO_DEVOPS_DOMAIN is scheduled to be removed.

Enabling/Disabling Auto DevOps

When first using Auto DevOps, review the requirements to ensure all necessary components to make full use of Auto DevOps are available. If this is your first time, we recommend you follow the quick start guide.

GitLab.com users can enable/disable Auto DevOps at the project-level only. Self-managed users can enable/disable Auto DevOps at either the project-level or instance-level.

Enabling/disabling Auto DevOps at the instance-level (Administrators only)

- Go to Admin area > Settings > Continuous Integration and Deployment.

- Toggle the checkbox labeled Default to Auto DevOps pipeline for all projects.

- If enabling, optionally set up the Auto DevOps base domain which will be used for Auto Deploy and Auto Review Apps.

- Click Save changes for the changes to take effect.

NOTE: Note: Even when disabled at the instance level, group owners and project maintainers are still able to enable Auto DevOps at group-level and project-level, respectively.

Enabling/disabling Auto DevOps at the group-level

Introduced in GitLab 11.10.

To enable or disable Auto DevOps at the group-level:

- Go to your group's Settings > CI/CD > Auto DevOps page.

- Toggle the Default to Auto DevOps pipeline checkbox (checked to enable, unchecked to disable).

- Click the Save changes button for the changes to take effect.

When enabling or disabling Auto DevOps at group-level, group configuration will be implicitly used for the subgroups and projects inside that group, unless Auto DevOps is specifically enabled or disabled on the subgroup or project.

NOTE: Note: Only administrators and group owners are allowed to enable or disable Auto DevOps at group-level.

Enabling/disabling Auto DevOps at the project-level

If enabling, check that your project doesn't have a .gitlab-ci.yml, or if one exists, remove it.

- Go to your project's Settings > CI/CD > Auto DevOps.

- Toggle the Default to Auto DevOps pipeline checkbox (checked to enable, unchecked to disable).

- When enabling, it's optional but recommended to add in the base domain that will be used by Auto DevOps to deploy your application and choose the deployment strategy.

- Click Save changes for the changes to take effect.

When the feature has been enabled, an Auto DevOps pipeline is triggered on the default branch.

NOTE: Note: For GitLab versions 10.0 - 10.2, when enabling Auto DevOps, a pipeline needs to be manually triggered either by pushing a new commit to the repository or by visiting https://example.gitlab.com/<username>/<project>/pipelines/new and creating a new pipeline for your default branch, generally master.

NOTE: Note: There is also a feature flag to enable Auto DevOps for a percentage of projects, which can be enabled from the console with Feature.get(:force_autodevops_on_by_default).enable_percentage_of_actors(10).

Deployment strategy

Introduced in GitLab 11.0.

You can change the deployment strategy used by Auto DevOps by going to your project's Settings > CI/CD > Auto DevOps.

The available options are:

- Continuous deployment to production: Enables Auto Deploy with master branch directly deployed to production.

- Continuous deployment to production using timed incremental rollout: Sets the INCREMENTAL_ROLLOUT_MODE variable to timed, and production deployment will be executed with a 5 minute delay between each increment in rollout.

- Automatic deployment to staging, manual deployment to production: Sets the STAGING_ENABLED and INCREMENTAL_ROLLOUT_MODE variables to 1 and manual. This means:

  - master branch is directly deployed to staging.

  - Manual actions are provided for incremental rollout to production.
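The third strategy can be sketched as plain CI/CD variables. The fragment below assumes a project that includes the Auto DevOps template from its own .gitlab-ci.yml; choosing the strategy in the project settings is described above as setting the same two variables for you.

```yaml
# Hypothetical .gitlab-ci.yml: automatic deployment to staging, manual rollout to production.
include:
  - template: Auto-DevOps.gitlab-ci.yml

variables:
  STAGING_ENABLED: "1"              # master is deployed to staging first
  INCREMENTAL_ROLLOUT_MODE: manual  # production rollout steps become manual actions
```

For the timed strategy, INCREMENTAL_ROLLOUT_MODE would be set to timed instead, per the list above.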

Stages of Auto DevOps

The following sections describe the stages of Auto DevOps. Read them carefully to understand how each one works.

Auto Build

Auto Build creates a build of the application using an existing Dockerfile or Heroku buildpacks.

Either way, the resulting Docker image is automatically pushed to the Container Registry and tagged with the commit SHA or tag.

Auto Build using a Dockerfile

If a project's repository contains a Dockerfile, Auto Build will use docker build to create a Docker image.

If you are also using Auto Review Apps and Auto Deploy and choose to provide your own Dockerfile, make sure you expose your application on port 5000, as this is the port assumed by the default Helm chart.

Auto Build using Heroku buildpacks

Auto Build builds an application using a project's Dockerfile if present, or otherwise it will use Herokuish and Heroku buildpacks to automatically detect and build the application into a Docker image.

Each buildpack requires certain files to be in your project's repository for Auto Build to successfully build your application. For example, the following files are required at the root of your application's repository, depending on the language:

- A Pipfile or requirements.txt file for Python projects.

- A Gemfile or Gemfile.lock file for Ruby projects.

For the requirements of other languages and frameworks, read the buildpacks docs.

TIP: Tip: If Auto Build fails despite the project meeting the buildpack requirements, set a project variable TRACE=true to enable verbose logging, which may help to troubleshoot.

Auto Test

Auto Test automatically runs the appropriate tests for your application using Herokuish and Heroku buildpacks by analyzing your project to detect the language and framework. Several languages and frameworks are detected automatically, but if your language is not detected, you may succeed with a custom buildpack. Check the currently supported languages.

NOTE: Note: Auto Test uses tests you already have in your application. If there are no tests, it's up to you to add them.

Auto Code Quality [STARTER]

Auto Code Quality uses the Code Quality image to run static analysis and other code checks on the current code. Once the report is created, it's uploaded as an artifact which you can later download and check out.

Any differences between the source and target branches are also shown in the merge request widget.

Auto SAST [ULTIMATE]

Introduced in GitLab Ultimate 10.3.

Static Application Security Testing (SAST) uses the SAST Docker image to run static analysis on the current code and checks for potential security issues. Once the report is created, it's uploaded as an artifact which you can later download and check out.

Any security warnings are also shown in the merge request widget. Read more about how SAST works.

NOTE: Note: The Auto SAST stage will be skipped on licenses other than Ultimate.

NOTE: Note: The Auto SAST job requires GitLab Runner 11.5 or above.

Auto Dependency Scanning [ULTIMATE]

Introduced in GitLab Ultimate 10.7.

Dependency Scanning uses the Dependency Scanning Docker image to run analysis on the project dependencies and checks for potential security issues. Once the report is created, it's uploaded as an artifact which you can later download and check out.

Any security warnings are also shown in the merge request widget. Read more about Dependency Scanning.

NOTE: Note: The Auto Dependency Scanning stage will be skipped on licenses other than Ultimate.

NOTE: Note: The Auto Dependency Scanning job requires GitLab Runner 11.5 or above.

Auto License Management [ULTIMATE]

Introduced in GitLab Ultimate 11.0.

License Management uses the License Management Docker image to search the project dependencies for their license. Once the report is created, it's uploaded as an artifact which you can later download and check out.

Any licenses are also shown in the merge request widget. Read more about how License Management works.

NOTE: Note: The Auto License Management stage will be skipped on licenses other than Ultimate.

Auto Container Scanning

Introduced in GitLab 10.4.

Vulnerability Static Analysis for containers uses Clair to run static analysis on a Docker image and checks for potential security issues. Once the report is created, it's uploaded as an artifact which you can later download and check out.

Any security warnings are also shown in the merge request widget. Read more about how Container Scanning works.

NOTE: Note: The Auto Container Scanning stage will be skipped on licenses other than Ultimate.

Auto Review Apps

NOTE: Note: This is an optional step, since many projects do not have a Kubernetes cluster available. If the requirements are not met, the job will silently be skipped.

Review Apps are temporary application environments based on the branch's code so developers, designers, QA, product managers, and other reviewers can actually see and interact with code changes as part of the review process. Auto Review Apps create a Review App for each branch.

Auto Review Apps will deploy your app to your Kubernetes cluster only. When no cluster is available, no deployment will occur.

The Review App will have a unique URL based on the project ID, the branch or tag name, and a unique number, combined with the Auto DevOps base domain. For example, 13083-review-project-branch-123456.example.com. A link to the Review App shows up in the merge request widget for easy discovery. When the branch or tag is deleted, for example after the merge request is merged, the Review App will automatically be deleted.

Review apps are deployed using the auto-deploy-app chart with Helm. The app will be deployed into the Kubernetes namespace for the environment.

Since GitLab 11.4, a local Tiller is used. Previous versions of GitLab had a Tiller installed in the project namespace.

CAUTION: Caution: Your apps should not be manipulated outside of Helm (using Kubernetes directly). This can cause confusion with Helm not detecting the change and subsequent deploys with Auto DevOps can undo your changes. Also, if you change something and want to undo it by deploying again, Helm may not detect that anything changed in the first place, and thus not realize that it needs to re-apply the old config.

Auto DAST [ULTIMATE]

Introduced in GitLab Ultimate 10.4.

Dynamic Application Security Testing (DAST) uses the popular open source tool OWASP ZAProxy to perform an analysis on the current code and checks for potential security issues. Once the report is created, it's uploaded as an artifact which you can later download and check out.

Any security warnings are also shown in the merge request widget. Read how DAST works.

NOTE: Note: The Auto DAST stage will be skipped on licenses other than Ultimate.

Auto Browser Performance Testing [PREMIUM]

Introduced in GitLab Premium 10.4.

Auto Browser Performance Testing utilizes the Sitespeed.io container to measure the performance of a web page. A JSON report is created and uploaded as an artifact, which includes the overall performance score for each page. By default, the root page of Review and Production environments will be tested. If you would like to test additional URLs, add the paths to a file named .gitlab-urls.txt in the root directory, one per line. For example:

/

/features

/direction

Any performance differences between the source and target branches are also shown in the merge request widget.

Auto Deploy

NOTE: Note: This is an optional step, since many projects do not have a Kubernetes cluster available. If the requirements are not met, the job will silently be skipped.

After a branch or merge request is merged into the project's default branch (usually master), Auto Deploy deploys the application to a production environment in the Kubernetes cluster, with a namespace based on the project name and unique project ID, for example project-4321.

Auto Deploy doesn't include deployments to staging or canary by default, but the Auto DevOps template contains job definitions for these tasks if you want to enable them.

You can make use of environment variables to automatically scale your pod replicas.

Apps are deployed using the auto-deploy-app chart with Helm. The app will be deployed into the Kubernetes namespace for the environment.

Since GitLab 11.4, a local Tiller is used. Previous versions of GitLab had a Tiller installed in the project namespace.

CAUTION: Caution: Your apps should not be manipulated outside of Helm (using Kubernetes directly). This can cause confusion with Helm not detecting the change and subsequent deploys with Auto DevOps can undo your changes. Also, if you change something and want to undo it by deploying again, Helm may not detect that anything changed in the first place, and thus not realize that it needs to re-apply the old config.

Introduced in GitLab 11.0.

For internal and private projects, a GitLab Deploy Token will be automatically created when Auto DevOps is enabled and the Auto DevOps settings are saved. This Deploy Token can be used for permanent access to the registry.

If the GitLab Deploy Token cannot be found, CI_REGISTRY_PASSWORD is used. Note that CI_REGISTRY_PASSWORD is only valid during deployment. This means that Kubernetes will be able to successfully pull the container image during deployment, but in cases where the image needs to be pulled again, e.g. after pod eviction, Kubernetes will fail to do so as it will be attempting to fetch the image using CI_REGISTRY_PASSWORD.

NOTE: Note: When the GitLab Deploy Token has been manually revoked, it won't be automatically created.

Migrations

Introduced in GitLab 11.4.

Database initialization and migrations for PostgreSQL can be configured to run within the application pod by setting the project variables DB_INITIALIZE and DB_MIGRATE respectively.

If present, DB_INITIALIZE will be run as a shell command within an application pod as a Helm post-install hook. As some applications will not run without a successful database initialization step, GitLab will deploy the first release without the application deployment and only the database initialization step. After the database initialization completes, GitLab will deploy a second release with the application deployment as normal.

Note that a post-install hook means that if any deploy succeeds, DB_INITIALIZE will not be processed thereafter.

If present, DB_MIGRATE will be run as a shell command within an application pod as a Helm pre-upgrade hook.

For example, in a Rails application:

- DB_INITIALIZE can be set to cd /app && RAILS_ENV=production bin/setup

- DB_MIGRATE can be set to cd /app && RAILS_ENV=production bin/update

NOTE: Note: The /app path is the directory of your project inside the Docker image as configured by Herokuish.
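The Rails example above could be written as CI/CD variables roughly as follows. This is a sketch that assumes the variables are defined in a .gitlab-ci.yml that includes the Auto DevOps template; defining them as project variables in the settings should be equivalent.

```yaml
# Hypothetical .gitlab-ci.yml fragment for a Rails application built with Herokuish.
include:
  - template: Auto-DevOps.gitlab-ci.yml

variables:
  # Run once, as a Helm post-install hook, to set up the database.
  DB_INITIALIZE: "cd /app && RAILS_ENV=production bin/setup"
  # Run on each upgrade, as a Helm pre-upgrade hook.
  DB_MIGRATE: "cd /app && RAILS_ENV=production bin/update"
```

The values are quoted so that the shell operators are passed through unchanged as a single string.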

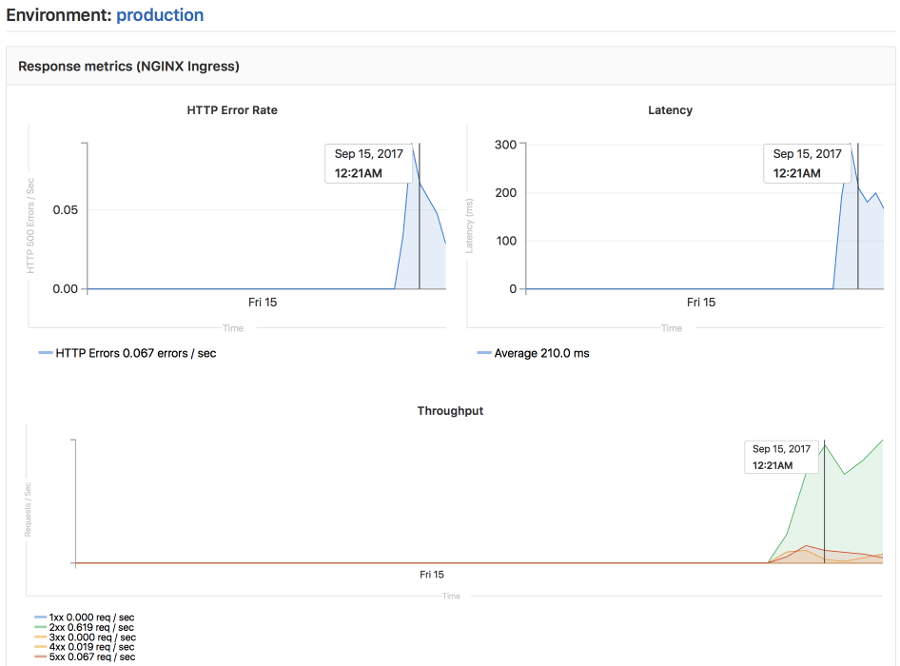

Auto Monitoring

NOTE: Note: Check the requirements for Auto Monitoring to make this stage work.

Once your application is deployed, Auto Monitoring makes it possible to monitor your application's server and response metrics right out of the box. Auto Monitoring uses Prometheus to get system metrics such as CPU and memory usage directly from Kubernetes, and response metrics such as HTTP error rates, latency, and throughput from the NGINX server.

The metrics include:

- Response Metrics: latency, throughput, error rate

- System Metrics: CPU utilization, memory utilization

In order to make use of monitoring you need to:

- Deploy Prometheus into your Kubernetes cluster

- If you would like response metrics, ensure you are running at least version 0.9.0 of NGINX Ingress and enable Prometheus metrics.

- Finally, annotate the NGINX Ingress deployment to be scraped by Prometheus using prometheus.io/scrape: "true" and prometheus.io/port: "10254".
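As an illustration of the last step, the two annotations might appear in the NGINX Ingress controller's Deployment manifest as shown below. Placing them on the pod template is an assumption based on common Prometheus scraping setups; only the annotation keys and values come from the list above, and the rest of the manifest is omitted.

```yaml
# Fragment of a Deployment manifest; only the pod template metadata is shown.
spec:
  template:
    metadata:
      annotations:
        prometheus.io/scrape: "true"   # ask Prometheus to scrape these pods
        prometheus.io/port: "10254"    # port on which NGINX Ingress serves metrics
```

Both values are quoted because Kubernetes annotation values must be strings.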

To view the metrics, open the Monitoring dashboard for a deployed environment.

Customizing

While Auto DevOps provides great defaults to get you started, you can customize almost everything to fit your needs; from custom buildpacks, to Dockerfiles, Helm charts, or even copying the complete CI/CD configuration into your project to enable staging and canary deployments, and more.

Custom buildpacks

If the automatic buildpack detection fails for your project, or if you want to use a custom buildpack, you can override the buildpack(s) using a project variable or a .buildpacks file in your project:

- Project variable - Create a project variable BUILDPACK_URL with the URL of the buildpack to use.

- .buildpacks file - Add a file in your project's repo called .buildpacks and add the URL of the buildpack to use on a line in the file. If you want to use multiple buildpacks, you can enter them in, one on each line.

CAUTION: Caution: Using multiple buildpacks isn't yet supported by Auto DevOps.
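A sketch of the project variable option, written as a .gitlab-ci.yml fragment that includes the Auto DevOps template. The buildpack URL is the one used as an example in the environment variables table; pinning it to the v142 ref is illustrative only.

```yaml
# Hypothetical .gitlab-ci.yml fragment selecting a specific buildpack.
include:
  - template: Auto-DevOps.gitlab-ci.yml

variables:
  # The part after '#' selects a Git ref of the buildpack repository.
  BUILDPACK_URL: "https://github.com/heroku/heroku-buildpack-ruby.git#v142"
```

Quoting the URL keeps the '#' and the ref from being misread by YAML tooling.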

Custom Dockerfile

If your project has a Dockerfile in the root of the project repo, Auto DevOps will build a Docker image based on the Dockerfile rather than using buildpacks. This can be much faster and result in smaller images, especially if your Dockerfile is based on Alpine.

Custom Helm Chart

Auto DevOps uses Helm to deploy your application to Kubernetes. You can override the Helm chart used by bundling up a chart into your project repo or by specifying a project variable:

- Bundled chart - If your project has a ./chart directory with a Chart.yaml file in it, Auto DevOps will detect the chart and use it instead of the default one. This can be a great way to control exactly how your application is deployed.

- Project variable - Create a project variable AUTO_DEVOPS_CHART with the URL of a custom chart to use, or create two project variables: AUTO_DEVOPS_CHART_REPOSITORY with the URL of a custom chart repository and AUTO_DEVOPS_CHART with the path to the chart.
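The two-variable form might look like the fragment below. The repository URL, repository name, and chart name are hypothetical placeholders, and the chart reference format (repository name followed by chart name) is an assumption based on how Helm usually addresses charts in a named repository; check it against the Auto DevOps template for your GitLab version.

```yaml
# Hypothetical .gitlab-ci.yml fragment pointing Auto DevOps at a custom chart repository.
include:
  - template: Auto-DevOps.gitlab-ci.yml

variables:
  AUTO_DEVOPS_CHART_REPOSITORY: https://charts.example.com  # placeholder URL
  AUTO_DEVOPS_CHART_REPOSITORY_NAME: example                # placeholder name (GitLab 11.11+)
  AUTO_DEVOPS_CHART: example/my-app                         # placeholder chart reference
```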

Custom Helm chart per environment [PREMIUM]

You can specify the use of a custom Helm chart per environment by scoping the environment variable to the desired environment. See Limiting environment scopes of variables.

Customizing .gitlab-ci.yml

If you want to modify the CI/CD pipeline used by Auto DevOps, you can copy the Auto DevOps template into your project's repo and edit as you see fit.

Assuming that your project is new or it doesn't have a .gitlab-ci.yml file present:

- From your project home page, either click on the "Set up CI/CD" button, or click on the plus button (+), then "New file"

- Pick .gitlab-ci.yml as the template type

- Select "Auto-DevOps" from the template dropdown

- Edit the template or add any jobs needed

- Give an appropriate commit message and hit "Commit changes"

TIP: Tip: The Auto DevOps template includes useful comments to help you customize it. For example, if you want deployments to go to a staging environment instead of directly to a production one, you can enable the staging job by renaming .staging to staging. Then make sure to uncomment the when key of the production job to turn it into a manual action instead of deploying automatically.

Using components of Auto DevOps

If you only require a subset of the features offered by Auto DevOps, you can include individual Auto DevOps jobs into your own .gitlab-ci.yml.

For example, to make use of Auto Build, you can add the following to your .gitlab-ci.yml:

include:
  - template: Jobs/Build.gitlab-ci.yml

Consult the Auto DevOps template for information on available jobs.

PostgreSQL database support

In order to support applications that require a database, PostgreSQL is provisioned by default. The credentials to access the database are preconfigured, but can be customized by setting the associated variables. These credentials can be used for defining a DATABASE_URL of the format:

postgres://user:password@postgres-host:postgres-port/postgres-database
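As a sketch, the preconfigured credentials could be overridden with the PostgreSQL variables listed in the environment variables table. All values below are hypothetical placeholders, and a real password is better kept in the project's CI/CD variable settings than committed to the repository.

```yaml
# Hypothetical .gitlab-ci.yml fragment customizing the provisioned PostgreSQL database.
include:
  - template: Auto-DevOps.gitlab-ci.yml

variables:
  POSTGRES_USER: app_user          # placeholder; the default is "user"
  POSTGRES_PASSWORD: change-me     # placeholder; the default is "testing-password"
  POSTGRES_DB: app_database        # placeholder; the default is $CI_ENVIRONMENT_SLUG
```

These values would then fill the user, password, and postgres-database parts of the DATABASE_URL format above.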

Environment variables

The following variables can be used for setting up the Auto DevOps domain, providing a custom Helm chart, or scaling your application. PostgreSQL can also be customized, and you can easily use a custom buildpack.

| Variable | Description |
|---|---|
| AUTO_DEVOPS_DOMAIN | The Auto DevOps domain. By default, set automatically by the Auto DevOps setting. This variable is deprecated and is scheduled to be removed. Use KUBE_INGRESS_BASE_DOMAIN instead. |
| AUTO_DEVOPS_CHART | The Helm Chart used to deploy your apps; defaults to the one provided by GitLab. |
| AUTO_DEVOPS_CHART_REPOSITORY | The Helm Chart repository used to search for charts; defaults to https://charts.gitlab.io. |
| AUTO_DEVOPS_CHART_REPOSITORY_NAME | From GitLab 11.11, this variable can be used to set the name of the Helm repository; defaults to "gitlab". |
| AUTO_DEVOPS_CHART_REPOSITORY_USERNAME | From GitLab 11.11, this variable can be used to set a username to connect to the Helm repository. Defaults to no credentials. (Also set AUTO_DEVOPS_CHART_REPOSITORY_PASSWORD) |
| AUTO_DEVOPS_CHART_REPOSITORY_PASSWORD | From GitLab 11.11, this variable can be used to set a password to connect to the Helm repository. Defaults to no credentials. (Also set AUTO_DEVOPS_CHART_REPOSITORY_USERNAME) |
| REPLICAS | The number of replicas to deploy; defaults to 1. |
| PRODUCTION_REPLICAS | The number of replicas to deploy in the production environment. This takes precedence over REPLICAS; defaults to 1. |
| CANARY_REPLICAS | The number of canary replicas to deploy for Canary Deployments; defaults to 1. |
| CANARY_PRODUCTION_REPLICAS | The number of canary replicas to deploy for Canary Deployments in the production environment. This takes precedence over CANARY_REPLICAS; defaults to 1. |
| ADDITIONAL_HOSTS | Fully qualified domain names specified as a comma-separated list that are added to the ingress hosts. |
| <ENVIRONMENT>_ADDITIONAL_HOSTS | For a specific environment, the fully qualified domain names specified as a comma-separated list that are added to the ingress hosts. This takes precedence over ADDITIONAL_HOSTS. |
| POSTGRES_ENABLED | Whether PostgreSQL is enabled; defaults to "true". Set to false to disable the automatic deployment of PostgreSQL. |
| POSTGRES_USER | The PostgreSQL user; defaults to user. Set it to use a custom username. |
| POSTGRES_PASSWORD | The PostgreSQL password; defaults to testing-password. Set it to use a custom password. |
| POSTGRES_DB | The PostgreSQL database name; defaults to the value of $CI_ENVIRONMENT_SLUG. Set it to use a custom database name. |
| POSTGRES_VERSION | Tag for the postgres Docker image to use. Defaults to 9.6.2. |
| BUILDPACK_URL | The buildpack's full URL. It can point to either Git repositories or a tarball URL. For Git repositories, it is possible to point to a specific ref, for example https://github.com/heroku/heroku-buildpack-ruby.git#v142 |
| SAST_CONFIDENCE_LEVEL | The minimum confidence level of security issues you want to be reported; 1 for Low, 2 for Medium, 3 for High; defaults to 3. |
| DEP_SCAN_DISABLE_REMOTE_CHECKS | Whether remote Dependency Scanning checks are disabled; defaults to "false". Set to "true" to disable checks that send data to GitLab central servers. Read more about remote checks. |
| DB_INITIALIZE | From GitLab 11.4, this variable can be used to specify the command to run to initialize the application's PostgreSQL database. It runs inside the application pod. |
| DB_MIGRATE | From GitLab 11.4, this variable can be used to specify the command to run to migrate the application's PostgreSQL database. It runs inside the application pod. |
| STAGING_ENABLED | From GitLab 10.8, this variable can be used to define a deploy policy for staging and production environments. |
| CANARY_ENABLED | From GitLab 11.0, this variable can be used to define a deploy policy for canary environments. |
| INCREMENTAL_ROLLOUT_MODE | From GitLab 11.4, this variable, if present, can be used to enable an incremental rollout of your application for the production environment. Set to manual for manual rollout actions, or to timed for automatic rollout with a 5 minute delay between increments (see Deployment strategy). |
| TEST_DISABLED | From GitLab 11.0, this variable can be used to disable the test job. If the variable is present, the job will not be created. |
| CODE_QUALITY_DISABLED | From GitLab 11.0, this variable can be used to disable the codequality job. If the variable is present, the job will not be created. |
| LICENSE_MANAGEMENT_DISABLED | From GitLab 11.0, this variable can be used to disable the license_management job. If the variable is present, the job will not be created. |
| SAST_DISABLED | From GitLab 11.0, this variable can be used to disable the sast job. If the variable is present, the job will not be created. |
| DEPENDENCY_SCANNING_DISABLED | From GitLab 11.0, this variable can be used to disable the dependency_scanning job. If the variable is present, the job will not be created. |
| CONTAINER_SCANNING_DISABLED | From GitLab 11.0, this variable can be used to disable the sast:container job. If the variable is present, the job will not be created. |
| REVIEW_DISABLED | From GitLab 11.0, this variable can be used to disable the review and the manual review:stop job. If the variable is present, these jobs will not be created. |
| DAST_DISABLED | From GitLab 11.0, this variable can be used to disable the dast job. If the variable is present, the job will not be created. |

PERFORMANCE_DISABLED |

From GitLab 11.0, this variable can be used to disable the performance job. If the variable is present, the job will not be created. |

K8S_SECRET_* |

From GitLab 11.7, any variable prefixed with K8S_SECRET_ will be made available by Auto DevOps as environment variables to the deployed application. |

KUBE_INGRESS_BASE_DOMAIN |

From GitLab 11.8, this variable can be used to set a domain per cluster. See cluster domains for more information. |

ROLLOUT_RESOURCE_TYPE |

From GitLab 11.9, this variable allows specification of the resource type being deployed when using a custom helm chart. Default value is deployment. |

HELM_UPGRADE_EXTRA_ARGS |

From GitLab 11.11, this variable allows extra arguments in helm commands when deploying the application. Note that using quotes will not prevent word splitting. |
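The *_DISABLED variables listed above are described as acting on presence alone. A minimal Python sketch of that rule follows, using the job names given in the descriptions; the function name and the mapping are illustrative and are not part of Auto DevOps itself.

```python
# Illustrative mapping, taken from the variable descriptions above.
DISABLE_VARIABLES = {
    "TEST_DISABLED": ["test"],
    "CODE_QUALITY_DISABLED": ["codequality"],
    "LICENSE_MANAGEMENT_DISABLED": ["license_management"],
    "SAST_DISABLED": ["sast"],
    "DEPENDENCY_SCANNING_DISABLED": ["dependency_scanning"],
    "CONTAINER_SCANNING_DISABLED": ["sast:container"],
    "REVIEW_DISABLED": ["review", "review:stop"],
    "DAST_DISABLED": ["dast"],
    "PERFORMANCE_DISABLED": ["performance"],
}


def skipped_jobs(variables):
    """Return the jobs that would not be created for the given CI/CD variables.

    Only the presence of a key is checked in this sketch.
    """
    skipped = []
    for name, jobs in DISABLE_VARIABLES.items():
        if name in variables:
            skipped.extend(jobs)
    return skipped


print(skipped_jobs({"TEST_DISABLED": "1", "REVIEW_DISABLED": "1"}))
```

With both variables defined, the sketch reports the test, review, and review:stop jobs as skipped.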

TIP: Tip: Set up the replica variables using a project variable and scale your application by just redeploying it!

CAUTION: Caution: You should not scale your application using Kubernetes directly. This can cause confusion with Helm not detecting the change, and subsequent deploys with Auto DevOps can undo your changes.

Application secret variables

Introduced in GitLab 11.7.

Some applications need to define secret variables that are accessible by the deployed application. Auto DevOps detects variables where the key starts with K8S_SECRET_ and makes these prefixed variables available to the deployed application, as environment variables.

To configure your application variables:

- Go to your project's Settings > CI/CD, then expand the section called Variables.
- Create a CI Variable, ensuring the key is prefixed with K8S_SECRET_. For example, you can create a variable with key K8S_SECRET_RAILS_MASTER_KEY.
- Run an Auto DevOps pipeline either by manually creating a new pipeline or by pushing a code change to GitLab.

Auto DevOps pipelines will take your application secret variables to populate a Kubernetes secret. This secret is unique per environment. When deploying your application, the secret is loaded as environment variables in the container running the application. Following the example above, you can see the secret below containing the RAILS_MASTER_KEY variable.

$ kubectl get secret production-secret -n minimal-ruby-app-54 -o yaml
apiVersion: v1
data:
  RAILS_MASTER_KEY: MTIzNC10ZXN0
kind: Secret
metadata:
  creationTimestamp: 2018-12-20T01:48:26Z
  name: production-secret
  namespace: minimal-ruby-app-54
  resourceVersion: "429422"
  selfLink: /api/v1/namespaces/minimal-ruby-app-54/secrets/production-secret
  uid: 57ac2bfd-03f9-11e9-b812-42010a9400e4
type: Opaque
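The value under data in the output above is base64-encoded, which is how Kubernetes stores Secret data. The following Python sketch decodes that value and shows how a K8S_SECRET_ variable could map to such an entry. The helper name is hypothetical, and stripping the prefix is an assumption inferred from the RAILS_MASTER_KEY key in the example.

```python
import base64

PREFIX = "K8S_SECRET_"


def secret_data(ci_variables):
    """Build a Secret-style data mapping from K8S_SECRET_-prefixed variables.

    Assumption: the prefix is dropped and the value is base64-encoded,
    matching the shape of the kubectl output above.
    """
    data = {}
    for key, value in ci_variables.items():
        if key.startswith(PREFIX):
            name = key[len(PREFIX):]
            data[name] = base64.b64encode(value.encode("utf-8")).decode("ascii")
    return data


# Decode the value from the example secret.
print(base64.b64decode("MTIzNC10ZXN0").decode("utf-8"))  # prints 1234-test

# Variables without the prefix are ignored in this sketch.
print(secret_data({"K8S_SECRET_RAILS_MASTER_KEY": "1234-test", "OTHER": "x"}))
```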

CAUTION: Caution: Variables with multiline values are not currently supported due to limitations with the current Auto DevOps scripting environment.

NOTE: Note: Environment variables are generally considered immutable in a Kubernetes pod. Therefore, if you update an application secret without changing any code then manually create a new pipeline, you will find that any running application pods will not have the updated secrets. In this case, you can either push a code update to GitLab to force the Kubernetes Deployment to recreate pods or manually delete running pods to cause Kubernetes to create new pods with updated secrets.

Advanced replica variables setup

Apart from the two replica-related variables for production mentioned above, you can also use others for different environments.

There's a very specific mapping between Kubernetes' label named track, GitLab CI/CD environment names, and the replicas environment variable. The general rule is: TRACK_ENV_REPLICAS. Where:

- TRACK: The capitalized value of the track Kubernetes label in the Helm Chart app definition. If not set, it is left out of the variable name.
- ENV: The capitalized environment name of the deploy job that is set in .gitlab-ci.yml.

That way, you can define your own TRACK_ENV_REPLICAS variables with which you will be able to scale the pod's replicas easily.

In the example below, the environment's name is qa and it deploys the track foo which would result in looking for the FOO_QA_REPLICAS environment variable:

QA testing:
  stage: deploy
  environment:
    name: qa
  script:
    - deploy foo

The track foo being referenced would also need to be defined in the application's Helm chart, like:

replicaCount: 1
image:
  repository: gitlab.example.com/group/project
  tag: stable
  pullPolicy: Always
  secrets:
    - name: gitlab-registry
application:
  track: foo
  tier: web
service:
  enabled: true
  name: web
  type: ClusterIP
  url: http://my.host.com/
  externalPort: 5000
  internalPort: 5000
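The TRACK_ENV_REPLICAS naming rule can be sketched in Python. The helpers below are hypothetical: they only build the variable name from a track and an environment name, and the fallback to 1 is an assumption based on the defaults listed in the variables table.

```python
def replicas_variable(env, track=None):
    """Build the TRACK_ENV_REPLICAS variable name.

    The track part is left out when no track is given.
    """
    parts = [track.upper()] if track else []
    parts += [env.upper(), "REPLICAS"]
    return "_".join(parts)


def replicas_for(variables, env, track=None, default=1):
    """Look up the replica count, falling back to a default (assumed to be 1)."""
    return int(variables.get(replicas_variable(env, track), default))


print(replicas_variable("qa", "foo"))             # FOO_QA_REPLICAS
print(replicas_variable("production"))            # PRODUCTION_REPLICAS
print(replicas_variable("production", "canary"))  # CANARY_PRODUCTION_REPLICAS
print(replicas_for({"FOO_QA_REPLICAS": "3"}, "qa", "foo"))  # 3
```

The second and third calls reproduce the PRODUCTION_REPLICAS and CANARY_PRODUCTION_REPLICAS names from the variables table, which suggests those two are instances of the same rule.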

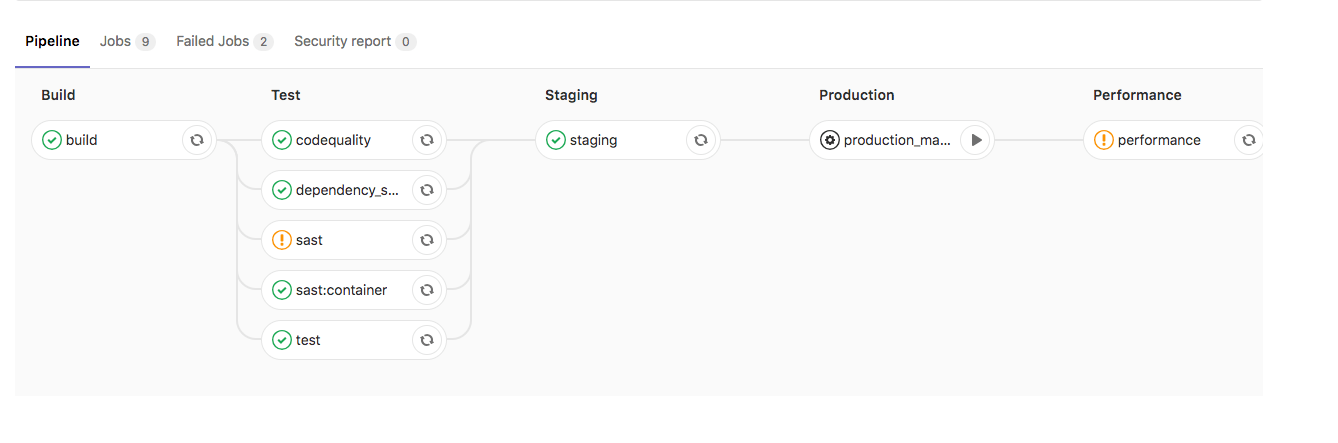

Deploy policy for staging and production environments

Introduced in GitLab 10.8.

TIP: Tip: You can also set this inside your project's settings.

The normal behavior of Auto DevOps is to use Continuous Deployment, pushing automatically to the production environment every time a new pipeline is run on the default branch. However, there are cases where you might want to use a staging environment and deploy to production manually. For this scenario, the STAGING_ENABLED environment variable was introduced.

If STAGING_ENABLED is defined in your project (e.g., set STAGING_ENABLED to 1 as a CI/CD variable), then the application will be automatically deployed to a staging environment, and a production_manual job will be created for you when you're ready to manually deploy to production.

Deploy policy for canary environments [PREMIUM]

Introduced in GitLab 11.0.

A canary environment can be used before any changes are deployed to production.

If CANARY_ENABLED is defined in your project (e.g., set CANARY_ENABLED to 1 as a CI/CD variable) then two manual jobs will be created:

- canary, which will deploy the application to the canary environment.
- production_manual, which is to be used by you when you're ready to manually deploy to production.

Incremental rollout to production [PREMIUM]

Introduced in GitLab 10.8.

TIP: Tip: You can also set this inside your project's settings.

When you have a new version of your app to deploy in production, you may want to use an incremental rollout to replace just a few pods with the latest code. This will allow you to first check how the app is behaving, and later manually increase the rollout up to 100%.

If INCREMENTAL_ROLLOUT_MODE is set to manual in your project, then instead of the standard production job, 4 different manual jobs will be created:

- rollout 10%
- rollout 25%
- rollout 50%
- rollout 100%

The percentage is based on the REPLICAS variable, which defines the number of pods you want to have for your deployment. For example, if REPLICAS is 10 and you run the rollout 10% job, there will be 1 new pod and 9 old ones.
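The arithmetic behind that example can be written as a minimal Python sketch. Rounding down and always keeping at least one new pod are assumptions made for illustration; the text above only states the 10-replica, 10% case.

```python
def rollout_pods(replicas, percentage):
    """Split a deployment into (new, old) pod counts for one rollout step.

    Assumptions: the share is rounded down, and at least one new pod
    is always created.
    """
    new = max(1, replicas * percentage // 100)
    return new, replicas - new


for pct in (10, 25, 50, 100):
    new, old = rollout_pods(10, pct)
    print(f"rollout {pct}%: {new} new, {old} old")
```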

To start a job, click on the play icon next to the job's name. You are not required to go from 10% to 100%; you can jump to whatever job you want. You can also scale down by running a lower percentage job, just before hitting 100%. Once you get to 100%, you cannot scale down, and you'd have to roll back by redeploying the old version using the rollback button in the environment page.

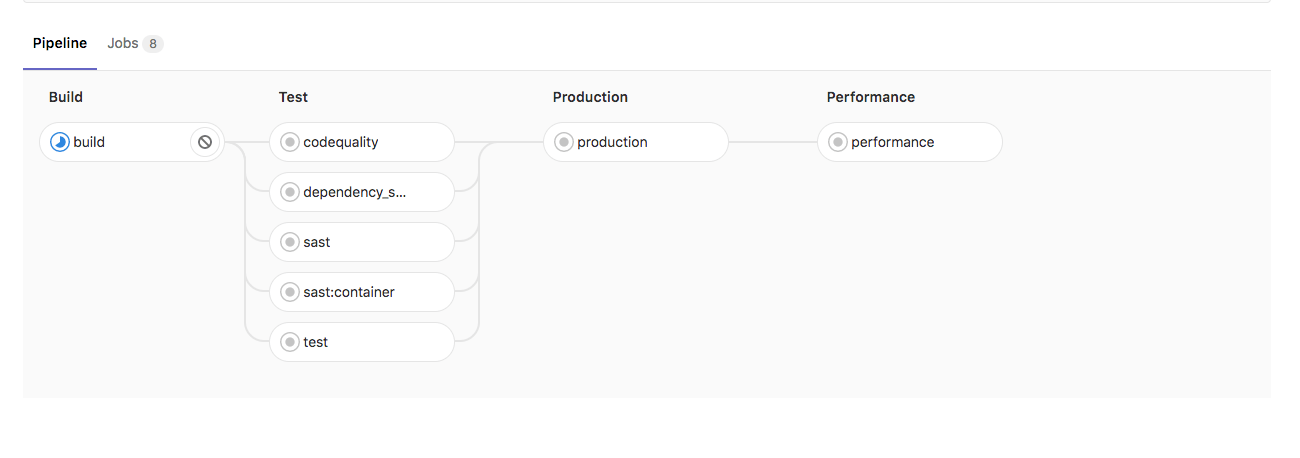

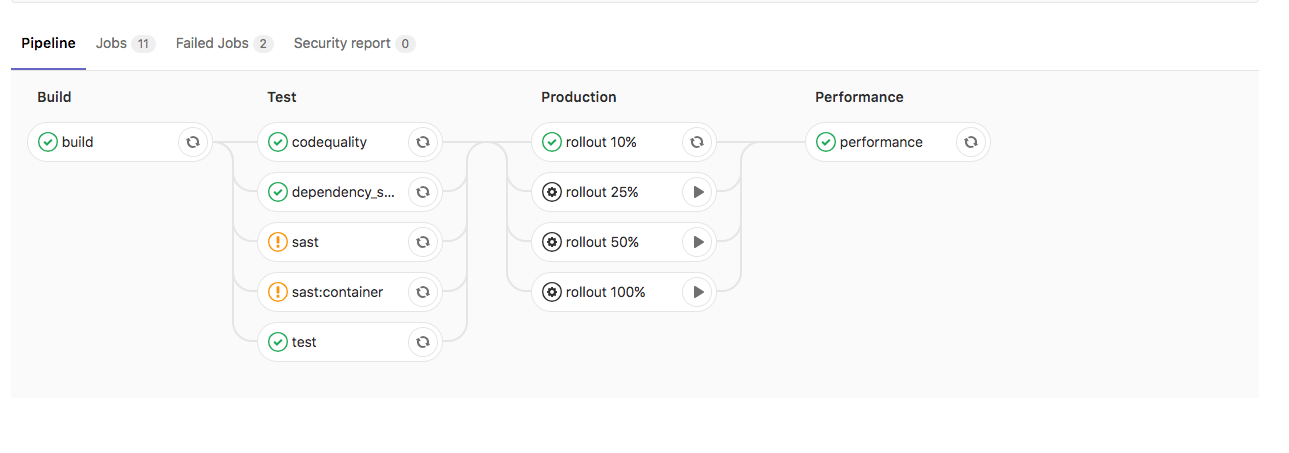

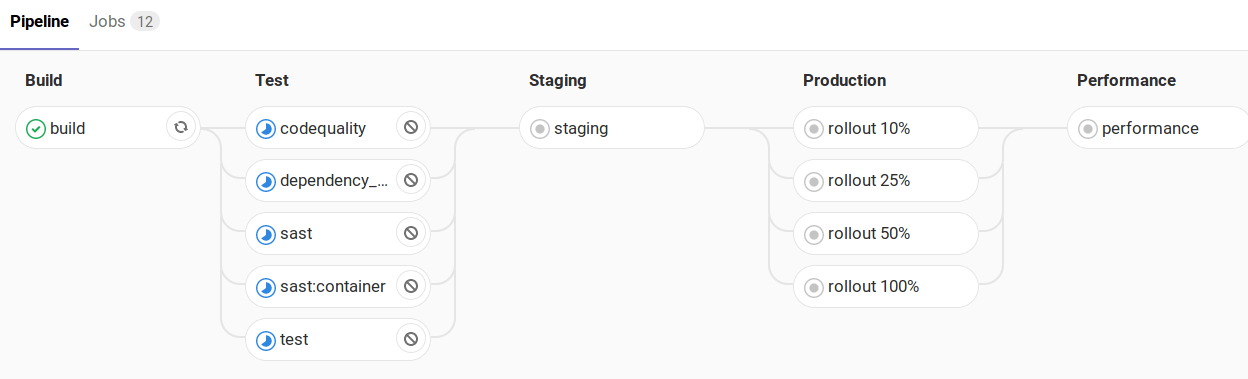

Below, you can see how the pipeline will look if the rollout or staging variables are defined.

Without INCREMENTAL_ROLLOUT_MODE and without STAGING_ENABLED:

Without INCREMENTAL_ROLLOUT_MODE and with STAGING_ENABLED:

With INCREMENTAL_ROLLOUT_MODE set to manual and without STAGING_ENABLED:

With INCREMENTAL_ROLLOUT_MODE set to manual and with STAGING_ENABLED:

CAUTION: Caution: Before GitLab 11.4 this feature was enabled by the presence of the INCREMENTAL_ROLLOUT_ENABLED environment variable. This configuration is deprecated and will be removed in the future.

Timed incremental rollout to production [PREMIUM]

Introduced in GitLab 11.4.

TIP: Tip: You can also set this inside your project's settings.

This configuration is based on incremental rollout to production. Everything behaves the same way, except:

- It's enabled by setting the INCREMENTAL_ROLLOUT_MODE variable to timed.
- Instead of the standard production job, the following jobs with a 5 minute delay between each are created:
  - timed rollout 10%
  - timed rollout 25%
  - timed rollout 50%
  - timed rollout 100%
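To summarize the two rollout modes, here is a minimal Python sketch of which production jobs each INCREMENTAL_ROLLOUT_MODE value yields, following the descriptions in this and the previous section. The function is illustrative only: it does not model staging or canary jobs, and treating any other value as "no rollout" is an assumption.

```python
ROLLOUT_STEPS = (10, 25, 50, 100)


def production_jobs(variables):
    """Return the production job names for the given CI/CD variables."""
    mode = variables.get("INCREMENTAL_ROLLOUT_MODE")
    if mode == "manual":
        return [f"rollout {step}%" for step in ROLLOUT_STEPS]
    if mode == "timed":
        return [f"timed rollout {step}%" for step in ROLLOUT_STEPS]
    # Assumption: without a recognized mode, the standard production job is used.
    return ["production"]


print(production_jobs({"INCREMENTAL_ROLLOUT_MODE": "timed"}))
```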

Currently supported languages

NOTE: Note: Not all buildpacks support Auto Test yet, as it's a relatively new enhancement. All of Heroku's officially supported languages support it, as do some third-party buildpacks. For example, Go, Node, Java, PHP, Python, Ruby, Gradle, Scala, and Elixir all support Auto Test, but notably the multi-buildpack does not.

As of GitLab 10.0, the supported buildpacks are:

- heroku-buildpack-multi v1.0.0

- heroku-buildpack-ruby v168

- heroku-buildpack-nodejs v99

- heroku-buildpack-clojure v77

- heroku-buildpack-python v99

- heroku-buildpack-java v53

- heroku-buildpack-gradle v23

- heroku-buildpack-scala v78

- heroku-buildpack-play v26

- heroku-buildpack-php v122

- heroku-buildpack-go v72

- heroku-buildpack-erlang fa17af9

- buildpack-nginx v8

Limitations

The following restrictions apply.

Private project support

CAUTION: Caution: Private project support in Auto DevOps is experimental.

When a project has been marked as private, GitLab's Container Registry requires authentication when downloading containers. Auto DevOps will automatically provide the required authentication information to Kubernetes, allowing temporary access to the registry. Authentication credentials will be valid while the pipeline is running, allowing for a successful initial deployment.

After the pipeline completes, Kubernetes will no longer be able to access the Container Registry. Restarting a pod, scaling a service, or other actions which require on-going access to the registry may fail. On-going secure access is planned for a subsequent release.

Troubleshooting

- Auto Build and Auto Test may fail in detecting your language/framework. There may be no buildpack for your application, or your application may be missing the key files the buildpack is looking for. For example, for Ruby apps, you must have a Gemfile to be properly detected, even though it is possible to write a Ruby app without a Gemfile. Try specifying a custom buildpack.
- Auto Test may fail because of a mismatch between testing frameworks. In this case, you may need to customize your .gitlab-ci.yml with your test commands.
- Auto Deploy will fail if GitLab cannot create a Kubernetes namespace and service account for your project. For help debugging this issue, see Troubleshooting failed deployment jobs.

Disable the banner instance wide

If an administrator would like to disable the banners on an instance level, this feature can be disabled either through the console:

sudo gitlab-rails console

Then run:

Feature.get(:auto_devops_banner_disabled).enable

Or through the HTTP API with an admin access token:

curl --data "value=true" --header "PRIVATE-TOKEN: personal_access_token" https://gitlab.example.com/api/v4/features/auto_devops_banner_disabled
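The same request can be prepared from Python with the standard library. This is a sketch only: the host and token are the placeholders from the curl example above, the helper name is hypothetical, and the request is built but not sent.

```python
import urllib.parse
import urllib.request

GITLAB_URL = "https://gitlab.example.com"  # placeholder host from the curl example
TOKEN = "personal_access_token"            # placeholder; use an admin access token


def banner_disable_request(base_url, token):
    """Build the POST request that sets the auto_devops_banner_disabled feature."""
    body = urllib.parse.urlencode({"value": "true"}).encode("ascii")
    return urllib.request.Request(
        f"{base_url}/api/v4/features/auto_devops_banner_disabled",
        data=body,
        headers={"PRIVATE-TOKEN": token},
        method="POST",
    )


request = banner_disable_request(GITLAB_URL, TOKEN)
print(request.get_method(), request.full_url)
# To actually send it: urllib.request.urlopen(request)
```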