- Modify the `CreateOrUpdateSecret` function in `api.go` to include a

`Delete` operation for the secret

- Modify the `DeleteOrgSecret` function in `action.go` to include a

`DeleteSecret` operation for the organization

- Modify the `DeleteSecret` function in `action.go` to include a

`DeleteSecret` operation for the repository

- Modify the `v1_json.tmpl` template file to update the `operationId`

and `summary` for the `deleteSecret` operation in both the organization

and repository sections

---------

Signed-off-by: Bo-Yi Wu <appleboy.tw@gmail.com>

Just like `models/unittest`, the testing helper functions should be in a

separate package: `contexttest`

And complete the TODO:

> // TODO: move this function to other packages, because it depends on

"models" package

spec:

https://docs.github.com/en/rest/actions/secrets?apiVersion=2022-11-28#create-or-update-a-repository-secret

- Add a new route for creating or updating a secret value in a

repository

- Create a new file `routers/api/v1/repo/action.go` with the

implementation of the `CreateOrUpdateSecret` function

- Update the Swagger documentation for the `updateRepoSecret` operation

in the `v1_json.tmpl` template file

---------

Signed-off-by: Bo-Yi Wu <appleboy.tw@gmail.com>

Co-authored-by: Giteabot <teabot@gitea.io>

Fixes: #26333.

Previously, this endpoint only updates the `StatusCheckContexts` field

when `EnableStatusCheck==true`, which makes it impossible to clear the

array otherwise.

This patch uses slice `nil`-ness to decide whether to update the list of

checks. The field is ignored when either the client explicitly passes in

a null, or just omits the field from the json ([which causes

`json.Unmarshal` to leave the struct field

unchanged](https://go.dev/play/p/Z2XHOILuB1Q)). I think this is a better

measure of intent than whether the `EnableStatusCheck` flag was set,

because it matches the semantics of other field types.
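The nil-versus-empty distinction described above can be sketched as follows. The `editOptions` struct, the `status_check_contexts` JSON key and the `shouldUpdateContexts` helper are assumptions made for illustration, not the PR's actual types; only the `encoding/json` behavior is standard.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// editOptions is a hypothetical, trimmed-down request body.
type editOptions struct {
	StatusCheckContexts []string `json:"status_check_contexts"`
}

// shouldUpdateContexts reports whether the client asked to change the list.
// A nil slice (field omitted or explicit null) means "leave it alone";
// an empty but non-nil slice means "clear it".
func shouldUpdateContexts(body string) (bool, error) {
	var opts editOptions
	if err := json.Unmarshal([]byte(body), &opts); err != nil {
		return false, err
	}
	return opts.StatusCheckContexts != nil, nil
}

func main() {
	for _, body := range []string{
		`{}`,
		`{"status_check_contexts": null}`,
		`{"status_check_contexts": []}`,
		`{"status_check_contexts": ["ci/build"]}`,
	} {
		update, err := shouldUpdateContexts(body)
		fmt.Println(body, "update:", update, "err:", err)
	}
}
```

With this check, a client clears the list by sending an empty array and leaves it untouched by omitting the field.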

Also adds a test case. I noticed that [`testAPIEditBranchProtection`

only checks the branch

name](c1c83dbaec/tests/integration/api_branch_test.go (L68))

and no other fields, so I added some extra `GET` calls and specific

checks to make sure the fields are changing properly.

I added those checks to the existing integration test; is that the right
place for them?

## Archived labels

This adds the structure to allow for archived labels.

Archived labels are, just like closed milestones or projects, a medium to hide information without deleting it.

It is especially useful if there are outdated labels that should no longer be used without deleting the label entirely.

## Changes

1. UI and API have been equipped with the support to mark a label as archived

2. The time when a label has been archived will be stored in the DB

## Outsourced for the future

There's no special handling for archived labels at the moment.

This will be done in the future.

## Screenshots

Part of https://github.com/go-gitea/gitea/issues/25237

---------

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Fix #24662.

Replace #24822 and #25708 (although it has been merged)

## Background

In the past, Gitea supported issue searching with a keyword and

conditions in a less efficient way. It worked by searching for issues

with the keyword and obtaining limited IDs (as it is heavy to get all)

on the indexer (bleve/elasticsearch/meilisearch), and then querying with

conditions on the database to find a subset of the found IDs. This is

why the results could be incomplete.

To solve this issue, we need to store all fields that could be used as

conditions in the indexer and support both keyword and additional

conditions when searching with the indexer.

## Major changes

- Redefine `IndexerData` to include all fields that could be used as

filter conditions.

- Refactor `Search(ctx context.Context, kw string, repoIDs []int64,

limit, start int, state string)` to `Search(ctx context.Context, options

*SearchOptions)`, so it supports more conditions now.

- Change the data type stored in `issueIndexerQueue`. Use

`IndexerMetadata` instead of `IndexerData` in case the data has been

updated while it is in the queue. This also reduces the storage size of

the queue.

- Enhance searching with Bleve/Elasticsearch/Meilisearch, make them

fully support `SearchOptions`. Also, update the data versions.

- Keep most of the database indexer logic, but remove
`issues.SearchIssueIDsByKeyword` in `models` to avoid confusion about where
the entry point for searching issues is.

- Start a Meilisearch instance to test it in unit tests.

- Add unit tests with almost full coverage to test

Bleve/Elasticsearch/Meilisearch indexer.

---------

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

To avoid deadlocks, almost all database-related functions should take
`ctx` as the first parameter.

This PR refactors some of these functions.

Before: the concept "Content string" is used everywhere. It has some

problems:

1. Sometimes it means "base64 encoded content", sometimes it means "raw

binary content"

2. It doesn't work with large files, eg: uploading a 1G LFS file would

make Gitea process OOM

This PR does the refactoring: use "ContentReader" / "ContentBase64"

instead of "Content"

This PR is not breaking because the key in API JSON is still "content":

`` ContentBase64 string `json:"content"` ``
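A minimal sketch of the naming split, assuming a hypothetical `changeFile` request type: the base64 text keeps the `content` JSON key, while consumers read the raw bytes through an `io.Reader` rather than a second string called "Content". The helper names are illustrative and not taken from the PR.

```go
package main

import (
	"encoding/base64"
	"fmt"
	"io"
	"strings"
)

// changeFile is a hypothetical request type; the JSON key stays "content".
type changeFile struct {
	ContentBase64 string `json:"content"`
}

// contentReader decodes the payload lazily, so callers can stream the raw
// bytes instead of holding a fully decoded copy in memory.
func (c changeFile) contentReader() io.Reader {
	return base64.NewDecoder(base64.StdEncoding, strings.NewReader(c.ContentBase64))
}

// decodeAll drains the reader; it exists only to show the result here.
func decodeAll(c changeFile) (string, error) {
	raw, err := io.ReadAll(c.contentReader())
	return string(raw), err
}

func main() {
	s, err := decodeAll(changeFile{ContentBase64: "aGVsbG8="})
	fmt.Println(s, err)
}
```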

A couple of notes:

* Future changes should refactor arguments into a struct

* This filtering only is supported by meilisearch right now

* Issue index number is bumped which will cause a re-index

Fix #25558

Extract from #22743

This PR adds a repository check when creating/deleting branches via the
API. Mirror repositories and archived repositories cannot do that.

This adds an API for uploading and deleting avatars of Users, Repos
and Organisations. I'm not sure if this should also be added to the
Admin API.

Resolves #25344

---------

Co-authored-by: silverwind <me@silverwind.io>

Co-authored-by: Giteabot <teabot@gitea.io>

Related #14180

Related #25233

Related #22639

Close #19786

Related #12763

This PR changes all branch retrieval methods from reading git data to
reading the database, to reduce git read operations.

- [x] Sync git branches information into database when push git data

- [x] Create a new table `Branch`, merge some columns of `DeletedBranch`

into `Branch` table and drop the table `DeletedBranch`.

- [x] Read `Branch` table when visit `code` -> `branch` page

- [x] Read `Branch` table when list branch names in `code` page dropdown

- [x] Read `Branch` table when list git ref compare page

- [x] Provide a button in admin page to manually sync all branches.

- [x] Sync branches if repository is not empty but database branches are

empty when visiting pages with branches list

- [x] Use `commit_time desc` as the default `FindBranch` ordering to stay
consistent with the previous behavior; deleted branches are always at the end.

---------

Co-authored-by: Jason Song <i@wolfogre.com>

In the process of doing a bit of automation via the API, we've

discovered a _small_ issue in the Swagger definition. We tried to create

a push mirror for a repository, but our generated client raised an

exception due to an unexpected status code.

When looking at this function:

3c7f5ed7b5/routers/api/v1/repo/mirror.go (L236-L240)

We see it defines `201 - Created` as response:

3c7f5ed7b5/routers/api/v1/repo/mirror.go (L260-L262)

But it actually returns `200 - OK`:

3c7f5ed7b5/routers/api/v1/repo/mirror.go (L373)

So I've just updated the Swagger definitions to match the code😀

---------

Co-authored-by: Giteabot <teabot@gitea.io>

1. The "web" package shouldn't depend on the "modules/context" package;

instead, let each "web context" register themselves to the "web"

package.

2. The old Init/Free doesn't make sense, so simplify it

* The ctx in "Init(ctx)" is never used, and shouldn't be used that way

* The "Free" is never called and shouldn't be called because the SSPI

instance is shared

---------

Co-authored-by: Giteabot <teabot@gitea.io>

Follow up #22405

Fix #20703

This PR rewrites the storage configuration read sequence, with some
breaking changes and tests. It is stricter than before and also fixes some
inheritance problems.

- Move storage's MinioConfig struct into setting, so after the

configuration loading, the values will be stored into the struct but not

still on some section.

- All storage configuration should be stored in one section;
configuration items cannot be overridden by multiple sections. The
priority of configuration is `[attachment]` > `[storage.attachments]` |
`[storage.customized]` > `[storage]` > `default`

- The extra override configuration items, currently `SERVE_DIRECT`,
`MINIO_BASE_PATH` and `MINIO_BUCKET`, can be configured in another
section. The priority of the override configuration is `[attachment]` >
`[storage.attachments]` > `default`.

- Add more tests for storage configuration.

- Update the storage documentation.

---------

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Fixes some issues with the swagger documentation for the new multiple

files API endpoint (#24887) which were overlooked when submitting the

original PR:

1. add some missing parameter descriptions

2. set correct `required` option for required parameters

3. change endpoint description to match its full functionality (every

kind of file modification is supported, not just creating and updating)

This addresses some things from #24406 that came up after the PR was

merged. Mostly from @delvh.

---------

Co-authored-by: silverwind <me@silverwind.io>

Co-authored-by: delvh <dev.lh@web.de>

This PR creates an API endpoint for creating/updating/deleting multiple

files in one API call similar to the solution provided by

[GitLab](https://docs.gitlab.com/ee/api/commits.html#create-a-commit-with-multiple-files-and-actions).

To achieve this, the `CreateOrUpdateRepoFile` and `DeleteRepoFile` functions
in the files service are unified into one function supporting multiple files
and actions.

Resolves #14619

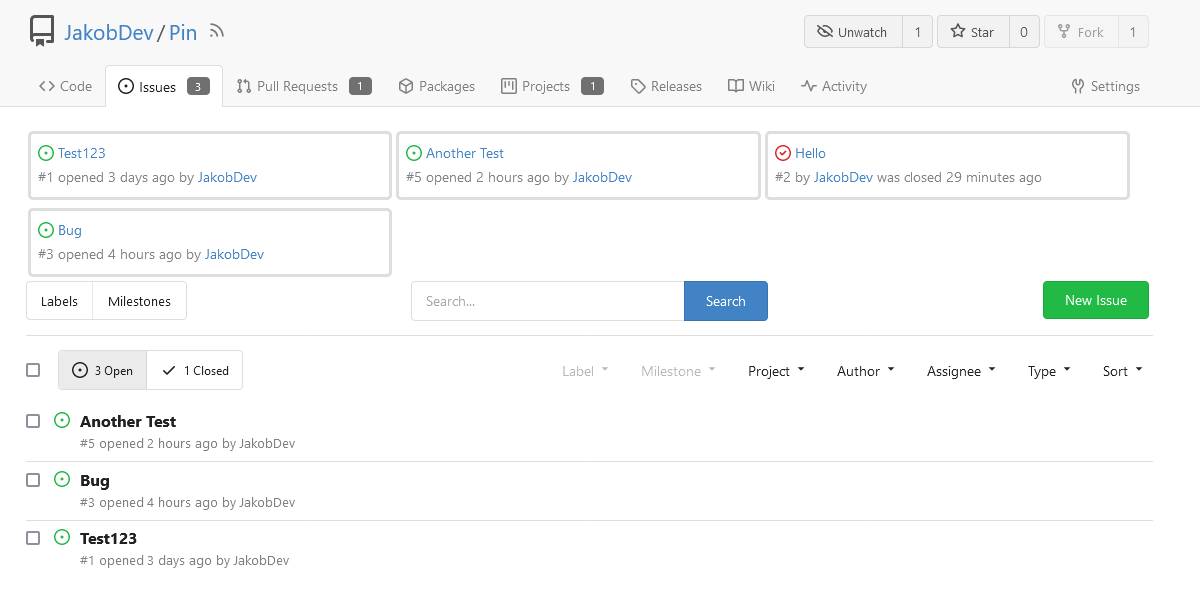

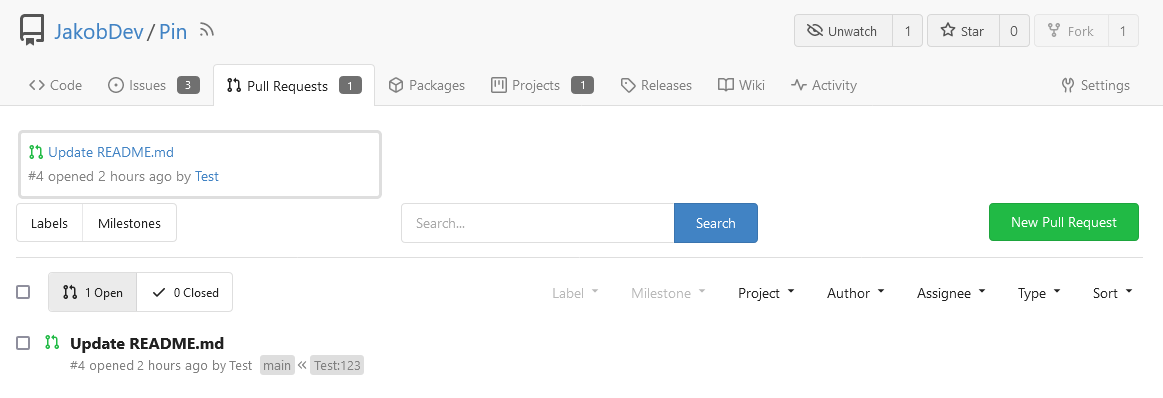

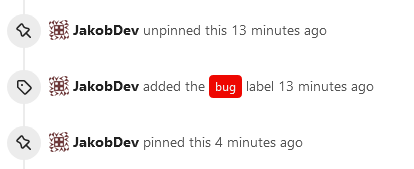

This adds the ability to pin important Issues and Pull Requests. You can

also move pinned Issues around to change their Position. Resolves #2175.

## Screenshots

The Design was mostly copied from the Projects Board.

## Implementation

This uses a new `pin_order` Column in the `issue` table. If the value is

set to 0, the Issue is not pinned. If it's set to a bigger value, the

value is the Position. 1 means it's the first pinned Issue, 2 means it's

the second one etc. This is divided into Issues and Pull requests for each

Repo.
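The `pin_order` semantics above can be sketched with an in-memory example. The `issue` struct and the `pinnedIDs` helper are hypothetical; the real implementation works on the `issue` table and is not shown in this description.

```go
package main

import (
	"fmt"
	"sort"
)

// issue is a hypothetical, minimal view of a row in the `issue` table.
type issue struct {
	ID       int64
	PinOrder int // 0 = not pinned, 1 = first pinned, 2 = second, ...
}

// pinnedIDs returns the IDs of the pinned issues in display order.
func pinnedIDs(issues []issue) []int64 {
	pinned := make([]issue, 0, len(issues))
	for _, i := range issues {
		if i.PinOrder > 0 {
			pinned = append(pinned, i)
		}
	}
	sort.Slice(pinned, func(a, b int) bool {
		return pinned[a].PinOrder < pinned[b].PinOrder
	})
	ids := make([]int64, len(pinned))
	for n, i := range pinned {
		ids[n] = i.ID
	}
	return ids
}

func main() {
	fmt.Println(pinnedIDs([]issue{
		{ID: 10, PinOrder: 2},
		{ID: 11}, // not pinned
		{ID: 12, PinOrder: 1},
	}))
}
```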

## TODO

- [x] You can currently pin as many Issues as you want. Maybe we should

add a Limit, which is configurable. GitHub uses 3, but I prefer 6, as

this is better for bigger Projects, but I'm open for suggestions.

- [x] Pin and Unpin events need to be added to the Issue history.

- [x] Tests

- [x] Migration

**The feature itself is currently fully working, so testers who may find
weird edge cases are very welcome!**

---------

Co-authored-by: silverwind <me@silverwind.io>

Co-authored-by: Giteabot <teabot@gitea.io>

Replace #16455

Close #21803

Mixing different Gitea contexts together causes some problems:

1. Unable to respond with proper content when an error occurs, eg: Web
should respond with HTML while API should respond with JSON

2. Unclear dependency, eg: it's unclear when Context is used in

APIContext, which fields should be initialized, which methods are

necessary.

To make things clear, this PR introduces a Base context that only
provides basic Req/Resp/Data features.

This PR mainly moves code. There are still many legacy problems and
TODOs in the code; unrelated changes are left to future PRs.

This PR

- [x] Move some functions from `issues.go` to `issue_stats.go` and

`issue_label.go`

- [x] Remove duplicated issue options `UserIssueStatsOption` to keep

only one `IssuesOptions`

#### Added

- API: Create a branch directly from commit on the create branch API

- Added `old_ref_name` parameter to allow creating a new branch from a

specific commit, tag, or branch.

- Deprecated `old_branch_name` parameter in favor of the new

`old_ref_name` parameter.
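One way a handler could honor both parameters during the deprecation period is sketched below. The struct, its JSON tags and the fallback rule are assumptions for illustration; the description above only names the two parameters.

```go
package main

import "fmt"

// createBranchOptions is a hypothetical request body carrying both the new
// parameter and the deprecated one.
type createBranchOptions struct {
	OldRefName    string `json:"old_ref_name"`
	OldBranchName string `json:"old_branch_name"` // deprecated
}

// sourceRef picks the ref to branch from: the new parameter wins, the
// deprecated one is only a fallback. An empty result would leave the choice
// to the caller (for example the default branch), which is an assumption.
func sourceRef(o createBranchOptions) string {
	if o.OldRefName != "" {
		return o.OldRefName
	}
	return o.OldBranchName
}

func main() {
	fmt.Println(sourceRef(createBranchOptions{OldRefName: "v1.0.0", OldBranchName: "main"}))
	fmt.Println(sourceRef(createBranchOptions{OldBranchName: "main"}))
}
```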

---------

Co-authored-by: silverwind <me@silverwind.io>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

The `GetAllCommits` endpoint can be pretty slow, especially in repos

with a lot of commits. The issue is that it spends a lot of time

calculating information that may not be useful/needed by the user.

The `stat` param was previously added in #21337 to address this, by

allowing the user to disable the calculating stats for each commit. But

this has two issues:

1. The name `stat` is rather misleading, because disabling `stat`

disables the Stat **and** Files. This should be separated out into two

different params, because getting a list of affected files is much less

expensive than calculating the stats

2. There's still other costly information provided that the user may not

need, such as `Verification`

This PR adds two parameters to the endpoint, `files` and `verification`,
to allow the user to explicitly disable this information when listing
commits. Both default to true.
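Default-true switches of this kind could be parsed as in the sketch below. The helper names are hypothetical and the handling of unparseable values (falling back to the default) is an assumption, not necessarily what the endpoint does.

```go
package main

import (
	"fmt"
	"net/url"
	"strconv"
)

// boolParam reads a boolean query parameter, falling back to def when the
// parameter is absent or cannot be parsed.
func boolParam(q url.Values, name string, def bool) bool {
	v := q.Get(name)
	if v == "" {
		return def
	}
	b, err := strconv.ParseBool(v)
	if err != nil {
		return def
	}
	return b
}

// commitListFlags parses the three switches from a raw query string.
func commitListFlags(rawQuery string) (stat, files, verification bool, err error) {
	q, err := url.ParseQuery(rawQuery)
	if err != nil {
		return false, false, false, err
	}
	return boolParam(q, "stat", true),
		boolParam(q, "files", true),
		boolParam(q, "verification", true),
		nil
}

func main() {
	stat, files, verification, err := commitListFlags("files=false&verification=false")
	fmt.Println(stat, files, verification, err)
}
```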

1. Remove unused fields/methods in web context.

2. Make callers call target function directly instead of the light

wrapper like "IsUserRepoReaderSpecific"

3. The "issue template" code shouldn't be put in the "modules/context"

package, so move them to the service package.

---------

Co-authored-by: Giteabot <teabot@gitea.io>

The "modules/context.go" is too large to maintain.

This PR splits it to separate files, eg: context_request.go,

context_response.go, context_serve.go

This PR will help:

1. The future refactoring for Gitea's web context (eg: simplify the context)

2. Introduce proper "range request" support

3. Introduce context function

This PR only moves code, doesn't change any logic.

Due to #24409 , we can now specify '--not' when getting all commits from

a repo to exclude commits from a different branch.

When I wrote that PR, I forgot to also update the code that counts the

number of commits in the repo. So now, if the --not option is used, it

may return too many commits, which can indicate that another page of

data is available when it is not.

This PR passes --not to the commands that count the number of commits in

a repo
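The idea can be sketched by building the argument list for the counting command. The helper names are hypothetical, the use of `git rev-list --count` is an assumption about which command does the counting, and the refs are assumed to be validated before they reach this point.

```go
package main

import (
	"fmt"
	"strings"
)

// countArgs builds the arguments for `git rev-list --count`, appending
// `--not <ref>` when an exclusion is requested so the count matches the
// listing.
func countArgs(ref, not string) []string {
	args := []string{"rev-list", "--count", ref}
	if not != "" {
		args = append(args, "--not", not)
	}
	return args
}

// countCommand renders the command line for display purposes only.
func countCommand(ref, not string) string {
	return "git " + strings.Join(countArgs(ref, not), " ")
}

func main() {
	fmt.Println(countCommand("Branch1", "master"))
	fmt.Println(countCommand("Branch1", ""))
}
```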

I don't remember why it was previously decided that `Code` and `Release`
cannot be disabled globally. Since every unit, including `Code`, can now be
disabled, maybe we should have a new rule that a repo must have at
least one unit, so that any unit can be disabled.

Fixes #20960

Fixes #7525

---------

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: yp05327 <576951401@qq.com>

On the @Forgejo instance of Codeberg, we discovered that forking a repo

which is already forked now returns a 500 Internal Server Error, which

is unexpected. This is an attempt at fixing this.

The error message in the log:

~~~

2023/05/02 08:36:30 .../api/v1/repo/fork.go:147:CreateFork() [E]

[6450cb8e-113] ForkRepository: repository is already forked by user

[uname: ...., repo path: .../..., fork path: .../...]

~~~

The service that is used for forking returns a custom error message

which is not checked against.

About the order of options:

The case that the fork already exists should be more common, followed by

the case that a repo with the same name already exists for other

reasons. The case that the global repo limit is hit is probably not the

likeliest.
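The ordering argument above can be sketched as an error-to-status mapping. The sentinel errors and the chosen status codes are assumptions for illustration; the description does not state which errors the service returns or which codes the endpoint responds with.

```go
package main

import (
	"errors"
	"fmt"
	"net/http"
)

// Hypothetical sentinel errors standing in for the service's custom errors.
var (
	errAlreadyForked    = errors.New("repository is already forked by user")
	errRepoAlreadyExist = errors.New("repository already exists")
	errReachLimit       = errors.New("user has reached the repository limit")
)

// statusForForkError maps a fork error to an HTTP status, checking the
// likeliest cases first. The status codes are assumptions.
func statusForForkError(err error) int {
	switch {
	case err == nil:
		return http.StatusAccepted
	case errors.Is(err, errAlreadyForked):
		return http.StatusConflict
	case errors.Is(err, errRepoAlreadyExist):
		return http.StatusConflict
	case errors.Is(err, errReachLimit):
		return http.StatusForbidden
	default:
		return http.StatusInternalServerError
	}
}

// wrap mimics a caller adding context to the error before returning it.
func wrap(err error) error {
	return fmt.Errorf("ForkRepository: %w", err)
}

func main() {
	fmt.Println(statusForForkError(wrap(errAlreadyForked)))
	fmt.Println(statusForForkError(errors.New("disk failure")))
}
```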

Co-authored-by: Otto Richter <otto@codeberg.org>

Co-authored-by: Giteabot <teabot@gitea.io>

For my specific use case, I'd like to get all commits that are on one

branch but NOT on the other branch.

For instance, I'd like to get all the commits on `Branch1` that are not

also on `master` (I.e. all commits that were made after `Branch1` was

created).

This PR adds a `not` query param that gets passed down to the `git log`

command to allow the user to exclude items from `GetAllCommits`.

See [git

documentation](https://git-scm.com/docs/git-log#Documentation/git-log.txt---not)

---------

Co-authored-by: Giteabot <teabot@gitea.io>

Close #24195

Some of the changes are taken from another fix of mine

f07b0de997

in #20147 (although that PR was discarded ....)

The bug is:

1. The old code doesn't handle `removedfile` event correctly

2. The old code doesn't provide attachments for type=CommentTypeReview

This PR doesn't intend to refactor the "upload" code to a perfect state

(to avoid making the review difficult), so some legacy styles are kept.

---------

Co-authored-by: silverwind <me@silverwind.io>

Co-authored-by: Giteabot <teabot@gitea.io>

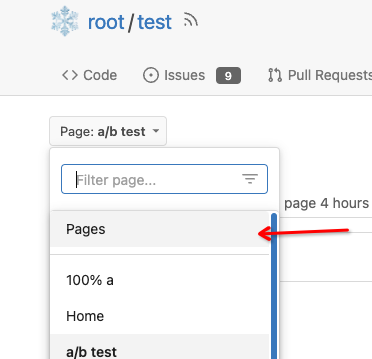

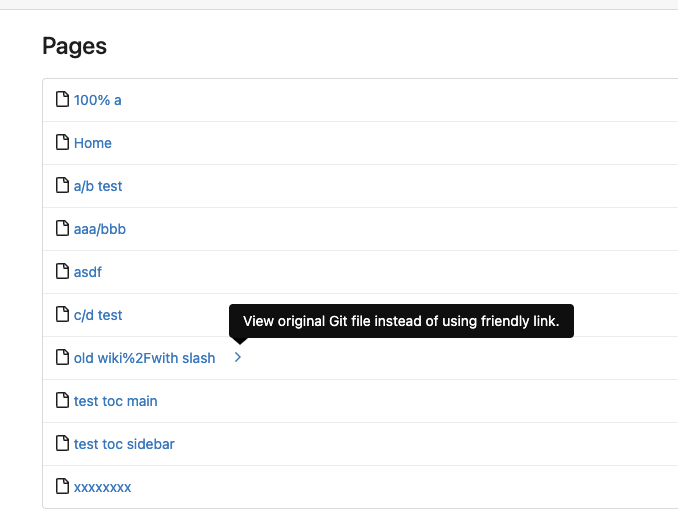

Close #7570

1. Clearly define the wiki path behaviors, see

`services/wiki/wiki_path.go` and tests

2. Keep compatibility with old contents

3. Allow using dashes in titles, eg: "2000-01-02 Meeting record"

4. Add a "Pages" link in the dropdown, otherwise users can't go to the

Pages page easily.

5. Add a "View original git file" link in the Pages list, even if some

file names are broken, users still have a chance to edit or remove it,

without cloning the wiki repo to local.

6. Fix 500 error when the name contains leading spaces.

This PR also introduces the ability to support sub-directories, but it
can't be enabled at the moment because there is a lot of legacy wiki data
that uses "%2F" in file names.

Co-authored-by: Giteabot <teabot@gitea.io>

The _graceful_ should fail less when the `.editorconfig` file isn't

properly written, e.g. boolean values from YAML or unparseable numbers

(when a number is expected). As is... information is lost as the

_warning_ (a go-multierror.Error) is ignored. If anybody knows how to

send them to the UI as warning; any help is appreciated.

Closes #20694

Signed-off-by: Yoan Blanc <yoan@dosimple.ch>

Closes #20955

This PR adds the possibility to disable blank Issues, when the Repo has

templates. This can be done by creating the file

`.gitea/issue_config.yaml` with the content `blank_issues_enabled` in

the Repo.

Adds API endpoints to manage issue/PR dependencies

* `GET /repos/{owner}/{repo}/issues/{index}/blocks` List issues that are

blocked by this issue

* `POST /repos/{owner}/{repo}/issues/{index}/blocks` Block the issue

given in the body by the issue in path

* `DELETE /repos/{owner}/{repo}/issues/{index}/blocks` Unblock the issue

given in the body by the issue in path

* `GET /repos/{owner}/{repo}/issues/{index}/dependencies` List an

issue's dependencies

* `POST /repos/{owner}/{repo}/issues/{index}/dependencies` Create a new
issue dependency

* `DELETE /repos/{owner}/{repo}/issues/{index}/dependencies` Remove an

issue dependency

Closes https://github.com/go-gitea/gitea/issues/15393

Closes #22115

Co-authored-by: Andrew Thornton <art27@cantab.net>

When attempting to migrate a repository via the API endpoint, comments
are always included. This can create a problem if your source repository
has issues or pull requests but you do not want to import them into
Gitea; the failure is displayed as something like:

> Error 500: We were unable to perform the request due to server-side

problems. 'comment references non existent IssueIndex 4

There are only two ways to resolve this:

1. Migrate using the web interface

2. Migrate using the API and include at least issues or pull requests.

This PR matches the behavior of the API migration router to the web

migration router.

Co-authored-by: Lauris BH <lauris@nix.lv>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Close #22934

In `/user/repos` API (and other APIs related to creating repos), user

can specify a readme template for auto init. At present, if the

specified template does not exist, a `500` will be returned. This PR
improves the logic and returns a `400` instead of a `500`.

When creating attachments (issue, release, repo) the file size (being

part of the multipart file header) is passed through the chain of

creating an attachment to ensure the MinIO client can stream the file

directly instead of having to read it to memory completely at first.

Fixes #23393

Co-authored-by: KN4CK3R <admin@oldschoolhack.me>

Co-authored-by: techknowlogick <techknowlogick@gitea.io>

Close #23241

Before: press Ctrl+Enter in the Code Review Form, a single comment will

be added.

After: press Ctrl+Enter in the Code Review Form, start the review with

pending comments.

The old name `is_review` is not clear, so the new code uses

`pending_review` as the new name.

Co-authored-by: delvh <leon@kske.dev>

Co-authored-by: techknowlogick <techknowlogick@gitea.io>

Extract from #11669 and enhancement to #22585 to support exclusive

scoped labels in label templates

* Move label template functionality to label module

* Fix handling of color codes

* Add Advanced label template

This includes pull requests that you approved, requested changes or

commented on. Currently such pull requests are not visible in any of the

filters on /pulls, while they may need further action like merging, or

prodding the author or reviewers.

Especially when working with a large team on a repository it's helpful

to get a full overview of pull requests that may need your attention,

without having to sift through the complete list.

Close: #22910

---

I'm confused about why the API (`GET
/repos/{owner}/{repo}/pulls/{index}/files`) requires the caller to pass the
parameters `limit` and `page`.

In my case, the caller only needs to pass a `skip-to` for paging. This is

consistent with the api `GET /{owner}/{repo}/pulls/{index}/files`

So, I deleted the code related to `listOptions`

---------

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Some bugs are caused by a lack of unit tests in fundamental packages. This
PR refactors the `setting` package so that creating a unit test is easier
than before.

- All `LoadFromXXX` functions have been split into two functions:
`InitProviderFromXXX` and `LoadCommonSettings`. The first function
only includes the code to create a new ini file. The second function
loads the common settings.

- It also renames all functions in setting from `newXXXService` to

`loadXXXSetting` or `loadXXXFrom` to make the function name less

confusing.

- Move `XORMLog` to `SQLLog` because it's a better name for that.

Maybe we should finally move these `loadXXXSetting` into the `XXXInit`

function? Any idea?

---------

Co-authored-by: 6543 <6543@obermui.de>

Co-authored-by: delvh <dev.lh@web.de>

Add a new "exclusive" option per label. This makes it so that when the

label is named `scope/name`, no other label with the same `scope/`

prefix can be set on an issue.

The scope is determined by the last occurrence of `/`, so for example

`scope/alpha/name` and `scope/beta/name` are considered to be in

different scopes and can coexist.
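The last-slash rule can be sketched as below. The function names are hypothetical, and treating a leading or trailing `/` as "no scope" is an assumption about edge cases the description does not cover.

```go
package main

import (
	"fmt"
	"strings"
)

// exclusiveScope returns everything before the last "/" in a label name,
// or "" when the name has no usable scope.
func exclusiveScope(name string) string {
	i := strings.LastIndex(name, "/")
	if i <= 0 || i == len(name)-1 {
		return ""
	}
	return name[:i]
}

// sameScope reports whether two labels would exclude each other.
func sameScope(a, b string) bool {
	sa, sb := exclusiveScope(a), exclusiveScope(b)
	return sa != "" && sa == sb
}

func main() {
	fmt.Println(exclusiveScope("scope/alpha/name"))
	fmt.Println(sameScope("scope/alpha/name", "scope/beta/name"))
	fmt.Println(sameScope("scope/alpha", "scope/beta"))
}
```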

Exclusive scopes are not enforced by any database rules, however they

are enforced when editing labels at the models level, automatically

removing any existing labels in the same scope when either attaching a

new label or replacing all labels.

In menus use a circle instead of checkbox to indicate they function as

radio buttons per scope. Issue filtering by label ensures that only a

single scoped label is selected at a time. Clicking with alt key can be

used to remove a scoped label, both when editing individual issues and

batch editing.

Label rendering refactor for consistency and code simplification:

* Labels now consistently have the same shape, emojis and tooltips

everywhere. This includes the label list and label assignment menus.

* In label list, show description below label same as label menus.

* Don't use exactly black/white text colors to look a bit nicer.

* Simplify text color computation. There is no point computing luminance

in linear color space, as this is a perceptual problem and sRGB is

closer to perceptually linear.

* Increase height of label assignment menus to show more labels. Showing

only 3-4 labels at a time leads to a lot of scrolling.

* Render all labels with a new RenderLabel template helper function.

Label creation and editing in multiline modal menu:

* Change label creation to open a modal menu like label editing.

* Change menu layout to place name, description and colors on separate

lines.

* Don't color cancel button red in label editing modal menu.

* Align text to the left in modal menu for better readability and

consistent with settings layout elsewhere.

Custom exclusive scoped label rendering:

* Display scoped label prefix and suffix with slightly darker and

lighter background color respectively, and a slanted edge between them

similar to the `/` symbol.

* In menus exclusive labels are grouped with a divider line.

---------

Co-authored-by: Yarden Shoham <hrsi88@gmail.com>

Co-authored-by: Lauris BH <lauris@nix.lv>

To avoid loading the same data more than once in an HTTP request, we can
use a context cache. For example, some pages may load a user with the same
ID from the database in different areas of the same page, but the code
lives in two different, deeply nested pieces of logic. How should we share
the user? As a result of this PR, if both entry functions accept
`context.Context` as the first parameter, we just need to refactor
`GetUserByID` to reuse the user from the context cache. Then it will not
be loaded twice in one HTTP request.

But of course, sometimes we would like to reload an object from the

database, that's why `RemoveContextData` is also exposed.

The core context cache is here. It defines a new context

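```go
// cacheContext holds the per-request cache: data is grouped by type, then by key.
type cacheContext struct {
	ctx  context.Context
	data map[any]map[any]any
	lock sync.RWMutex
}

var cacheContextKey = struct{}{}

// WithCacheContext returns a child context that carries an empty cache.
func WithCacheContext(ctx context.Context) context.Context {
	return context.WithValue(ctx, cacheContextKey, &cacheContext{
		ctx:  ctx,
		data: make(map[any]map[any]any),
	})
}
```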

Then you can use the 4 functions below to read/write/delete the data within
the same context.

```go
func GetContextData(ctx context.Context, tp, key any) any
func SetContextData(ctx context.Context, tp, key, value any)
func RemoveContextData(ctx context.Context, tp, key any)
func GetWithContextCache[T any](ctx context.Context, cacheGroupKey string, cacheTargetID any, f func() (T, error)) (T, error)
```

Then let's take a look at how `system.GetString` implements it.

```go
func GetSetting(ctx context.Context, key string) (string, error) {
	return cache.GetWithContextCache(ctx, contextCacheKey, key, func() (string, error) {
		return cache.GetString(genSettingCacheKey(key), func() (string, error) {
			res, err := GetSettingNoCache(ctx, key)
			if err != nil {
				return "", err
			}
			return res.SettingValue, nil
		})
	})
}
```

First, it checks whether the context data includes the setting object with
the key. If not, it queries the global cache, which may be memory or
a Redis cache. If that misses too, it gets the object from the database. In
the end, if the object came from the global cache or the database, it is
set into the context cache.

An object stored in the context cache will only be destroyed after the
context disappears.
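The lookup order described above can be tried out with a self-contained sketch of a per-request cache. It is a simplified stand-in under stated assumptions, not the PR's code: `requestCache`, `withRequestCache`, `getCached` and `loadsForTwoLookups` are hypothetical names, and the global cache layer is left out.

```go
package main

import (
	"context"
	"fmt"
	"sync"
)

// ctxCacheKey is a private key type so other packages cannot collide with it.
type ctxCacheKey struct{}

// requestCache stores values per group and ID for the lifetime of one context.
type requestCache struct {
	mu   sync.RWMutex
	data map[string]map[any]any
}

// withRequestCache attaches an empty cache to the context.
func withRequestCache(ctx context.Context) context.Context {
	return context.WithValue(ctx, ctxCacheKey{}, &requestCache{data: map[string]map[any]any{}})
}

// getCached returns the cached value for (group, id), or calls load and
// stores the result. Without an attached cache it always calls load.
func getCached[T any](ctx context.Context, group string, id any, load func() (T, error)) (T, error) {
	c, ok := ctx.Value(ctxCacheKey{}).(*requestCache)
	if !ok {
		return load()
	}
	c.mu.RLock()
	v, hit := c.data[group][id]
	c.mu.RUnlock()
	if hit {
		if t, ok := v.(T); ok {
			return t, nil
		}
	}
	t, err := load()
	if err != nil {
		return t, err
	}
	c.mu.Lock()
	if c.data[group] == nil {
		c.data[group] = map[any]any{}
	}
	c.data[group][id] = t
	c.mu.Unlock()
	return t, nil
}

// loadsForTwoLookups looks the same user up twice and counts real loads.
func loadsForTwoLookups() int {
	ctx := withRequestCache(context.Background())
	loads := 0
	load := func() (string, error) { loads++; return "alice", nil }
	for i := 0; i < 2; i++ {
		if _, err := getCached(ctx, "user", int64(1), load); err != nil {
			panic(err)
		}
	}
	return loads
}

func main() {
	fmt.Println("loads:", loadsForTwoLookups())
}
```

In this sketch two concurrent misses for the same key may both call `load`; that is acceptable for a request-scoped cache but worth knowing.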

Add setting to allow edits by maintainers by default, to avoid having to

often ask contributors to enable this.

This also reorganizes the pull request settings UI to improve clarity.

It was unclear which checkbox options were there to control available

merge styles and which merge styles they correspond to.

Now there is a "Merge Styles" label followed by the merge style options

with the same name as in other menus. The remaining checkboxes were

moved to the bottom, ordered roughly by typical order of operations.

---------

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

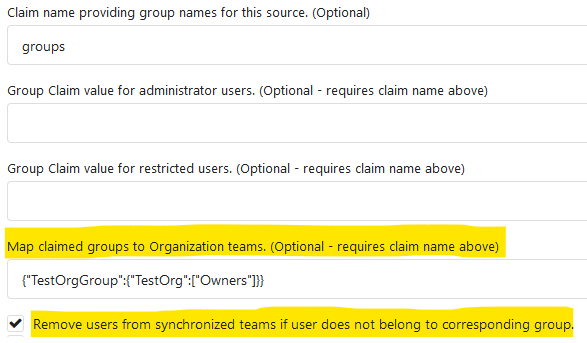

Fixes #19555

Test-Instructions:

https://github.com/go-gitea/gitea/pull/21441#issuecomment-1419438000

This PR implements the mapping of user groups provided by OIDC providers

to orgs teams in Gitea. The main part is a refactoring of the existing

LDAP code to make it usable from different providers.

Refactorings:

- Moved the router auth code from module to service because of import

cycles

- Changed some model methods to take a `Context` parameter

- Moved the mapping code from LDAP to a common location

I've tested it with Keycloak but other providers should work too. The

JSON mapping format is the same as for LDAP.

---------

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

The `commit_id` property name is the same as equivalent functionality in

GitHub. If the action was not caused by a commit, an empty string is

used.

This can for example be used to automatically add a Resolved label to an

issue fixed by a commit, or clear it when the issue is reopened.

This PR introduces glob matching for protected branch names. The separator
is `/`; `*` matches non-separator characters and `**` matches across
separators.

It also supports entering an existing or non-existing branch name as the
matching condition, and a branch name condition has higher priority than a
glob rule.
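The difference between `*` and `**` can be illustrated with a standard-library sketch that translates such a pattern into a regular expression. This is not the matcher used by the PR, which is not named in this description; `globToRegexp` and `matchBranch` are hypothetical helpers that only cover these two wildcards.

```go
package main

import (
	"fmt"
	"regexp"
	"strings"
)

// globToRegexp translates a branch glob into an anchored regular expression:
// `**` may cross "/" separators, `*` stays within one path segment, and every
// other character is matched literally.
func globToRegexp(pattern string) (*regexp.Regexp, error) {
	runes := []rune(pattern)
	var b strings.Builder
	b.WriteString("^")
	for i := 0; i < len(runes); i++ {
		switch {
		case runes[i] == '*' && i+1 < len(runes) && runes[i+1] == '*':
			b.WriteString(".*")
			i++ // skip the second '*'
		case runes[i] == '*':
			b.WriteString("[^/]*")
		default:
			b.WriteString(regexp.QuoteMeta(string(runes[i])))
		}
	}
	b.WriteString("$")
	return regexp.Compile(b.String())
}

// matchBranch reports whether the branch name matches the glob pattern.
func matchBranch(pattern, branch string) bool {
	re, err := globToRegexp(pattern)
	if err != nil {
		return false
	}
	return re.MatchString(branch)
}

func main() {
	fmt.Println(matchBranch("release/*", "release/v1"))
	fmt.Println(matchBranch("release/*", "release/v1/hotfix"))
	fmt.Println(matchBranch("release/**", "release/v1/hotfix"))
}
```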

Should fix #2529 and #15705

screenshots

<img width="1160" alt="image"

src="https://user-images.githubusercontent.com/81045/205651179-ebb5492a-4ade-4bb4-a13c-965e8c927063.png">

Co-authored-by: zeripath <art27@cantab.net>

After #22362, we can feel free to use transactions without

`db.DefaultContext`.

And there are still lots of models using `db.DefaultContext`, I think we

should refactor them carefully and one by one.

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Previously, there was an `import services/webhooks` inside

`modules/notification/webhook`.

This import was removed (after fighting against many import cycles).

Additionally, `modules/notification/webhook` was moved to

`modules/webhook`,

and a few structs/constants were extracted from `models/webhooks` to

`modules/webhook`.

Co-authored-by: 6543 <6543@obermui.de>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

The push mirror `sync_on_commit` option was added to the web interface in
v1.18.0. However, it was not added to the API. This PR updates the API
endpoint.

Fixes #22267

Also, I think this should be backported to 1.18

If user has reached the maximum limit of repositories:

- Before

- disallow create

- allow fork without limit

- This patch:

- disallow create

- disallow fork

- Add option `ALLOW_FORK_WITHOUT_MAXIMUM_LIMIT` (Default **true**):
when enabled, users can fork repositories regardless of the maximum number limit
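The resulting rules can be summarized in a small sketch. The function names are hypothetical and `limitReached` stands for whatever check decides that the user has hit the maximum number of repositories.

```go
package main

import "fmt"

// canCreateRepo: creating a repository is never allowed once the limit is hit.
func canCreateRepo(limitReached bool) bool {
	return !limitReached
}

// canForkRepo: forking past the limit is only allowed when
// ALLOW_FORK_WITHOUT_MAXIMUM_LIMIT is enabled.
func canForkRepo(limitReached, allowForkWithoutMaximumLimit bool) bool {
	return !limitReached || allowForkWithoutMaximumLimit
}

func main() {
	fmt.Println(canCreateRepo(true))      // create at the limit
	fmt.Println(canForkRepo(true, true))  // fork at the limit, option enabled
	fmt.Println(canForkRepo(true, false)) // fork at the limit, option disabled
}
```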

fixed https://github.com/go-gitea/gitea/issues/21847

Signed-off-by: Xinyu Zhou <i@sourcehut.net>

Close #14601

Fix #3690

Revival of #14601.

Updated to the current code, cleaned up, and added more read/write checks.

Signed-off-by: Andrew Thornton <art27@cantab.net>

Signed-off-by: Andre Bruch <ab@andrebruch.com>

Co-authored-by: zeripath <art27@cantab.net>

Co-authored-by: 6543 <6543@obermui.de>

Co-authored-by: Norwin <git@nroo.de>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Change all license headers to comply with REUSE specification.

Fix #16132

Co-authored-by: flynnnnnnnnnn <flynnnnnnnnnn@github>

Co-authored-by: John Olheiser <john.olheiser@gmail.com>

This PR adds a context parameter to a bunch of methods. Some helper

`xxxCtx()` methods got replaced with the normal name now.

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

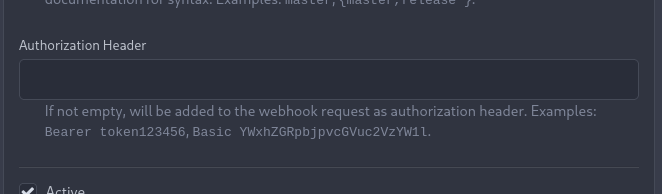

_This is a different approach to #20267, I took the liberty of adapting

some parts, see below_

## Context

In some cases, a webhook endpoint requires some kind of authentication.

The usual way is by sending a static `Authorization` header, with a

given token. For instance:

- Matrix expects a `Bearer <token>` (already implemented, by storing the

header cleartext in the metadata - which is buggy on retry #19872)

- TeamCity #18667

- Gitea instances #20267

- SourceHut https://man.sr.ht/graphql.md#authentication-strategies (this

is my actual personal need :)

## Proposed solution

Add a dedicated encrypted column to the webhook table (instead of storing

it as meta as proposed in #20267), so that it gets available for all

present and future hook types (especially the custom ones #19307).

This would also solve the buggy matrix retry #19872.

As a first step, I would recommend focusing on the backend logic and

improve the frontend at a later stage. For now the UI is a simple

`Authorization` field (which could be later customized with `Bearer` and

`Basic` switches):

The header name is hard-coded, since I couldn't find any use case

justifying otherwise.
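
For illustration, a rough sketch of how a delivery could attach the stored header, assuming a hypothetical `newHookRequest` helper that receives the already decrypted value (the real delivery code may look different):

```go
package main

import (
	"fmt"
	"net/http"
	"strings"
)

// newHookRequest builds a webhook delivery request.
// authHeader is the decrypted value of the hypothetical Authorization column;
// an empty string means no header is configured.
func newHookRequest(url, payload, authHeader string) (*http.Request, error) {
	req, err := http.NewRequest(http.MethodPost, url, strings.NewReader(payload))
	if err != nil {
		return nil, err
	}
	req.Header.Set("Content-Type", "application/json")
	if authHeader != "" {
		// The header name is fixed; only the value is configurable.
		req.Header.Set("Authorization", authHeader)
	}
	return req, nil
}

func main() {
	req, err := newHookRequest("https://example.com/hook", `{"ref":"refs/heads/main"}`, "Bearer s3cr3t")
	if err != nil {
		panic(err)
	}
	fmt.Println(req.Header.Get("Authorization")) // Bearer s3cr3t
}
```

Because the value is applied when the request is built, a retry would pick it up again from the webhook row rather than from the task metadata.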

## Questions

- What do you think of this approach? @justusbunsi @Gusted @silverwind

- ~~How are the migrations generated? Do I have to manually create a new

file, or is there a command for that?~~

- ~~I started adding it to the API: should I complete it or should I

drop it? (I don't know how much the API is actually used)~~

## Done as well:

- add a migration for the existing matrix webhooks and remove the

`Authorization` logic there

_Closes #19872_

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: Gusted <williamzijl7@hotmail.com>

Co-authored-by: delvh <dev.lh@web.de>

I found myself wondering whether a PR I scheduled for automerge was

actually merged. It was, but I didn't receive a mail notification for it

- that makes sense considering I am the doer and usually don't want to

receive such notifications. But ideally I want to receive a notification

when a PR was merged because I scheduled it for automerge.

This PR implements exactly that.

The implementation works, but I wonder if there's a way to avoid passing

the "This PR was automerged" state down so much. I tried solving this

via the database (checking if there's an automerge scheduled for this PR

when sending the notification) but that did not work reliably, probably

because sending the notification happens async and the entry might have

already been deleted. My implementation might be the most

straightforward but maybe not the most elegant.

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: Lauris BH <lauris@nix.lv>

Co-authored-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Fixes a 500 error/panic if using the changed PR files API with pages

that should return empty lists because there are no items anymore.

`start-end` is then < 0 which ends in panic.
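
A small sketch of the kind of clamping that avoids the panic, assuming a hypothetical `pageBounds` helper with 1-based pages (not the code from this PR):

```go
package main

import "fmt"

// pageBounds returns slice bounds for a 1-based page, clamped to total,
// so that slicing never goes out of range.
func pageBounds(total, page, pageSize int) (start, end int) {
	if page < 1 {
		page = 1
	}
	if pageSize < 0 {
		pageSize = 0
	}
	start = (page - 1) * pageSize
	if start > total {
		start = total
	}
	end = start + pageSize
	if end > total {
		end = total
	}
	return start, end
}

func main() {
	files := []string{"a.go", "b.go", "c.go"}
	// Page 3 with 2 items per page is past the end: the result is empty.
	start, end := pageBounds(len(files), 3, 2)
	fmt.Println(files[start:end]) // []
}
```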

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: 6543 <6543@obermui.de>

Co-authored-by: delvh <dev.lh@web.de>

At the moment a repository reference is needed for webhooks. With the

upcoming package PR we need to send webhooks without a repository

reference. For example a package is uploaded to an organization. In

theory this enables the usage of webhooks for future user actions.

This PR removes the repository id from `HookTask` and changes how the

hooks are processed (see `services/webhook/deliver.go`). In a follow up

PR I want to remove the usage of the `UniqueQueue` and replace it with a

normal queue because there is no reason to be unique.

Co-authored-by: 6543 <6543@obermui.de>

Fixes #21379

The commits are capped by `setting.UI.FeedMaxCommitNum` so

`len(commits)` is not the correct number. So this PR adds a new

`TotalCommits` field.
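
Roughly, the idea is the following (a sketch with a hypothetical `pushPayload` type; `maxCommits` stands in for `setting.UI.FeedMaxCommitNum`):

```go
package main

import "fmt"

// pushPayload is a hypothetical, reduced payload type.
type pushPayload struct {
	Commits      []string // capped list that is actually sent
	TotalCommits int      // number of commits before capping
}

// newPushPayload records the real commit count before applying the cap.
func newPushPayload(commits []string, maxCommits int) pushPayload {
	p := pushPayload{TotalCommits: len(commits)}
	if maxCommits >= 0 && len(commits) > maxCommits {
		commits = commits[:maxCommits]
	}
	p.Commits = commits
	return p
}

func main() {
	p := newPushPayload([]string{"c1", "c2", "c3"}, 2)
	fmt.Println(len(p.Commits), p.TotalCommits) // 2 3
}
```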

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Calls to ToCommit are very slow due to fetching diffs and analyzing files.

This patch lets us supply `stat` as false to speed fetching a commit

when we don't need the diff.

/v1/repo/commits has a default `stat` set as true now. Set to false to

experience fetching thousands of commits per second instead of 2-5 per

second.

This adds an API endpoint `/files` to PRs that returns the list of changed files.

built upon #18228, reviews there are included

closes https://github.com/go-gitea/gitea/issues/654

Co-authored-by: Anton Bracke <anton@ju60.de>

Co-authored-by: 6543 <6543@obermui.de>

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

This PR would presumably

Fix #20522

Fix #18773

Fix #19069

Fix #21077

Fix #13622

-----

1. Check whether unit type is currently enabled

2. Check if it _will_ be enabled via opt

3. Allow modification as necessary

Signed-off-by: jolheiser <john.olheiser@gmail.com>

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Co-authored-by: 6543 <6543@obermui.de>

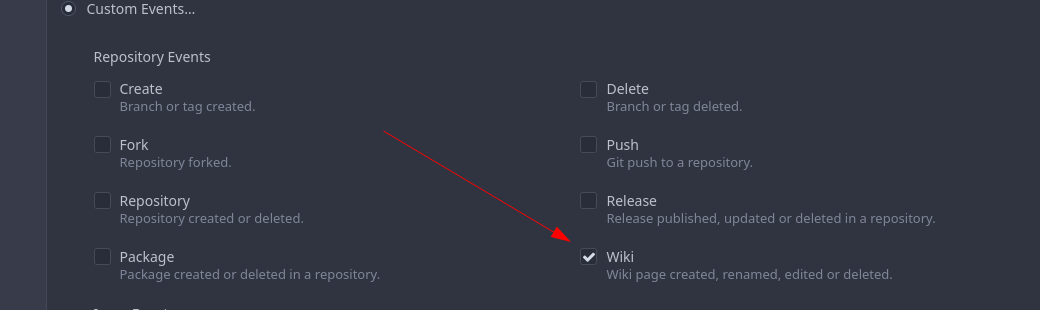

Add support for triggering webhook notifications on wiki changes.

This PR contains frontend and backend for webhook notifications on wiki actions (create a new page, rename a page, edit a page and delete a page). The frontend got a new checkbox under the Custom Event -> Repository Events section. There is only one checkbox for create/edit/rename/delete actions, because it makes no sense to separate it and others like releases or packages follow the same schema.

The actions themselves are separated, so that different notifications will be executed (with the "action" field). All the webhook receivers implement the new interface method (Wiki) and the corresponding tests.

While implementing this, I encountered a small bug when editing a wiki page. Creating and editing a wiki page are technically the same action and are both handled by the `updateWikiPage` function. But the function needs to know whether it is a new wiki page or just a change. This distinction is made by the `action` parameter, which was not sent by the frontend (on form submit). This PR fixes this by adding the `action` parameter with the values `_new` or `_edit`, which are used by the `updateWikiPage` function.

I've done integration tests with matrix and gitea (http).

Fix #16457

Signed-off-by: Aaron Fischer <mail@aaron-fischer.net>

When migrating add several more important sanity checks:

* SHAs must be SHAs

* Refs must be valid Refs

* URLs must be reasonable
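
As an illustration of two of these checks, here is a sketch with hypothetical helpers `isFullSHA1` and `isReasonableURL`. It assumes full 40-character SHA-1 hashes and http(s) URLs only; ref validation is left out, and the actual migration code may apply different rules:

```go
package main

import (
	"fmt"
	"net/url"
	"regexp"
)

// sha1Pattern matches a full 40-character hexadecimal SHA-1 (assumption:
// abbreviated hashes are not accepted).
var sha1Pattern = regexp.MustCompile(`^[0-9a-fA-F]{40}$`)

// isFullSHA1 reports whether s looks like a full SHA-1 hash.
func isFullSHA1(s string) bool {
	return sha1Pattern.MatchString(s)
}

// isReasonableURL accepts only absolute http or https URLs with a host.
func isReasonableURL(raw string) bool {
	u, err := url.Parse(raw)
	if err != nil {
		return false
	}
	return (u.Scheme == "http" || u.Scheme == "https") && u.Host != ""
}

func main() {
	fmt.Println(isFullSHA1("6cde7c9159a5ea75a10356feb7b8c7ad4c434a9a")) // true
	fmt.Println(isFullSHA1("main"))                                     // false
	fmt.Println(isReasonableURL("https://example.com/owner/repo"))      // true
	fmt.Println(isReasonableURL("javascript:alert(1)"))                 // false
}
```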

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: techknowlogick <matti@mdranta.net>

The webhook payload should use the right ref when it's specified in the testing request.

The compare URL should not be empty, a URL like `compare/A...A` seems useless in most cases but is helpful when testing.

* fix hard-coded timeout and error panic in API archive download endpoint

This commit updates the `GET /api/v1/repos/{owner}/{repo}/archive/{archive}`

endpoint which prior to this PR had a couple of issues.

1. The endpoint had a hard-coded 20s timeout for the archiver to complete after

which a 500 (Internal Server Error) was returned to client. For a scripted

API client there was no clear way of telling that the operation timed out and

that it should retry.

2. Whenever the timeout _did occur_, the code used to panic. This was caused by

the API endpoint "delegating" to the same call path as the web, which uses a

slightly different way of reporting errors (HTML rather than JSON for

example).

More specifically, `api/v1/repo/file.go#GetArchive` just called through to

`web/repo/repo.go#Download`, which expects the `Context` to have a `Render`

field set, but which is `nil` for API calls. Hence, a `nil` pointer error.

The code addresses (1) by dropping the hard-coded timeout. Instead, any

timeout/cancelation on the incoming `Context` is used.

The code addresses (2) by updating the API endpoint to use a separate call path

for the API-triggered archive download. This avoids producing HTML-errors on

errors (it now produces JSON errors).

Signed-off-by: Peter Gardfjäll <peter.gardfjall.work@gmail.com>

Add code to test if GetAttachmentByID returns an ErrAttachmentNotExist error

and return NotFound instead of InternalServerError
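
A sketch of the status mapping, using a local stand-in error `errAttachmentNotExist` and a hypothetical lookup function instead of the real Gitea types:

```go
package main

import (
	"errors"
	"fmt"
	"net/http"
)

// errAttachmentNotExist is a stand-in for the real ErrAttachmentNotExist.
var errAttachmentNotExist = errors.New("attachment does not exist")

// getAttachmentByID is a hypothetical lookup that only knows ID 1.
func getAttachmentByID(id int64) (string, error) {
	if id != 1 {
		return "", fmt.Errorf("attachment %d: %w", id, errAttachmentNotExist)
	}
	return "logo.png", nil
}

// statusFor maps a lookup error to an HTTP status code.
func statusFor(err error) int {
	switch {
	case err == nil:
		return http.StatusOK
	case errors.Is(err, errAttachmentNotExist):
		return http.StatusNotFound
	default:
		return http.StatusInternalServerError
	}
}

func main() {
	_, err := getAttachmentByID(42)
	fmt.Println(statusFor(err)) // 404
}
```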

Fix #20884

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

The use of `--follow` makes getting these commits very slow on large repositories

as it results in searching the whole commit tree for a blob.

Now as nice as the results of `--follow` are, I am uncertain whether it is really

of sufficient importance to keep around.

Fix #20764

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: techknowlogick <techknowlogick@gitea.io>

- Add a new push mirror to specific repository

- Sync now ( send all the changes to the configured push mirrors )

- Get list of all push mirrors of a repository

- Get a push mirror by ID

- Delete push mirror by ID

Signed-off-by: Mohamed Sekour <mohamed.sekour@exfo.com>

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: zeripath <art27@cantab.net>

The LastCommitCache code is a little complex and there is unnecessary

duplication between the gogit and nogogit variants.

This PR adds the LastCommitCache as a field to the git.Repository and

pre-creates it in the ReferencesGit helpers etc. There has been some

simplification and unification of the variant code.

Signed-off-by: Andrew Thornton <art27@cantab.net>

When you create a new release (e.g. via Tea) and specify a tag that already exists on

the repository, Gitea will instead use the `UpdateRelease`

functionality. However it currently doesn't set the Target field. This

PR fixes that.

Support synchronizing with the push mirrors whenever new commits are pushed or synced from pull mirror.

Related Issues: #18220

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: zeripath <art27@cantab.net>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

* Check if project has the same repository id with issue when assign project to issue

* Check if issue's repository id match project's repository id

* Add more permission checking

* Remove invalid argument

* Fix errors

* Add generic check

* Remove duplicated check

* Return error + add check for new issues

* Apply suggestions from code review

Co-authored-by: KN4CK3R <admin@oldschoolhack.me>

Co-authored-by: Gusted <williamzijl7@hotmail.com>

Co-authored-by: KN4CK3R <admin@oldschoolhack.me>

Co-authored-by: 6543 <6543@obermui.de>

* Move access and repo permission to models/perm/access

* fix test

* fix git test

* Move functions sequence

* Some improvements per @KN4CK3R and @delvh

* Move issues related code to models/issues

* Move some issues related sub package

* Merge

* Fix test

* Fix test

* Fix test

* Fix test

* Rename some files

* Move access and repo permission to models/perm/access

* fix test

* Move some git related files into sub package models/git

* Fix build

* fix git test

* move lfs to sub package

* move more git related functions to models/git

* Move functions sequence

* Some improvements per @KN4CK3R and @delvh

* Move some repository related code into sub package

* Move more repository functions out of models

* Fix lint

* Some performance optimization for webhooks and others

* some refactors

* Fix lint

* Fix

* Update modules/repository/delete.go

Co-authored-by: delvh <dev.lh@web.de>

* Fix test

* Merge

* Fix test

* Fix test

* Fix test

* Fix test

Co-authored-by: delvh <dev.lh@web.de>

* Add LFS API

* Update routers/api/v1/repo/file.go

Co-authored-by: Gusted <williamzijl7@hotmail.com>

* Apply suggestions

* Apply suggestions

* Update routers/api/v1/repo/file.go

Co-authored-by: Gusted <williamzijl7@hotmail.com>

* Report errors

* Add test

* Use own repo for test

* Use different repo name

* Improve handling

* Slight restructures

1. Avoid reading the blob data multiple times

2. Ensure that caching is only checked when about to serve the blob/lfs

3. Avoid nesting by returning early

4. Make log message a bit more clear

5. Ensure that the dataRc is closed by defer when passed to ServeData

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: Gusted <williamzijl7@hotmail.com>

Co-authored-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Although the use of LastModified dates for caching of git objects should be

discouraged (as it is not native to git - and there are a LOT of ways this

could be incorrect) - LastModified dates can be a helpful somewhat more human

way of caching for simple cases.

This PR adds this header and handles the If-Modified-Since header to the /raw/

routes.

Fix #18354

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: 6543 <6543@obermui.de>

* Fix indention

Signed-off-by: kolaente <k@knt.li>

* Add option to merge a pr right now without waiting for the checks to succeed

Signed-off-by: kolaente <k@knt.li>

* Fix lint

Signed-off-by: kolaente <k@knt.li>

* Add scheduled pr merge to tables used for testing

Signed-off-by: kolaente <k@knt.li>

* Add status param to make GetPullRequestByHeadBranch reusable

Signed-off-by: kolaente <k@knt.li>

* Move "Merge now" to a separate button to make the ui clearer

Signed-off-by: kolaente <k@knt.li>

* Update models/scheduled_pull_request_merge.go

Co-authored-by: 赵智超 <1012112796@qq.com>

* Update web_src/js/index.js

Co-authored-by: 赵智超 <1012112796@qq.com>

* Update web_src/js/index.js

Co-authored-by: 赵智超 <1012112796@qq.com>

* Re-add migration after merge

* Fix frontend lint

* Fix version compare

* Add vendored dependencies

* Add basic tests

* Make sure the api route is capable of scheduling PRs for merging

* Fix comparing version

* make vendor

* adopt refactor

* apply suggestion: User -> Doer

* init var once

* Fix Test

* Update templates/repo/issue/view_content/comments.tmpl

* adopt

* nits

* next

* code format

* lint

* use same name schema; rm CreateUnScheduledPRToAutoMergeComment

* API: can not create schedule twice

* Add TestGetBranchNamesForSha

* nits

* new go routine for each pull to merge

* Update models/pull.go

Co-authored-by: a1012112796 <1012112796@qq.com>

* Update models/scheduled_pull_request_merge.go

Co-authored-by: a1012112796 <1012112796@qq.com>

* fix & add renaming suggestions

* Update services/automerge/pull_auto_merge.go

Co-authored-by: a1012112796 <1012112796@qq.com>

* fix conflict relicts

* apply latest refactors

* fix: migration after merge

* Update models/error.go

Co-authored-by: delvh <dev.lh@web.de>

* Update options/locale/locale_en-US.ini

Co-authored-by: delvh <dev.lh@web.de>

* Update options/locale/locale_en-US.ini

Co-authored-by: delvh <dev.lh@web.de>

* adapt latest refactors

* fix test

* use more context

* skip potential edgecases

* document func usage

* GetBranchNamesForSha() -> GetRefsBySha()

* start refactoring

* adjust to new changes

* nit

* docu nit

* the great check move

* move checks for branchprotection into own package

* resolve todo now ...

* move & rename

* unexport if possible

* fix

* check if merge is allowed before merge on scheduled pull

* debugg

* wording

* improve SetDefaults & nits

* NotAllowedToMerge -> DisallowedToMerge

* fix test

* merge files

* use package "errors"

* merge files

* add string names

* other implementation for gogit

* adapt refactor

* more context for models/pull.go

* GetUserRepoPermission use context

* more ctx

* use context for loading pull head/base-repo

* more ctx

* more ctx

* models.LoadIssueCtx()

* models.LoadIssueCtx()

* Handle pull_service.Merge in one DB transaction

* add TODOs

* next

* next

* next

* more ctx

* more ctx

* Start refactoring structure of old pull code ...

* move code into new packages

* shorter names ... and finish **restructure**

* Update models/branches.go

Co-authored-by: zeripath <art27@cantab.net>

* finish UpdateProtectBranch

* more and fix

* update datum

* template: use "svg" helper

* rename prQueue 2 prPatchCheckerQueue

* handle automerge in queue

* lock pull on git&db actions ...

* lock pull on git&db actions ...

* add TODO notes

* the regex

* transaction in tests

* GetRepositoryByIDCtx

* shorter table name and lint fix

* close transaction before notify

* Update models/pull.go

* next

* CheckPullMergable check all branch protections!

* Update routers/web/repo/pull.go

* CheckPullMergable check all branch protections!

* Revert "PullService lock via pullID (#19520)" (for now...)

This reverts commit 6cde7c9159a5ea75a10356feb7b8c7ad4c434a9a.

* Update services/pull/check.go

* Use for a repo action one database transaction

* Apply suggestions from code review

* Apply suggestions from code review

Co-authored-by: delvh <dev.lh@web.de>

* Update services/issue/status.go

Co-authored-by: delvh <dev.lh@web.de>

* Update services/issue/status.go

Co-authored-by: delvh <dev.lh@web.de>

* use db.WithTx()

* gofmt

* make pr.GetDefaultMergeMessage() context aware

* make MergePullRequestForm.SetDefaults context aware

* use db.WithTx()

* pull.SetMerged only with context

* fix deadlock in `test-sqlite\#TestAPIBranchProtection`

* dont forget templates

* db.WithTx allow to set the parentCtx

* handle db transaction in service packages but not router

* issue_service.ChangeStatus just had caused another deadlock :/

it has to do something with how notification package is handled

* if we merge a pull in one database transaction, we get a lock, because merge invokes the internal API, which can't handle open db sessions to the same repo

* adjust to current master

* Apply suggestions from code review

Co-authored-by: delvh <dev.lh@web.de>

* dont open db transaction in router

* make generate-swagger

* one _success less

* wording nit

* rm

* adapt

* remove not needed test files

* rm less diff & use attr in JS

* ...

* Update services/repository/files/commit.go

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

* adjust db schema for PullAutoMerge

* skip broken pull refs

* more context in error messages

* remove webUI part for another pull

* remove more WebUI only parts

* API: add CancleAutoMergePR

* Apply suggestions from code review

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

* fix lint

* Apply suggestions from code review

* cancle -> cancel

Co-authored-by: delvh <dev.lh@web.de>

* change queue identifier

* fix swagger

* prevent nil issue

* fix and dont drop error

* as per @zeripath

* Update integrations/git_test.go

Co-authored-by: delvh <dev.lh@web.de>

* Update integrations/git_test.go

Co-authored-by: delvh <dev.lh@web.de>

* more declarative integration tests (dedup code)

* use assert.False/True helper

Co-authored-by: 赵智超 <1012112796@qq.com>

Co-authored-by: 6543 <6543@obermui.de>

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: zeripath <art27@cantab.net>

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Targeting #14936, #15332

Adds a collaborator permissions API endpoint according to the GitHub API: https://docs.github.com/en/rest/collaborators/collaborators#get-repository-permissions-for-a-user to retrieve a collaborator's permissions for a specific repository.

### Checks the repository permissions of a collaborator.

`GET` `/repos/{owner}/{repo}/collaborators/{collaborator}/permission`

Possible `permission` values are `admin`, `write`, `read`, `owner`, `none`.

```json

{

"permission": "admin",

"role_name": "admin",

"user": {}

}

```

Where `permission` and `role_name` hold the same `permission` value and `user` is filled with the user API object. Only admins are allowed to use this API endpoint.
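
On the client side, the response could be decoded along these lines (a sketch; the struct name `repoCollaboratorPermission` is hypothetical, and `user` is kept as raw JSON because its shape is not spelled out here):

```go
package main

import (
	"encoding/json"
	"fmt"
)

// repoCollaboratorPermission mirrors the JSON body shown above.
type repoCollaboratorPermission struct {
	Permission string          `json:"permission"`
	RoleName   string          `json:"role_name"`
	User       json.RawMessage `json:"user"` // user API object, left undecoded
}

// parsePermission decodes a response body into the struct.
func parsePermission(body []byte) (repoCollaboratorPermission, error) {
	var p repoCollaboratorPermission
	err := json.Unmarshal(body, &p)
	return p, err
}

func main() {
	body := []byte(`{"permission": "admin", "role_name": "admin", "user": {}}`)
	p, err := parsePermission(body)
	if err != nil {
		panic(err)
	}
	fmt.Println(p.Permission, p.RoleName) // admin admin
}
```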

* Don't error when branch's commit doesn't exist

- If one of the branches no longer exists, don't throw an error, it's possible that the branch was destroyed during the process. Simply skip it and disregard it.

- Resolves #19541

* Don't send empty objects

* Use more minimal approach

Adds a feature [like GitHub has](https://docs.github.com/en/pull-requests/collaborating-with-pull-requests/proposing-changes-to-your-work-with-pull-requests/creating-a-pull-request-from-a-fork) (step 7).

If you create a new PR from a forked repo, you can select (and change later, but only if you are the PR creator/poster) the "Allow edits from maintainers" option.

Then users with write access to the base branch get more permissions on this branch:

* use the update pull request button

* push directly from the command line (`git push`)

* edit/delete/upload files via web UI

* use related API endpoints

You can't merge PRs to this branch with this enabled; for that you'll need "full" code write permissions.

This feature has a pretty big impact on the permission system. I might have forgotten to change some things or missed security vulnerabilities. In this case, please leave a review or comment on this PR.

Closes #17728

Co-authored-by: 6543 <6543@obermui.de>

* Improve dashboard's repo list performance

- Avoid a lot of database lookups for all the repos by adding an

undocumented "minimal" mode for this specific task, which returns the

data that's only needed by this list which doesn't require any database

lookups.

- Makes fetching this list faster.

- Less CPU overhead when a user visits home page.

* Refactor javascript code + fix Fork icon

- Use async in the function so we can use `await`.

- Remove `archivedFilter` check for count, as it doesn't make sense to

show the count of repos when you can't even see them (as they are

filtered away).

* Add `count_only`

* Remove unnecessary code

* Improve comment

Co-authored-by: delvh <dev.lh@web.de>

* Update web_src/js/components/DashboardRepoList.js

Co-authored-by: delvh <dev.lh@web.de>

* Update web_src/js/components/DashboardRepoList.js

Co-authored-by: delvh <dev.lh@web.de>

* By default apply minimal mode

* Remove `minimal` parameter

* Refactor count header

* Simplify init

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Co-authored-by: zeripath <art27@cantab.net>

When a mirror repo interval is updated by the UI, it is rescheduled with that interval;

however, the API does not do this. The API also lacks the enable_prune option.

This PR adds this functionality in to the API Edit Repo endpoint.

Signed-off-by: Andrew Thornton <art27@cantab.net>

Reusing `/api/v1` from Gitea UI pages has pros and cons.

Pros:

1) Less code copy

Cons:

1) API/v1 has to support shared sessions with page requests.

2) You need to take both into account whenever you want to change something about api/v1 or a page.

This PR removes all dependencies on API/v1 from the UI pages.

Partially replace #16052

* An attempt to sync a non-mirror repo must give 400 (Bad Request)

* add missing return statement

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: techknowlogick <techknowlogick@gitea.io>

This puts the checks in one single place so they don't differ and maintainers cannot forget a check in one place while adding it to the other (as is the case at the moment).

Fix:

* The API ignores issue dependencies where Web does not

* The API checks "IsSignedIfRequired" where Web does not - the UI probably does, but nothing stops someone from crafting custom requests

* The default merge message is crafted a bit differently between API and Web if not set in specific cases ...