Fixes #21379

The commits are capped by `setting.UI.FeedMaxCommitNum` so

`len(commits)` is not the correct number. So this PR adds a new

`TotalCommits` field.
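
A minimal sketch of the idea, assuming a simplified payload type: `pushPayload` and `newPushPayload` are hypothetical names for illustration, not the actual Gitea types. The displayed list is capped, while the real count is stored separately.

```go
package main

// pushPayload is a hypothetical, simplified stand-in for the data attached
// to a push entry in the activity feed.
type pushPayload struct {
	Commits      []string // capped list that is actually rendered
	TotalCommits int      // real number of commits in the push
}

// newPushPayload keeps at most maxCommits entries for display but records
// the uncapped total, so len(Commits) is never mistaken for the real count.
func newPushPayload(all []string, maxCommits int) pushPayload {
	shown := all
	if maxCommits >= 0 && len(shown) > maxCommits {
		shown = shown[:maxCommits]
	}
	return pushPayload{Commits: shown, TotalCommits: len(all)}
}
```

With three pushed commits and a cap of two, `len(Commits)` is 2 while `TotalCommits` stays 3.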

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

When merge was changed to run in the background context, the db updates

were still running in request context. This means that the merge could

succeed while the db was left without the update.

This PR changes both to run in the hammer context. This is not

complete rollback protection, but it is much better.

Fix #21332

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

We should only log CheckPath errors if they are not simply due to

context cancellation - and we should add a little more context to the

error message.
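
A rough sketch of this pattern, under the assumption that the check returns an ordinary `error`. The helper names `checkPathLogLine` and `exampleLogLines` are hypothetical and only illustrate the filtering, not the actual Gitea code.

```go
package main

import (
	"context"
	"errors"
	"fmt"
)

// checkPathLogLine returns the message to log for a failed path check, or ""
// when err is nil or only reflects cancellation of ctx.
func checkPathLogLine(ctx context.Context, path string, err error) string {
	if err == nil || ctx.Err() != nil || errors.Is(err, context.Canceled) {
		return ""
	}
	// Include the path so the log entry carries some context.
	return fmt.Sprintf("CheckPath(%q) failed: %v", path, err)
}

// exampleLogLines runs one canceled check and one genuinely failing check.
func exampleLogLines() []string {
	canceledCtx, cancel := context.WithCancel(context.Background())
	cancel()
	return []string{
		checkPathLogLine(canceledCtx, "objects/info", canceledCtx.Err()),
		checkPathLogLine(context.Background(), "objects/info", errors.New("permission denied")),
	}
}
```

Only the second call produces a log line; the first is dropped because its context was already canceled.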

Fix #20709

Signed-off-by: Andrew Thornton <art27@cantab.net>

Close #20315 (fix the panic when parsing invalid input), Speed up #20231 (use ls-tree without size field)

Introduce ListEntriesRecursiveFast (ls-tree without size) and ListEntriesRecursiveWithSize (ls-tree with size)

Only load SECRET_KEY and INTERNAL_TOKEN if they exist.

Never write the config file if the keys do not exist, which was only a fallback for Gitea upgraded from < 1.5

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

This is useful in scenarios where the reverse proxy may have knowledge

of user emails, but does not know about usernames set on gitea,

as in the feature request in #19948.

I tested this by setting up a fresh gitea install with one user `mhl`

and email `m.hasnain.lakhani@gmail.com`. I then created a private repo,

and configured gitea to allow reverse proxy authentication.

Via curl I confirmed that these two requests now work and return 200s:

curl http://localhost:3000/mhl/private -I --header "X-Webauth-User: mhl"

curl http://localhost:3000/mhl/private -I --header "X-Webauth-Email: m.hasnain.lakhani@gmail.com"

Before this commit, the second request did not work.

I also verified that if I provide an invalid email or user,

a 404 is correctly returned as before.
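
The lookup tested above can be sketched roughly as follows. This is an illustration only: the `user` type, the in-memory user list, and the precedence of the username header over the email header are assumptions of this sketch, not a description of the actual implementation.

```go
package main

import "net/http"

// user is a hypothetical, simplified account record.
type user struct {
	Name  string
	Email string
}

// userFromProxyHeaders resolves the account named by the reverse proxy. The
// username header is tried first; the email header is used only when the
// username header is absent (an assumed precedence for this sketch).
func userFromProxyHeaders(h http.Header, users []user) (user, bool) {
	if name := h.Get("X-Webauth-User"); name != "" {
		for _, u := range users {
			if u.Name == name {
				return u, true
			}
		}
		return user{}, false
	}
	if email := h.Get("X-Webauth-Email"); email != "" {
		for _, u := range users {
			if u.Email == email {
				return u, true
			}
		}
	}
	return user{}, false
}
```

A request that carries only `X-Webauth-Email` resolves to the matching account, and an unknown user or email yields no match, which the caller can turn into a 404.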

Closes #19948

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: 6543 <6543@obermui.de>

The behaviour of `PreventSurroundingPre` has changed in

https://github.com/alecthomas/chroma/pull/618 so that apparently it now

causes line wrapper tags to be no longer emitted, but we need some form

of indication to split the HTML into lines, so I did what

https://github.com/yuin/goldmark-highlighting/pull/33 did and added the

`nopWrapper`.

Maybe there are more elegant solutions but for some reason, just

splitting the HTML string on `\n` did not work.

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Both allow only a limited number of characters. If you input more, you will get an error

message. So it makes sense to limit the characters of the input fields.

Slightly relax the MaxSize of repo's Description and Website

If you create a new branch while viewing a file or directory, you

get redirected to the root of the repo. With this PR, you keep your

current path instead of getting redirected to the repo root.

The `go-licenses` make task introduced in #21034 is being run on make vendor

and occasionally causes an empty go-licenses file if the vendors need to

change. This should be moved to the generate task as it is a generated file.

Now because of this change we also need to split generation into two separate

steps:

1. `generate-backend`

2. `generate-frontend`

In the future it would probably be useful to make `generate-swagger` part of `generate-frontend` but it's not tolerated with our .drone.yml

Ref #21034

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: delvh <dev.lh@web.de>

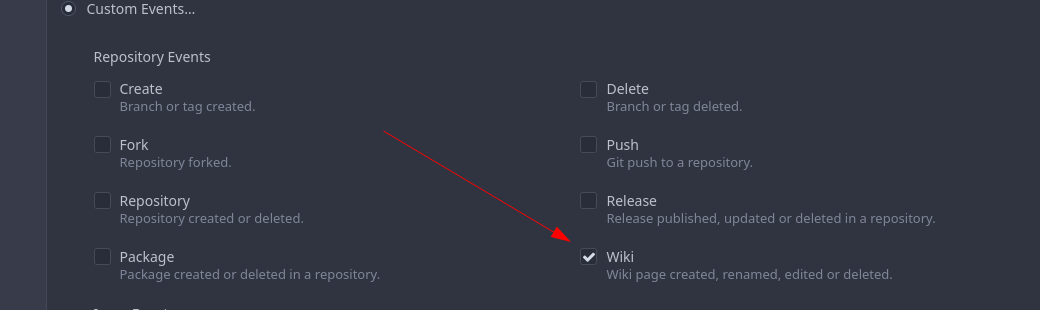

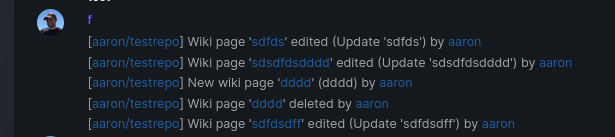

Add support for triggering webhook notifications on wiki changes.

This PR contains frontend and backend for webhook notifications on wiki actions (create a new page, rename a page, edit a page and delete a page). The frontend got a new checkbox under the Custom Event -> Repository Events section. There is only one checkbox for create/edit/rename/delete actions, because it makes no sense to separate it and others like releases or packages follow the same schema.

The actions themselves are separated, so that different notifications will be executed (with the "action" field). All the webhook receivers implement the new interface method (Wiki) and the corresponding tests.

While implementing this, I encountered a small bug when editing a wiki page. Creating and editing a wiki page are technically the same action and are handled by the `updateWikiPage` function, but the function needs to know whether it is a new wiki page or just a change. This distinction is made by the `action` parameter, which was not sent by the frontend on form submit. This PR fixes this by adding the `action` parameter with the value `_new` or `_edit`, which is then used by the `updateWikiPage` function.

I've done integration tests with matrix and gitea (http).

Fix #16457

Signed-off-by: Aaron Fischer <mail@aaron-fischer.net>

A testing cleanup.

This pull request replaces `os.MkdirTemp` with `t.TempDir`. We can use the `T.TempDir` function from the `testing` package to create a temporary directory. The directory created by `T.TempDir` is automatically removed when the test and all its subtests complete.

This saves us at least 2 lines (error check, and cleanup) on every instance, or in some cases adds cleanup that we forgot.

Reference: https://pkg.go.dev/testing#T.TempDir

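```go
import (
	"os"
	"testing"

	"github.com/stretchr/testify/require"
)

// before: manual error check and cleanup
func TestFooBefore(t *testing.T) {
	tmpDir, err := os.MkdirTemp("", "")
	require.NoError(t, err)
	defer os.RemoveAll(tmpDir)
}

// now: the directory is removed automatically when the test completes
func TestFooNow(t *testing.T) {
	tmpDir := t.TempDir()
	_ = tmpDir // use tmpDir in the test body
}
```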

Signed-off-by: Eng Zer Jun <engzerjun@gmail.com>

When migrating, add several more important sanity checks:

* SHAs must be SHAs

* Refs must be valid Refs

* URLs must be reasonable
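
The three checks above could look roughly like this. It is a sketch under stated assumptions: the function names, the accepted SHA length range, the simplified ref rules, and the restriction to `http`/`https` URLs are all choices made for illustration and are not taken from the PR.

```go
package main

import (
	"net/url"
	"regexp"
	"strings"
)

// shaPattern accepts abbreviated or full hex object names (assumed range).
var shaPattern = regexp.MustCompile(`^[0-9a-f]{4,40}$`)

// isValidSHA reports whether s looks like a hex commit SHA.
func isValidSHA(s string) bool {
	return shaPattern.MatchString(s)
}

// isValidRef applies a simplified subset of git's ref name rules.
func isValidRef(ref string) bool {
	if ref == "" || strings.HasPrefix(ref, "-") ||
		strings.HasSuffix(ref, "/") || strings.HasSuffix(ref, ".lock") {
		return false
	}
	if strings.Contains(ref, "..") || strings.Contains(ref, "@{") || strings.Contains(ref, "//") {
		return false
	}
	for _, r := range ref {
		if r <= ' ' || r == 0x7f || strings.ContainsRune("~^:?*[\\", r) {
			return false
		}
	}
	return true
}

// isReasonableURL accepts only absolute http(s) URLs with a host.
func isReasonableURL(raw string) bool {
	u, err := url.Parse(raw)
	if err != nil {
		return false
	}
	return (u.Scheme == "http" || u.Scheme == "https") && u.Host != ""
}
```

Values that fail a check can then be dropped or rejected before they reach git or the database.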

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: techknowlogick <matti@mdranta.net>

There are a lot of go dependencies that appear old and we should update them.

The following packages have been updated:

* codeberg.org/gusted/mcaptcha

* github.com/markbates/goth

* github.com/buildkite/terminal-to-html

* github.com/caddyserver/certmagic

* github.com/denisenkom/go-mssqldb

* github.com/duo-labs/webauthn

* github.com/editorconfig/editorconfig-core-go/v2

* github.com/felixge/fgprof

* github.com/gliderlabs/ssh

* github.com/go-ap/activitypub

* github.com/go-git/go-git/v5

* github.com/go-ldap/ldap/v3

* github.com/go-swagger/go-swagger

* github.com/go-testfixtures/testfixtures/v3

* github.com/golang-jwt/jwt/v4

* github.com/klauspost/compress

* github.com/lib/pq

* gitea.com/lunny/dingtalk_webhook - instead of github.com

* github.com/mattn/go-sqlite3

* github.com/mattn/go-isatty

* github.com/minio/minio-go/v7

* github.com/niklasfasching/go-org

* github.com/prometheus/client_golang

* github.com/stretchr/testify

* github.com/unrolled/render

* github.com/xanzy/go-gitlab

* gopkg.in/ini.v1

Signed-off-by: Andrew Thornton <art27@cantab.net>

* fix hard-coded timeout and error panic in API archive download endpoint

This commit updates the `GET /api/v1/repos/{owner}/{repo}/archive/{archive}`

endpoint which prior to this PR had a couple of issues.

1. The endpoint had a hard-coded 20s timeout for the archiver to complete after

which a 500 (Internal Server Error) was returned to client. For a scripted

API client there was no clear way of telling that the operation timed out and

that it should retry.

2. Whenever the timeout _did occur_, the code used to panic. This was caused by

the API endpoint "delegating" to the same call path as the web, which uses a

slightly different way of reporting errors (HTML rather than JSON for

example).

More specifically, `api/v1/repo/file.go#GetArchive` just called through to

`web/repo/repo.go#Download`, which expects the `Context` to have a `Render`

field set, but which is `nil` for API calls. Hence, a `nil` pointer error.

The code addresses (1) by dropping the hard-coded timeout. Instead, any

timeout/cancelation on the incoming `Context` is used.

The code addresses (2) by updating the API endpoint to use a separate call path

for the API-triggered archive download. On failure, the endpoint now returns

JSON errors instead of HTML error pages.
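
For point (1), waiting on the incoming context instead of a fixed timer can be sketched like this. The `done` channel standing in for the archiver and the helper names are assumptions of this sketch, not the actual code.

```go
package main

import (
	"context"
	"time"
)

// waitForArchive blocks until the (hypothetical) archiver signals completion
// on done, or until ctx is canceled or reaches its deadline. No timeout is
// hard-coded here; the caller's context decides how long to wait.
func waitForArchive(ctx context.Context, done <-chan struct{}) error {
	select {
	case <-done:
		return nil
	case <-ctx.Done():
		return ctx.Err()
	}
}

// exampleWait shows both outcomes: a finished archive and an expired request.
func exampleWait() (finishedErr, expiredErr error) {
	done := make(chan struct{})
	close(done)
	finishedErr = waitForArchive(context.Background(), done)

	ctx, cancel := context.WithTimeout(context.Background(), time.Millisecond)
	defer cancel()
	expiredErr = waitForArchive(ctx, make(chan struct{}))
	return finishedErr, expiredErr
}
```

The returned error can then be mapped to a JSON response by the API handler, so a scripted client can tell a timeout apart from other failures.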

Signed-off-by: Peter Gardfjäll <peter.gardfjall.work@gmail.com>

The recovery, API, Web and package frameworks all create their own HTML

Renderers. This increases the memory requirements of Gitea

unnecessarily with duplicate templates being kept in memory.

Further, the reloading framework in dev mode for these involves locking

and recompiling all of the templates on each load. This will potentially

hide concurrency issues and it is inefficient.

This PR stores the templates renderer in the context and stores this

context in the NormalRoutes. It then creates a fsnotify.Watcher

framework to watch files.

The watching framework is then extended to the mailer templates which

were previously not being reloaded in dev.

Then the locales are simplified to a similar structure.

Fix #20210

Fix #20211

Fix #20217

Signed-off-by: Andrew Thornton <art27@cantab.net>

Some Migration Downloaders provide re-writing of CloneURLs that may point to

unallowed urls. Recheck after the CloneURL is rewritten.
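
A minimal sketch of the recheck, assuming the rewrite and the allow check are available as callbacks. Both `rewrite` and `allowed` are hypothetical stand-ins for the downloader and the migration allow/block list.

```go
package main

import "fmt"

// rewriteAndRecheck validates the clone URL, applies the downloader's
// rewrite, and validates the result again if it changed.
func rewriteAndRecheck(cloneURL string, rewrite func(string) string, allowed func(string) bool) (string, error) {
	if !allowed(cloneURL) {
		return "", fmt.Errorf("clone URL %q is not allowed", cloneURL)
	}
	rewritten := rewrite(cloneURL)
	if rewritten != cloneURL && !allowed(rewritten) {
		return "", fmt.Errorf("rewritten clone URL %q is not allowed", rewritten)
	}
	return rewritten, nil
}
```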

Signed-off-by: Andrew Thornton <art27@cantab.net>

Some repositories do not have the PullRequest unit present in their configuration

and unfortunately the way that IsUserAllowedToUpdate currently works assumes

that this is an error instead of just returning false.

This PR simply swallows this error allowing the function to return false.
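
The shape of the change can be sketched as follows. The sentinel error and the `loadPullUnit` callback are hypothetical names used for illustration; the real code uses Gitea's own error helpers.

```go
package main

import "errors"

// errUnitTypeNotExist is a hypothetical sentinel for "the repository has no
// such unit configured".
var errUnitTypeNotExist = errors.New("unit type does not exist")

// isUserAllowedToUpdate treats a missing PullRequest unit as "not allowed"
// rather than as a failure; any other error is still returned.
func isUserAllowedToUpdate(loadPullUnit func() (bool, error)) (bool, error) {
	allowed, err := loadPullUnit()
	if errors.Is(err, errUnitTypeNotExist) {
		return false, nil
	}
	if err != nil {
		return false, err
	}
	return allowed, nil
}
```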

Fix #20621

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: Lauris BH <lauris@nix.lv>

This adds support for getting the user's full name from the reverse

proxy in addition to username and email.

Tested locally with caddy serving as reverse proxy with Tailscale

authentication.

Signed-off-by: Will Norris <will@tailscale.com>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

This PR rewrites the invisible unicode detection algorithm to more

closely match that of the Monaco editor on the system. It provides a

technique for detecting ambiguous characters and relaxes the detection

of combining marks.

In addition, control characters are detected as invisible in this

implementation, whereas they are not in Monaco; this difference is related to

font issues.
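
To give a feel for this kind of check, here is a much-simplified sketch. It is not the algorithm from this PR: it only flags control characters and Unicode format characters (category Cf), leaves combining marks alone, and does no ambiguous-character detection. The function names are hypothetical.

```go
package main

import "unicode"

// isInvisible reports whether r is rendered without a visible glyph under
// the simplified rules of this sketch.
func isInvisible(r rune) bool {
	switch r {
	case '\t', '\n', '\r', ' ':
		return false // ordinary whitespace is expected in source files
	}
	return unicode.IsControl(r) || unicode.Is(unicode.Cf, r)
}

// findInvisible returns the byte offsets of invisible runes in s.
func findInvisible(s string) []int {
	var offsets []int
	for i, r := range s {
		if isInvisible(r) {
			offsets = append(offsets, i)
		}
	}
	return offsets
}
```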

Close #19913

Signed-off-by: Andrew Thornton <art27@cantab.net>

Generating repositories from a template is done inside a transaction.

Manual rollback on error is not needed and it always results in error

"repository does not exist".

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

This bug affects tests which are sending emails (#20307). Some tests reinitialise the web routes (like `TestNodeinfo`) which messed up the mail templates. There is no reason why the templates should be loaded in the routes method.

* `PROTOCOL`: can be smtp, smtps, smtp+starttls, smtp+unix, sendmail, dummy

* `SMTP_ADDR`: domain for SMTP, or path to unix socket

* `SMTP_PORT`: port for SMTP; defaults to 25 for `smtp`, 465 for `smtps`, and 587 for `smtp+starttls`

* `ENABLE_HELO`, `HELO_HOSTNAME`: reverse `DISABLE_HELO` to `ENABLE_HELO`; default to false + system hostname

* `FORCE_TRUST_SERVER_CERT`: replace the unclear `SKIP_VERIFY`

* `CLIENT_CERT_FILE`, `CLIENT_KEY_FILE`, `USE_CLIENT_CERT`: clarify client certificates here
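
The port defaults listed above can be expressed as a small lookup. This is only a sketch: `defaultSMTPPort` is a hypothetical helper, and the STARTTLS protocol is spelled `smtp+starttls` here.

```go
package main

// defaultSMTPPort returns the default port for a mailer protocol, and false
// for protocols that have no TCP port default (unix socket, sendmail, dummy).
func defaultSMTPPort(protocol string) (port int, ok bool) {
	switch protocol {
	case "smtp":
		return 25, true
	case "smtps":
		return 465, true
	case "smtp+starttls":
		return 587, true
	default:
		return 0, false
	}
}
```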

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

- Add a new push mirror to a specific repository

- Sync now (send all the changes to the configured push mirrors)

- Get list of all push mirrors of a repository

- Get a push mirror by ID

- Delete push mirror by ID

Signed-off-by: Mohamed Sekour <mohamed.sekour@exfo.com>

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: zeripath <art27@cantab.net>

* Add latest commit's SHA to content response

- When requesting the contents of a filepath, add the latest commit's

SHA to the requested file.

- Resolves #12840

* Add swagger

* Fix NPE

* Fix tests

* Hook into LastCommitCache

* Move AddLastCommitCache to a common nogogit and gogit file

Signed-off-by: Andrew Thornton <art27@cantab.net>

* Prevent NPE

Co-authored-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

There is a subtle bug in the code relating to collating the results of

`git ls-files -u -z` in `unmergedFiles()`. The code here makes the

mistake of assuming that every unmerged file will always have a stage 1

conflict, and this results in conflicts that occur in stage 3 only being

dropped.

This PR simply adjusts this code to ensure that any empty unmergedFile

will always be passed down the channel.
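
A simplified sketch of the collation, assuming the whole output is available as one string instead of being streamed over a channel. `git ls-files -u -z` emits NUL-terminated records of the form `<mode> <object> <stage>\t<path>`; the `unmergedFile` type and `parseUnmerged` function here are hypothetical and only show that a file is emitted no matter which stages it has.

```go
package main

import "strings"

// unmergedFile collects the object names seen for one path, per stage.
type unmergedFile struct {
	path                   string
	stage1, stage2, stage3 string
}

// parseUnmerged groups consecutive records by path. A new entry is started
// whenever the path changes, not whenever a stage 1 record appears, so files
// that conflict only in stage 2 or 3 are kept.
func parseUnmerged(out string) []unmergedFile {
	var files []unmergedFile
	for _, rec := range strings.Split(out, "\x00") {
		meta, path, ok := strings.Cut(rec, "\t")
		if !ok {
			continue // empty trailing record or malformed line
		}
		fields := strings.Fields(meta)
		if len(fields) != 3 {
			continue
		}
		sha, stage := fields[1], fields[2]
		if len(files) == 0 || files[len(files)-1].path != path {
			files = append(files, unmergedFile{path: path})
		}
		f := &files[len(files)-1]
		switch stage {
		case "1":
			f.stage1 = sha
		case "2":
			f.stage2 = sha
		case "3":
			f.stage3 = sha
		}
	}
	return files
}
```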

The PR also adds a lot of Trace commands to attempt to help find future

bugs in this code.

Fix #19527

Signed-off-by: Andrew Thornton <art27@cantab.net>

Sometimes users want to receive email notifications for messages they create or reply to.

This adds an option to personal preferences that lets users choose.

Closes #20149

The LastCommitCache code is a little complex and there is unnecessary

duplication between the gogit and nogogit variants.

This PR adds the LastCommitCache as a field to the git.Repository and

pre-creates it in the ReferencesGit helpers etc. There has been some

simplification and unification of the variant code.

Signed-off-by: Andrew Thornton <art27@cantab.net>

Use Unicode placeholders to replace HTML tags and HTML entities first, then do diff, then recover the HTML tags and HTML entities. Now the code diff with highlight has stable behavior, and won't emit broken tags.
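
The placeholder round trip can be sketched as below. This is an illustration under assumptions: the regular expression, the use of the Unicode private use area starting at U+E000, and the `placeholders` type are choices of this sketch, and the diff step itself is left out.

```go
package main

import (
	"regexp"
	"strings"
)

// tokenPattern matches HTML tags and HTML entities (simplified).
var tokenPattern = regexp.MustCompile(`<[^>]+>|&[#a-zA-Z0-9]+;`)

// placeholders maps each distinct tag or entity to one private-use rune.
type placeholders struct {
	next   rune
	toHTML map[rune]string
	toRune map[string]rune
}

func newPlaceholders() *placeholders {
	return &placeholders{next: 0xE000, toHTML: map[rune]string{}, toRune: map[string]rune{}}
}

// encode replaces every tag and entity with its placeholder rune, so a
// character-level diff can never split a tag in the middle.
func (p *placeholders) encode(s string) string {
	return tokenPattern.ReplaceAllStringFunc(s, func(tok string) string {
		r, ok := p.toRune[tok]
		if !ok {
			r = p.next
			p.next++
			p.toRune[tok] = r
			p.toHTML[r] = tok
		}
		return string(r)
	})
}

// decode restores the original tags and entities after diffing.
func (p *placeholders) decode(s string) string {
	var b strings.Builder
	for _, r := range s {
		if tok, ok := p.toHTML[r]; ok {
			b.WriteString(tok)
		} else {
			b.WriteRune(r)
		}
	}
	return b.String()
}
```

Both sides of a diff would be encoded with the same `placeholders` value, diffed as plain text, and then decoded.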