This PR fixes two problems. First, when filtering repository issues, only
repository-level projects are listed. Second, when listing open issues,
only open projects are displayed in the filter options, and when listing
closed issues, only closed projects are displayed.

In this PR, both repository-level and org/user-level projects are
displayed in the filter, and both open and closed projects are listed as
filter items.

---------

Co-authored-by: John Olheiser <john.olheiser@gmail.com>

Co-authored-by: zeripath <art27@cantab.net>

Co-authored-by: delvh <dev.lh@web.de>

Every user can already disable the filter manually, so the explicit

setting is absolutely useless and only complicates the logic.

Previously, there was also unexpected behavior when multiple query

parameters were present.

---------

Co-authored-by: zeripath <art27@cantab.net>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Most of the time, forks are used only for contributing code, so not
having issues, projects, releases and packages is a better default for
such cases.

They can still be enabled in the settings.

A new option `DEFAULT_FORK_REPO_UNITS` is added to configure the default

units on forks.

Also add missing `repo.packages` unit to documentation.

code by: @brechtvl

## ⚠️ BREAKING ⚠️

When forking a repository, the fork will now have issues, projects,

releases, packages and wiki disabled. These can be enabled in the

repository settings afterwards. To change back to the previous default

behavior, configure `DEFAULT_FORK_REPO_UNITS` to be the same value as

`DEFAULT_REPO_UNITS`.

Co-authored-by: Brecht Van Lommel <brecht@blender.org>

Our trace logging is far from perfect and is difficult to follow.

This PR:

* Adds trace logging for process manager add and remove.
* Fixes an errant read file for git refs in getMergeCommit.
* Brings in the pullrequest `String` and `ColorFormat` methods introduced in #22568.
* Adds a lot more logging to testPR etc.

Ref #22578

---------

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: 6543 <6543@obermui.de>

Co-authored-by: techknowlogick <techknowlogick@gitea.io>

Fix #21994.

And fix #19470.

While generating a new repo from a template, it does something like
"commit to git repo, re-fetch repo model from DB, and update default
branch if it's empty".

19d5b2f922/modules/repository/generate.go (L241-L253)

Unfortunately, when loading the repo from the DB, the default branch will
be set to `setting.Repository.DefaultBranch` if it's empty:

19d5b2f922/models/repo/repo.go (L228-L233)

I believe it's a very old temporary patch but has been kept for many

years, see:

[2d2d85bb](https://github.com/go-gitea/gitea/commit/2d2d85bb#diff-1851799b06733db4df3ec74385c1e8850ee5aedee70b8b55366910d22725eea8)

I know that deleting it is a risk and may lead to behavioral changes,
but we cannot keep the outdated `FIXME` forever. On the other hand, an
empty `DefaultBranch` does make sense: an empty repo doesn't have one
conceptually (actually, Gitea will still set it to
`setting.Repository.DefaultBranch` to make it safer).

The error reported when a user passes a private ssh key as their ssh

public key is not very nice.

This PR improves this slightly.

Ref #22693

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: delvh <dev.lh@web.de>

- Currently the function `GetUsersWhoCanCreateOrgRepo` uses a query that
can return duplicated users. This can happen when a user is in a team
that either is the owner team or has permission to create organization
repositories.
- Add test code to simulate the above condition for user 3;
[`TestGetUsersWhoCanCreateOrgRepo`](a1fcb1cfb8/models/organization/org_test.go (L435))
is the test function that tests for this.
- The fix is quite trivial: use a map keyed by user ID in order to drop
duplicates.
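As an illustration of the map-based approach, here is a minimal sketch. It is not the code from this PR: the `User` type and the `dedupeUsers` helper are hypothetical stand-ins, and it only assumes that the query may return the same user ID more than once.

```go
package main

import "fmt"

// User is a hypothetical stand-in for the real user model.
type User struct {
	ID   int64
	Name string
}

// dedupeUsers keeps the first occurrence of every user ID and preserves
// the order in which users were returned by the (assumed) query.
func dedupeUsers(users []*User) []*User {
	seen := make(map[int64]struct{}, len(users))
	out := make([]*User, 0, len(users))
	for _, u := range users {
		if _, ok := seen[u.ID]; ok {
			continue // already collected via another team
		}
		seen[u.ID] = struct{}{}
		out = append(out, u)
	}
	return out
}

func main() {
	// user 3 appears twice, e.g. once per matching team
	rows := []*User{{ID: 3, Name: "user3"}, {ID: 5, Name: "user5"}, {ID: 3, Name: "user3"}}
	for _, u := range dedupeUsers(rows) {
		fmt.Println(u.ID, u.Name)
	}
}
```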

---------

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Currently only a single project like milestone, not multiple like

labels.

Implements #14298

Code by @brechtvl

---------

Co-authored-by: Brecht Van Lommel <brecht@blender.org>

Instead of re-creating, these should use the available `Link` methods

from the "parent" of the project, which also take sub-urls into account.

Signed-off-by: jolheiser <john.olheiser@gmail.com>

This commit adds support for specifying comment types when importing

with `gitea restore-repo`. It makes it possible to import issue changes,

such as "title changed" or "assigned user changed".

An earlier version of this pull request was made by Matti Ranta, in

https://future.projects.blender.org/blender-migration/gitea-bf/pulls/3

There are two changes with regard to Matti's original code:

1. The comment type was an `int64` in Matti's code, and is now using a

string. This makes it possible to use `comment_type: title`, which is

more reliable and future-proof than an index into an internal list in

the Gitea Go code.

2. Matti's code also had support for including labels, but in a way that

would require knowing the database ID of the labels before the import

even starts, which is impossible. This can be solved by using label

names instead of IDs; for simplicity I left that out of this PR.

When importing a repository via `gitea restore-repo`, external users

will get remapped to an admin user. This admin user is obtained via

`users.GetAdminUser()`, which unfortunately picks a more-or-less random

admin to return.

This makes it hard to predict which admin user will get assigned. This

patch orders the admin by ascending ID before choosing the first one,

i.e. it picks the admin with the lowest ID.

Even though it would be nicer to have full control over which user is

chosen, this at least gives us a predictable result.

This PR adds the support for scopes of access tokens, mimicking the

design of GitHub OAuth scopes.

The core logic changes are in `models/auth`, where the `AccessToken`
struct gains a `Scope` field. The normalized (no duplicated scopes),
comma-separated scope string is stored in the `access_token` table in
the database.
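To make "normalized, comma-separated" concrete, here is a rough sketch of such a normalization. It is an assumption-laden illustration, not the code in `models/auth`: the helper name `normalizeScope`, the sorting step and the example scope names are all hypothetical.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// normalizeScope trims whitespace, drops empty and duplicated entries and
// returns a comma-separated string. Sorting is an assumption made here so
// that equal scope sets always produce the same stored string.
func normalizeScope(raw string) string {
	seen := make(map[string]struct{})
	var scopes []string
	for _, s := range strings.Split(raw, ",") {
		s = strings.TrimSpace(s)
		if s == "" {
			continue
		}
		if _, ok := seen[s]; ok {
			continue
		}
		seen[s] = struct{}{}
		scopes = append(scopes, s)
	}
	sort.Strings(scopes)
	return strings.Join(scopes, ",")
}

func main() {
	// "repo" and "user" are illustrative scope names only
	fmt.Println(normalizeScope("repo, user,repo"))
}
```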

In `services/auth`, the scope will be stored in context, which will be

used by `reqToken` middleware in API calls. Only OAuth2 tokens will have

granular token scopes, while others like BasicAuth will default to scope

`all`.

A large amount of work happens in `routers/api/v1/api.go` and the
corresponding `tests/integration` tests, which add the necessary scopes
to each of the API calls as they fit.

- [x] Add `Scope` field to `AccessToken`

- [x] Add access control to all API endpoints

- [x] Update frontend & backend for when creating tokens

- [x] Add a database migration for `scope` column (enable 'all' access

to past tokens)

I'm aiming to complete it before Gitea 1.19 release.

Fixes #4300

This PR adds a task to the cron service to allow garbage collection of

LFS meta objects. As repositories may have a large number of

LFSMetaObjects, an updated column is added to this table and it is used

to perform a generational GC to attempt to reduce the amount of work.

(There may need to be a bit more work here but this is probably enough

for the moment.)

Fix #7045

Signed-off-by: Andrew Thornton <art27@cantab.net>

There is a mistake in the code for SearchRepositoryCondition where it

tests topics as a string. This is incorrect for postgres where topics is

cast and stored as json. topics needs to be cast to text for this to

work. (For some reason JSON_ARRAY_LENGTH does not work, so I have taken

the simplest solution of casting to text and doing a string comparison.)

Ref https://github.com/go-gitea/gitea/pull/21962#issuecomment-1379584057

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: delvh <dev.lh@web.de>

This PR introduces glob matching for protected branch names. The
separator is `/`; `*` matches non-separator characters and `**` matches
across separators.

It also supports entering an existing or non-existing branch name as the
matching condition, and a branch name condition has higher priority than
a glob rule.
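The matching rules described above can be sketched with the standard library alone. This is only an illustration of the `*` versus `**` semantics, not the implementation used by this PR; `globToRegexp` and `matchBranch` are hypothetical helpers that translate a pattern into a regular expression.

```go
package main

import (
	"fmt"
	"regexp"
	"strings"
)

// globToRegexp translates a branch glob into an anchored regular expression:
// "**" matches any characters including "/", "*" matches any characters
// except "/", and everything else is matched literally.
func globToRegexp(pattern string) (*regexp.Regexp, error) {
	runes := []rune(pattern)
	var b strings.Builder
	b.WriteString("^")
	for i := 0; i < len(runes); i++ {
		switch {
		case runes[i] == '*' && i+1 < len(runes) && runes[i+1] == '*':
			b.WriteString(".*")
			i++ // consume the second '*'
		case runes[i] == '*':
			b.WriteString("[^/]*")
		default:
			b.WriteString(regexp.QuoteMeta(string(runes[i])))
		}
	}
	b.WriteString("$")
	return regexp.Compile(b.String())
}

// matchBranch reports whether the branch name matches the glob pattern.
func matchBranch(pattern, name string) bool {
	re, err := globToRegexp(pattern)
	if err != nil {
		return false
	}
	return re.MatchString(name)
}

func main() {
	fmt.Println(matchBranch("release/*", "release/v1"))
	fmt.Println(matchBranch("release/*", "release/v1/hotfix"))
	fmt.Println(matchBranch("release/**", "release/v1/hotfix"))
}
```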

Should fix #2529 and #15705

screenshots

<img width="1160" alt="image"

src="https://user-images.githubusercontent.com/81045/205651179-ebb5492a-4ade-4bb4-a13c-965e8c927063.png">

Co-authored-by: zeripath <art27@cantab.net>

closes #13585

fixes #9067

fixes #2386

ref #6226

ref #6219

fixes #745

This PR adds support to process incoming emails to perform actions.

Currently I added handling of replies and unsubscribing from

issues/pulls. In contrast to #13585 the IMAP IDLE command is used

instead of polling which results (in my opinion 😉) in cleaner code.

Procedure:

- When sending an issue/pull reply email, a token is generated which is

present in the Reply-To and References header.

- IMAP IDLE waits until a new email arrives

- The token tells which action should be performed

A possible signature and/or reply gets stripped from the content.

I added a new service to the drone pipeline to test the receiving of

incoming mails. If we keep this in, we may test our outgoing emails too

in future.

Co-authored-by: silverwind <me@silverwind.io>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Fix #22386

`GetDirectorySize` is moved and renamed to `getDirectorySize` because it
has become a special function which should not be put in `util`.

Co-authored-by: Jason Song <i@wolfogre.com>

- Merge the files `compare.go` and `slice.go` into `slice.go`.
- Fix `ExistsInSlice`, which is buggy:
  - It uses `sort.Search`, so it assumes that the input slice is sorted.
  - It passes `func(i int) bool { return slice[i] == target }` to `sort.Search`, which is incorrect; check the doc of `sort.Search`.
- Combine `IsInt64InSlice(int64, []int64)` and `ExistsInSlice(string, []string)` into `SliceContains[T]([]T, T)`.
- Combine `IsSliceInt64Eq([]int64, []int64)` and `IsEqualSlice([]string, []string)` into `SliceSortedEqual[T]([]T, []T)`.
- Add `SliceEqual[T]([]T, []T)` as a distinction from `SliceSortedEqual[T]([]T, []T)`.
- Redesign `RemoveIDFromList([]int64, int64) ([]int64, bool)` as `SliceRemoveAll[T]([]T, T) []T`.
- Add `SliceContainsFunc[T]([]T, func(T) bool)` and `SliceRemoveAllFunc[T]([]T, func(T) bool)` for general use.
- Add comments to explain why not `golang.org/x/exp/slices`.
- Add unit tests.
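To show why an equality predicate breaks `sort.Search`, here is a small sketch. `buggyExists` is my reconstruction of the flawed pattern from the description above (an assumption, not a copy of the old code), and `SliceContains` is a simplified linear-scan version whose exact signature in the PR may differ.

```go
package main

import (
	"fmt"
	"sort"
)

// SliceContains reports whether target is in s. It is a plain linear scan,
// so it does not require s to be sorted.
func SliceContains[T comparable](s []T, target T) bool {
	for _, v := range s {
		if v == target {
			return true
		}
	}
	return false
}

// buggyExists reproduces the flawed idea: sort.Search expects a predicate
// that is false for a prefix of the slice and true for the rest, but an
// equality check is true for at most a few isolated positions.
func buggyExists(s []string, target string) bool {
	i := sort.Search(len(s), func(i int) bool { return s[i] == target })
	return i < len(s)
}

func main() {
	s := []string{"a", "b", "c"} // already sorted
	fmt.Println(buggyExists(s, "a"))
	fmt.Println(SliceContains(s, "a"))
}
```

Even on a sorted slice, the binary search probes the middle element first, sees `false`, and moves right, so `"a"` is never found.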

Related to #22362.

I overlooked that there's always `committer.Close()`, like:

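```go
ctx, committer, err := db.TxContext(db.DefaultContext)
if err != nil {
	return err
}
// Close is always called, even after a successful Commit.
defer committer.Close()

// ...

if err != nil {
	// early return without Commit: the deferred Close acts as a rollback
	return err
}

// ...

return committer.Commit()
```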

So the `Close` of `halfCommitter` should do nothing in the "commit and
close" case, since that is not a rollback.

See: [Why `halfCommitter` and `WithTx` should rollback IMMEDIATELY or

commit

LATER](https://github.com/go-gitea/gitea/pull/22366#issuecomment-1374778612).
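The intended behavior can be sketched as follows. This is a simplified model under my own assumptions: `fakeTx` is a hypothetical parent transaction used only to observe the effect, and the field names of `halfCommitter` are illustrative.

```go
package main

import "fmt"

// Committer mirrors the two methods used in the snippet above.
type Committer interface {
	Commit() error
	Close() error
}

// fakeTx is a hypothetical parent transaction that records what happened.
type fakeTx struct{ committed, rolledBack bool }

func (t *fakeTx) Commit() error { t.committed = true; return nil }

func (t *fakeTx) Close() error {
	if !t.committed {
		t.rolledBack = true // closing without commit means rollback
	}
	return nil
}

// halfCommitter is handed out for a nested transaction.
type halfCommitter struct {
	parent    Committer
	committed bool
}

// Commit only remembers the intent; the parent commits LATER.
func (c *halfCommitter) Commit() error {
	c.committed = true
	return nil
}

// Close after Commit is "commit and close" and does nothing.
// Close without Commit is a rollback and is forwarded IMMEDIATELY.
func (c *halfCommitter) Close() error {
	if c.committed {
		return nil
	}
	return c.parent.Close()
}

// run simulates a nested transaction that either commits or returns early.
func run(commit bool) *fakeTx {
	parent := &fakeTx{}
	hc := &halfCommitter{parent: parent}
	defer hc.Close()
	if commit {
		_ = hc.Commit()
	}
	return parent
}

func main() {
	fmt.Println("rolled back after commit:", run(true).rolledBack)
	fmt.Println("rolled back after early return:", run(false).rolledBack)
}
```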

Co-authored-by: techknowlogick <techknowlogick@gitea.io>

After #22362, we can feel free to use transactions without

`db.DefaultContext`.

And there are still lots of models using `db.DefaultContext`, I think we

should refactor them carefully and one by one.

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Unfortunately, #22295 introduced a bug: when setting a cached system
setting, the change does not take effect.

This PR makes sure to remove the cache key when updating a system
setting.

Fix #22332

Fix #22281

In #21621, `Get[V]` and `Set[V]` were introduced, so that the cached
value is a `*Setting`. For the memory cache that's OK, but the redis
cache can only store a `string` in the current implementation. This PR
reverts some of those changes and just stores or returns a `string` for
system settings.

Previously, there was an `import services/webhooks` inside

`modules/notification/webhook`.

This import was removed (after fighting against many import cycles).

Additionally, `modules/notification/webhook` was moved to

`modules/webhook`,

and a few structs/constants were extracted from `models/webhooks` to

`modules/webhook`.

Co-authored-by: 6543 <6543@obermui.de>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

#18058 made a mistake. The default value of `disableGravatar` depends on
`OfflineMode`: if `OfflineMode` is `true`, then `disableGravatar` is
`true`; otherwise it's `false`, not the opposite.

Co-authored-by: KN4CK3R <admin@oldschoolhack.me>

If a user has reached the maximum limit of repositories:

- Before
  - disallow create
  - allow fork without limit
- This patch:
  - disallow create
  - disallow fork
- Add option `ALLOW_FORK_WITHOUT_MAXIMUM_LIMIT` (Default **true**):
  enabling this allows users to fork repositories without the maximum
  number limit

fixed https://github.com/go-gitea/gitea/issues/21847

Signed-off-by: Xinyu Zhou <i@sourcehut.net>

Some dbs require that all tables have primary keys, see

- #16802

- #21086

We can add a test to keep it from being broken again.

Edit:

~Added missing primary key for `ForeignReference`~ Dropped the

`ForeignReference` table to satisfy the check, so it closes #21086.

More context can be found in comments.

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: zeripath <art27@cantab.net>

The recent PR adding orphaned checks to the LFS storage is not

sufficient to completely GC LFS, as it is possible for LFSMetaObjects to

remain associated with repos but still need to be garbage collected.

Imagine a situation where a branch is uploaded containing LFS files but

that branch is later completely deleted. The LFSMetaObjects will remain

associated with the Repository but the Repository will no longer contain

any pointers to the object.

This PR adds a second doctor command to perform a full GC.

Signed-off-by: Andrew Thornton <art27@cantab.net>

depends on #22094

Fixes https://codeberg.org/forgejo/forgejo/issues/77

The old logic did not consider `is_internal`.

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: techknowlogick <techknowlogick@gitea.io>

Close #14601

Fix #3690

Revive of #14601.

Updated to current code, cleanup and added more read/write checks.

Signed-off-by: Andrew Thornton <art27@cantab.net>

Signed-off-by: Andre Bruch <ab@andrebruch.com>

Co-authored-by: zeripath <art27@cantab.net>

Co-authored-by: 6543 <6543@obermui.de>

Co-authored-by: Norwin <git@nroo.de>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Fix #22023

I've changed how the percentages for the language statistics are rounded
because they did not always add up to 100%.

Now it's done with the largest remainder method, which makes sure that
the total is 100%.
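For readers unfamiliar with the largest remainder method, here is a minimal sketch. It rounds to whole percentage points for simplicity, which is my assumption and may differ from the precision used in the actual change; `largestRemainder` is a hypothetical helper name.

```go
package main

import (
	"fmt"
	"sort"
)

// largestRemainder distributes exactly 100 percentage points across sizes.
// Each entry first gets the floor of its exact share; the points that are
// still missing go to the entries with the largest remainders.
func largestRemainder(sizes []int64) []int {
	out := make([]int, len(sizes))
	var total int64
	for _, s := range sizes {
		total += s
	}
	if total == 0 {
		return out
	}
	type rem struct {
		idx int
		r   int64
	}
	rems := make([]rem, len(sizes))
	assigned := 0
	for i, s := range sizes {
		out[i] = int(s * 100 / total)
		rems[i] = rem{idx: i, r: s * 100 % total}
		assigned += out[i]
	}
	// stable sort keeps the original order for equal remainders
	sort.SliceStable(rems, func(a, b int) bool { return rems[a].r > rems[b].r })
	for k := 0; k < 100-assigned; k++ {
		out[rems[k].idx]++
	}
	return out
}

func main() {
	// three equally sized languages: naive rounding would give 33+33+33 = 99
	fmt.Println(largestRemainder([]int64{1, 1, 1}))
}
```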

Co-authored-by: Lauris BH <lauris@nix.lv>

When deleting a closed issue, we should update both `NumIssues` and
`NumClosedIssues`, or `NumOpenIssues` (`= NumIssues - NumClosedIssues`)
will be wrong. It's the same for pull requests.

Related to #21557.

Also fixed two harmless problems:

- The SQL to check issue/PR total numbers is wrong, which means it will
update the numbers even if they are correct.
- Replace legacy `num_issues = num_issues + 1` operations with
`UpdateRepoIssueNumbers`.

When getting tracked times out of the DB and loading their attributes,
handle not-exist errors in a nicer way. (Also prevent an NPE.)

Fix #22006

Signed-off-by: Andrew Thornton <art27@cantab.net>

`hex.EncodeToString` has better performance than `fmt.Sprintf("%x",

[]byte)`, we should use it as much as possible.

I'm not an extreme fan of performance, so I think there are some

exceptions:

- `fmt.Sprintf("%x", func(...)[N]byte())`
  - We can't slice the function return value directly, and it's not worth
    adding lines.
    ```diff
    func A()[20]byte { ... }
    - a := fmt.Sprintf("%x", A())
    - a := hex.EncodeToString(A()[:]) // invalid
    + tmp := A()
    + a := hex.EncodeToString(tmp[:])
    ```
- `fmt.Sprintf("%X", []byte)`
  - `strings.ToUpper(hex.EncodeToString(bytes))` has even worse
    performance.
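As a quick check that the replacement is behavior-preserving, the following sketch compares both encodings on the same input. `viaSprintf` and `viaHex` are hypothetical helper names used only for this comparison; no benchmark numbers are claimed here.

```go
package main

import (
	"crypto/sha256"
	"encoding/hex"
	"fmt"
)

// viaSprintf is the old style: formatting a byte slice with %x.
func viaSprintf(b []byte) string { return fmt.Sprintf("%x", b) }

// viaHex is the replacement: it yields the same lowercase hex string.
func viaHex(b []byte) string { return hex.EncodeToString(b) }

func main() {
	// Sum256 returns an array, so it is stored in a variable before slicing
	sum := sha256.Sum256([]byte("gitea"))
	fmt.Println(viaSprintf(sum[:]) == viaHex(sum[:]))
}
```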

Change all license headers to comply with REUSE specification.

Fix #16132

Co-authored-by: flynnnnnnnnnn <flynnnnnnnnnn@github>

Co-authored-by: John Olheiser <john.olheiser@gmail.com>

Committer avatars rendered by `func AvatarByEmail` are not vertically
aligned the way those rendered by `func Avatar` are.

- Replace literals `ui avatar` and `ui avatar vm` with the constant

`DefaultAvatarClass`

When re-retrieving hook tasks from the DB double check if they have not

been delivered in the meantime. Further ensure that tasks are marked as

delivered when they are being delivered.

In addition:

* Improve the error reporting and make sure that the webhook task

population script runs in a separate goroutine.

* Only get hook task IDs out of the DB instead of the whole task when

repopulating the queue

* When repopulating the queue make the DB request paged

Ref #17940

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Unfortunately #21549 changed the name of Testcases without changing

their associated fixture directories.

Fix #21854

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

This PR adds a context parameter to a bunch of methods. Some helper

`xxxCtx()` methods got replaced with the normal name now.

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

- It's possible that the `user_redirect` table contains a user id that

no longer exists.

- Delete a user redirect upon deleting the user.

- Add a check for these dangling user redirects to check-db-consistency.

The doctor check `storages` currently only checks the attachment

storage. This PR adds some basic garbage collection functionality for

the other types of storage.

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Fix #19513

This PR introduces a new db method `InTransaction(context.Context)`,
and also built-in checks in `db.TxContext` and `db.WithTx`.

A new method `db.AutoTx` has also been introduced; it can be used by other PRs.

`WithTx` will always open a new transaction; if a transaction already exists in the context, it returns an error.

`AutoTx` will try to open a new transaction if no transaction exists in the context.

That means it will always enter a transaction if there is no error.
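The difference between the two helpers can be modeled without a database. The sketch below is only a model under my assumptions: the function signatures, the `txKey` context marker and `ErrAlreadyInTransaction` are hypothetical, and no real transaction is opened.

```go
package main

import (
	"context"
	"errors"
	"fmt"
)

// txKey marks a context as being inside a (simulated) transaction.
type txKey struct{}

// ErrAlreadyInTransaction is a hypothetical error for nested WithTx calls.
var ErrAlreadyInTransaction = errors.New("already in a transaction")

// InTransaction reports whether ctx is already inside a transaction.
func InTransaction(ctx context.Context) bool {
	return ctx.Value(txKey{}) != nil
}

// WithTx always wants a new transaction and refuses to nest.
func WithTx(ctx context.Context, f func(ctx context.Context) error) error {
	if InTransaction(ctx) {
		return ErrAlreadyInTransaction
	}
	// a real implementation would begin here and commit or roll back after f
	return f(context.WithValue(ctx, txKey{}, true))
}

// AutoTx reuses an existing transaction, otherwise it opens a new one.
func AutoTx(ctx context.Context, f func(ctx context.Context) error) error {
	if InTransaction(ctx) {
		return f(ctx)
	}
	return WithTx(ctx, f)
}

// nestedResults runs both helpers inside an outer WithTx and returns
// the error of the nested WithTx and of the nested AutoTx.
func nestedResults() (withTxErr, autoTxErr error) {
	noop := func(context.Context) error { return nil }
	_ = WithTx(context.Background(), func(ctx context.Context) error {
		withTxErr = WithTx(ctx, noop)
		autoTxErr = AutoTx(ctx, noop)
		return nil
	})
	return withTxErr, autoTxErr
}

func main() {
	w, a := nestedResults()
	fmt.Println("nested WithTx:", w)
	fmt.Println("nested AutoTx:", a)
}
```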

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: 6543 <6543@obermui.de>

Related #20471

This PR adds global quota limits for the package registry. Settings for

individual users/orgs can be added in a separate PR using the settings

table.

Co-authored-by: Lauris BH <lauris@nix.lv>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Close https://github.com/go-gitea/gitea/issues/21640

Before: Gitea can create users like ".xxx" or "x..y", which is not
ideal. It's already a consensus that dot filenames have special
meanings, and `a..b` is a confusing name when doing cross-repo compare.

After: stricter

Co-authored-by: Jason Song <i@wolfogre.com>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: delvh <dev.lh@web.de>

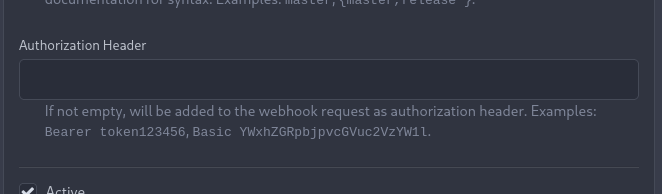

_This is a different approach to #20267, I took the liberty of adapting

some parts, see below_

## Context

In some cases, a webhook endpoint requires some kind of authentication.

The usual way is by sending a static `Authorization` header, with a

given token. For instance:

- Matrix expects a `Bearer <token>` (already implemented, by storing the

header cleartext in the metadata - which is buggy on retry #19872)

- TeamCity #18667

- Gitea instances #20267

- SourceHut https://man.sr.ht/graphql.md#authentication-strategies (this

is my actual personal need :)

## Proposed solution

Add a dedicated encrypted column to the webhook table (instead of storing
it as meta as proposed in #20267), so that it becomes available for all
present and future hook types (especially the custom ones #19307).

This would also solve the buggy matrix retry #19872.

As a first step, I would recommend focusing on the backend logic and

improve the frontend at a later stage. For now the UI is a simple

`Authorization` field (which could be later customized with `Bearer` and

`Basic` switches):

The header name is hard-coded, since I couldn't find any use case
justifying otherwise.

## Questions

- What do you think of this approach? @justusbunsi @Gusted @silverwind

- ~~How are the migrations generated? Do I have to manually create a new

file, or is there a command for that?~~

- ~~I started adding it to the API: should I complete it or should I

drop it? (I don't know how much the API is actually used)~~

## Done as well:

- add a migration for the existing matrix webhooks and remove the

`Authorization` logic there

_Closes #19872_

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: Gusted <williamzijl7@hotmail.com>

Co-authored-by: delvh <dev.lh@web.de>

I found myself wondering whether a PR I scheduled for automerge was

actually merged. It was, but I didn't receive a mail notification for it

- that makes sense considering I am the doer and usually don't want to

receive such notifications. But ideally I want to receive a notification

when a PR was merged because I scheduled it for automerge.

This PR implements exactly that.

The implementation works, but I wonder if there's a way to avoid passing

the "This PR was automerged" state down so much. I tried solving this

via the database (checking if there's an automerge scheduled for this PR

when sending the notification) but that did not work reliably, probably

because sending the notification happens async and the entry might have

already been deleted. My implementation might be the most

straightforward but maybe not the most elegant.

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: Lauris BH <lauris@nix.lv>

Co-authored-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

The OAuth spec [defines two types of

client](https://datatracker.ietf.org/doc/html/rfc6749#section-2.1),

confidential and public. Previously Gitea assumed all clients to be

confidential.

> OAuth defines two client types, based on their ability to authenticate securely with the authorization server (i.e., ability to maintain the confidentiality of their client credentials):
>
> confidential
> Clients capable of maintaining the confidentiality of their credentials (e.g., client implemented on a secure server with restricted access to the client credentials), or capable of secure client authentication using other means.
>
> **public
> Clients incapable of maintaining the confidentiality of their credentials (e.g., clients executing on the device used by the resource owner, such as an installed native application or a web browser-based application), and incapable of secure client authentication via any other means.**
>
> The client type designation is based on the authorization server's definition of secure authentication and its acceptable exposure levels of client credentials. The authorization server SHOULD NOT make assumptions about the client type.

https://datatracker.ietf.org/doc/html/rfc8252#section-8.4

> Authorization servers MUST record the client type in the client registration details in order to identify and process requests accordingly.

Require PKCE for public clients:

https://datatracker.ietf.org/doc/html/rfc8252#section-8.1

> Authorization servers SHOULD reject authorization requests from native apps that don't use PKCE by returning an error message
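For context, a PKCE check with the `S256` method (RFC 7636) can be sketched like this. It is not the code of this PR: `verifyPKCE` and its `confidential` parameter are hypothetical and only illustrate the rule that public clients must present a valid challenge.

```go
package main

import (
	"crypto/sha256"
	"crypto/subtle"
	"encoding/base64"
	"fmt"
)

// s256Challenge derives the code challenge from a code verifier:
// BASE64URL(SHA256(verifier)) without padding, as defined by RFC 7636.
func s256Challenge(verifier string) string {
	sum := sha256.Sum256([]byte(verifier))
	return base64.RawURLEncoding.EncodeToString(sum[:])
}

// verifyPKCE is a hypothetical policy check: confidential clients may skip
// PKCE, public clients must have sent a challenge that matches the verifier.
func verifyPKCE(confidential bool, challenge, verifier string) bool {
	if challenge == "" {
		return confidential
	}
	computed := s256Challenge(verifier)
	return subtle.ConstantTimeCompare([]byte(computed), []byte(challenge)) == 1
}

func main() {
	// verifier taken from the example in RFC 7636, Appendix B
	verifier := "dBjftJeZ4CVP-mB92K27uhbUJU1p1r_wW1gFWFOEjXk"
	challenge := s256Challenge(verifier)
	fmt.Println(challenge)
	fmt.Println(verifyPKCE(false, challenge, verifier))
	fmt.Println(verifyPKCE(false, "", ""))
}
```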

Fixes #21299

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

When actions besides "delete" are performed on issues, the milestone

counter is updated. However, since deleting issues goes through a

different code path, the associated milestone's count wasn't being

updated, resulting in inaccurate counts until another issue in the same

milestone had a non-delete action performed on it.

I verified this change fixes the inaccurate counts using a local docker

build.

Fixes #21254

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

At the moment a repository reference is needed for webhooks. With the

upcoming package PR we need to send webhooks without a repository

reference. For example a package is uploaded to an organization. In

theory this enables the usage of webhooks for future user actions.

This PR removes the repository id from `HookTask` and changes how the
hooks are processed (see `services/webhook/deliver.go`). In a follow-up
PR I want to remove the usage of the `UniqueQueue` and replace it with a
normal queue because there is no reason for it to be unique.

Co-authored-by: 6543 <6543@obermui.de>

A lot of our code is repeatedly testing if individual errors are
specific types of Not Exist errors. This is repetitive and unnecessary.
`Unwrap() error` provides a common way of labelling an error as a
NotExist error and we can/should use this.

This PR has chosen to use the common `io/fs` errors e.g.

`fs.ErrNotExist` for our errors. This is in some ways not completely

correct as these are not filesystem errors but it seems like a

reasonable thing to do and would allow us to simplify a lot of our code

to `errors.Is(err, fs.ErrNotExist)` instead of

`package.IsErr...NotExist(err)`

I am open to suggestions to use a different base error - perhaps

`models/db.ErrNotExist` if that would be felt to be better.
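A small sketch of the pattern, assuming a made-up error type: `ErrThingNotExist` and `isNotExist` are hypothetical names, and only `errors.Is`, `fs.ErrNotExist` and the `Unwrap() error` convention come from the standard library.

```go
package main

import (
	"errors"
	"fmt"
	"io/fs"
)

// ErrThingNotExist is a hypothetical typed "not exist" error.
type ErrThingNotExist struct{ ID int64 }

func (e ErrThingNotExist) Error() string {
	return fmt.Sprintf("thing does not exist [id: %d]", e.ID)
}

// Unwrap labels the error as a NotExist error for errors.Is.
func (e ErrThingNotExist) Unwrap() error { return fs.ErrNotExist }

// isNotExist replaces a family of per-type IsErr...NotExist helpers.
func isNotExist(err error) bool {
	return errors.Is(err, fs.ErrNotExist)
}

func main() {
	err := fmt.Errorf("loading thing: %w", ErrThingNotExist{ID: 42})
	fmt.Println(isNotExist(err))
	fmt.Println(isNotExist(errors.New("some other failure")))
}
```

Callers that still need the concrete type can keep using `errors.As`; the shared base error only simplifies the common "does it exist?" check.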

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: delvh <dev.lh@web.de>

depends on #18871

Added some api integration tests to help testing of #18798.

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Co-authored-by: zeripath <art27@cantab.net>

Co-authored-by: techknowlogick <techknowlogick@gitea.io>