Fix #28843

With this PR, a failure of `pushUpdateTag` to write to the database no
longer aborts `syncAllTags`. The failure is recorded in the error log instead.

---------

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

(cherry picked from commit 5ed17d9895bf678374ef5227ca37870c1c170802)

Previously, the repo wiki was hardcoded to use `master` as its branch.
This change makes it possible to use `main` (or something else, governed
by `[repository].DEFAULT_BRANCH`, a setting that already exists and
defaults to `main`).

The way it is done is that a new column is added to the `repository`

table: `wiki_branch`. The migration will make existing repositories

default to `master`, for compatibility's sake, even if they don't have a

Wiki (because it's easier to do that). Newly created repositories will

default to `[repository].DEFAULT_BRANCH` instead.

The Wiki service was updated to use the branch name stored in the

database, and fall back to the default if it is empty.
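
The fallback described above can be sketched as follows. This is a minimal illustration, not the actual Wiki service code: the `Repository` struct and the `wikiBranch` helper are hypothetical names, and the default branch is passed in as a plain string standing in for `[repository].DEFAULT_BRANCH`.

```go
package main

import "fmt"

// Repository is a hypothetical, trimmed-down stand-in for a row of the
// `repository` table; only the new `wiki_branch` column is modeled.
type Repository struct {
	Name       string
	WikiBranch string // may be empty if nothing was stored
}

// wikiBranch returns the stored wiki branch, falling back to the configured
// default (the value of [repository].DEFAULT_BRANCH) when it is empty.
func wikiBranch(repo Repository, defaultBranch string) string {
	if repo.WikiBranch == "" {
		return defaultBranch
	}
	return repo.WikiBranch
}

func main() {
	migrated := Repository{Name: "old", WikiBranch: "master"}
	unset := Repository{Name: "new"}

	fmt.Println(wikiBranch(migrated, "main")) // stored value wins
	fmt.Println(wikiBranch(unset, "main"))    // empty value falls back
}
```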

Old repositories with Wikis using the older `master` branch will have

the option to do a one-time transition to `main`, available via the

repository settings in the "Danger Zone". This option will only be
available for repositories that have the internal wiki enabled, whose
wiki is not empty, and whose wiki branch is not `[repository].DEFAULT_BRANCH`.

When migrating a repository with a Wiki, Forgejo will use the same

branch name for the wiki as the source repository did. If that's not the

same as the default, the option to normalize it will be available after

the migration's done.

Additionally, the `/api/v1/{owner}/{repo}` endpoint was updated: it will

now include the wiki branch name in `GET` requests, and allow changing

the wiki branch via `PATCH`.

Signed-off-by: Gergely Nagy <forgejo@gergo.csillger.hu>

(cherry picked from commit d87c526d2a313fa45093ab49b78bb30322b33298)

## Purpose

This is a refactor toward building an abstraction over managing git

repositories.

Afterwards, it does not matter anymore if they are stored on the local

disk or somewhere remote.

## What this PR changes

We used `git.OpenRepository` everywhere previously.

Now, we should split them into two distinct functions:

Firstly, there are temporary repositories which do not change:

```go

git.OpenRepository(ctx, diskPath)

```

Gitea managed repositories having a record in the database in the

`repository` table are moved into the new package `gitrepo`:

```go

gitrepo.OpenRepository(ctx, repo_model.Repository)

```

Why is `repo_model.Repository` the second parameter instead of file

path?

Because then we can easily adapt our repository storage strategy.

The repositories can be stored locally, however, they could just as well

be stored on a remote server.

## Further changes in other PRs

- A Git Command wrapper on package `gitrepo` could be created. i.e.

`NewCommand(ctx, repo_model.Repository, commands...)`. `git.RunOpts{Dir:

repo.RepoPath()}`, the directory should be empty before invoking this

method and it can be filled in the function only. #28940

- Remove the `RepoPath()`/`WikiPath()` functions to reduce the

possibility of mistakes.

---------

Co-authored-by: delvh <dev.lh@web.de>

Fix #22066

# Purpose

This PR fixes releases being deleted when a mirror repository syncs its
tags.

# The problem

In the previous implementation from #19125, all release records of a
mirror repository were deleted from the database before each sync.

Ref:

https://github.com/go-gitea/gitea/pull/19125/files#diff-2aa04998a791c30e5a02b49a97c07fcd93d50e8b31640ce2ddb1afeebf605d02R481

# The Pros

This PR introduces a new method which loads all releases from the
database and all tags from the git data into memory, then detects which
tags need to be inserted, updated, or deleted. Only tag releases
(`IsTag=true`) that are not present in the git data are deleted, and only
tags whose sha1 changed are updated. So it will not delete any real
releases, including drafts.
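
The comparison described above can be sketched roughly as follows. This is a simplified illustration under assumptions, not the code from the PR: `shortRelease`, `syncPlan`, and `planTagSync` are hypothetical names, git tags are modeled as a plain name-to-sha1 map, and the sample data is made up.

```go
package main

import (
	"fmt"
	"sort"
)

// shortRelease holds only the columns needed for the comparison
// (hypothetical struct for this sketch).
type shortRelease struct {
	TagName string
	Sha1    string
	IsTag   bool
}

// syncPlan lists the tag names that need each kind of database change.
type syncPlan struct {
	Inserts []string
	Updates []string
	Deletes []string
}

// planTagSync compares releases loaded from the database with tags read from
// git (tag name -> sha1) and decides what to insert, update, or delete.
func planTagSync(dbReleases []shortRelease, gitTags map[string]string) syncPlan {
	var plan syncPlan
	inDB := make(map[string]bool, len(dbReleases))
	for _, rel := range dbReleases {
		inDB[rel.TagName] = true
		sha, inGit := gitTags[rel.TagName]
		switch {
		case !inGit && rel.IsTag:
			// Only plain tag releases are removed; real releases and drafts stay.
			plan.Deletes = append(plan.Deletes, rel.TagName)
		case inGit && sha != rel.Sha1:
			plan.Updates = append(plan.Updates, rel.TagName)
		}
	}
	for name := range gitTags {
		if !inDB[name] {
			plan.Inserts = append(plan.Inserts, name)
		}
	}
	sort.Strings(plan.Inserts) // map iteration order is random
	return plan
}

func main() {
	db := []shortRelease{
		{TagName: "v1.0", Sha1: "aaa", IsTag: true},
		{TagName: "v1.1", Sha1: "bbb", IsTag: true},
		{TagName: "v0.9", Sha1: "ccc", IsTag: true},
		{TagName: "draft", IsTag: false},
	}
	git := map[string]string{"v1.0": "aaa", "v1.1": "changed", "v2.0": "ddd"}

	plan := planTagSync(db, git)
	fmt.Println("insert:", plan.Inserts)
	fmt.Println("update:", plan.Updates)
	fmt.Println("delete:", plan.Deletes)
}
```

In this sample the draft release is absent from git but is not scheduled for deletion, because its `IsTag` flag is false.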

# The Cons

The drawback is that memory usage will be higher than before if the
repository has many tags. This PR defines a special release struct
to reduce the columns loaded from the database into memory.

The function `GetByBean` has an obvious defect: fields holding empty
(zero) values are ignored when building the query. Users can then get a
wrong result, which could possibly be exploited as a security problem.

To avoid that possibility, this PR removes the function `GetByBean` and
all references to it.

Some new generic functions have been introduced to replace it.

The recommended usage is shown below.

```go

// query an object by its ID
obj, err := db.GetByID[Object](ctx, id)

// query with other conditions
obj, err := db.Get[Object](ctx, builder.Eq{"a": a, "b": b})

```
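
To make the defect concrete, here is a toy illustration that does not use the real ORM at all. It assumes an in-memory slice instead of a database; `User`, `getByBean`, and `getByID` are hypothetical names that only imitate the two lookup styles.

```go
package main

import "fmt"

// User is a hypothetical record type used only for this illustration.
type User struct {
	ID   int64
	Name string
}

// getByBean imitates the behaviour described above: every non-zero field of
// bean becomes a condition, while zero-valued fields are silently skipped.
func getByBean(rows []User, bean User) (User, bool) {
	for _, row := range rows {
		if bean.ID != 0 && row.ID != bean.ID {
			continue
		}
		if bean.Name != "" && row.Name != bean.Name {
			continue
		}
		return row, true
	}
	return User{}, false
}

// getByID always applies the ID condition, even when id is zero.
func getByID(rows []User, id int64) (User, bool) {
	for _, row := range rows {
		if row.ID == id {
			return row, true
		}
	}
	return User{}, false
}

func main() {
	rows := []User{{ID: 1, Name: "admin"}, {ID: 2, Name: "alice"}}
	var missingID int64 // e.g. an ID that was never filled in

	u, ok := getByBean(rows, User{ID: missingID})
	fmt.Println(u.Name, ok) // the empty bean matches the first row

	_, ok = getByID(rows, missingID)
	fmt.Println(ok) // the explicit condition finds nothing
}
```

With an unset ID, the bean-style lookup returns an arbitrary first row instead of "not found", which is the kind of wrong result the removal is meant to prevent.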

This PR adds a new field `RemoteAddress` to both mirror types which

contains the sanitized remote address for easier (database) access to

that information. Will be used in the audit PR if merged.

Related #14180

Related #25233

Related #22639

Close #19786

Related #12763

This PR changes all branch retrieval methods from reading git data to
reading the database, to reduce git read operations.

- [x] Sync git branch information into the database when git data is pushed
- [x] Create a new table `Branch`, merge some columns of `DeletedBranch` into the `Branch` table, and drop the table `DeletedBranch`.
- [x] Read the `Branch` table when visiting the `code` -> `branch` page
- [x] Read the `Branch` table when listing branch names in the `code` page dropdown
- [x] Read the `Branch` table when listing refs on the git compare page
- [x] Provide a button in the admin page to manually sync all branches.
- [x] Sync branches if the repository is not empty but the database branches are empty when visiting pages with a branch list
- [x] Use `commit_time desc` as the default FindBranch order by, to stay consistent with the previous behavior; deleted branches will always be at the end.

---------

Co-authored-by: Jason Song <i@wolfogre.com>

The INI package has many bugs and quirks, and in fact it is

unmaintained.

This PR is the first step for the INI package refactoring:

* Use Gitea's "config_provider" to provide INI access

* Deprecate the INI package by golangci.yml rule

This PR replaces all string `refName` values with the type `git.RefName`
to make the code more maintainable.

Fix #15367

Replaces #23070

It also fixes a bug where tags are not synced, because `git remote --prune
origin` does not remove local tags when they were removed on the remote.

We should in fact use `git fetch --prune --tags origin` rather than `git
remote update origin` to do the sync.

Some answer from ChatGPT as ref.

> If the git fetch --prune --tags command is not working as expected,

there could be a few reasons why. Here are a few things to check:

>

>Make sure that you have the latest version of Git installed on your

system. You can check the version by running git --version in your

terminal. If you have an outdated version, try updating Git and see if

that resolves the issue.

>

>Check that your Git repository is properly configured to track the

remote repository's tags. You can check this by running git config

--get-all remote.origin.fetch and verifying that it includes

+refs/tags/*:refs/tags/*. If it does not, you can add it by running git

config --add remote.origin.fetch "+refs/tags/*:refs/tags/*".

>

>Verify that the tags you are trying to prune actually exist on the

remote repository. You can do this by running git ls-remote --tags

origin to list all the tags on the remote repository.

>

>Check if any local tags have been created that match the names of tags

on the remote repository. If so, these local tags may be preventing the

git fetch --prune --tags command from working properly. You can delete

local tags using the git tag -d command.

---------

Co-authored-by: delvh <dev.lh@web.de>

To avoid loading the same data more than once in an HTTP request, we can
use a context cache. For example, some pages may load a user with the same
id from the database in different areas of the same page, but the code is
hidden in two different, deeply nested pieces of logic. How should we share
the user? With this PR, if both entry functions accept `context.Context` as
the first parameter, we just need to refactor `GetUserByID` to reuse the
user from the context cache. Then it will not be loaded twice in one HTTP
request.

But of course, sometimes we would like to reload an object from the
database; that's why `RemoveContextData` is also exposed.

The core context cache is here. It defines a new context:

```go

type cacheContext struct {
	ctx  context.Context
	data map[any]map[any]any
	lock sync.RWMutex
}

var cacheContextKey = struct{}{}

func WithCacheContext(ctx context.Context) context.Context {
	return context.WithValue(ctx, cacheContextKey, &cacheContext{
		ctx:  ctx,
		data: make(map[any]map[any]any),
	})
}

```

Then you can use the four functions below to read, write, and delete data
within the same context.

```go

func GetContextData(ctx context.Context, tp, key any) any

func SetContextData(ctx context.Context, tp, key, value any)

func RemoveContextData(ctx context.Context, tp, key any)

func GetWithContextCache[T any](ctx context.Context, cacheGroupKey string, cacheTargetID any, f func() (T, error)) (T, error)

```

Then let's take a look at how the `system` package's `GetSetting` uses it.

```go

func GetSetting(ctx context.Context, key string) (string, error) {
	return cache.GetWithContextCache(ctx, contextCacheKey, key, func() (string, error) {
		return cache.GetString(genSettingCacheKey(key), func() (string, error) {
			res, err := GetSettingNoCache(ctx, key)
			if err != nil {
				return "", err
			}
			return res.SettingValue, nil
		})
	})
}

```

First, it checks whether the context data includes the setting object for
the key. If not, it queries the global cache, which may be in memory or a
Redis cache. If that misses too, it gets the object from the database. In
the end, if the object came from the global cache or the database, it is
stored in the context cache.

An object stored in the context cache is only destroyed after the
context is gone.
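
The lookup order can be illustrated with a self-contained sketch. It is a simplified re-implementation written for this example, assuming only the `GetWithContextCache` signature quoted earlier; the function bodies, the named key type, and the `countLoads` helper are assumptions and not the PR's actual code.

```go
package main

import (
	"context"
	"fmt"
	"sync"
)

// cacheContext stores per-request data, grouped first by a group key and
// then by a target ID.
type cacheContext struct {
	data map[any]map[any]any
	lock sync.RWMutex
}

// A named key type is used here to avoid collisions with other context keys.
type cacheContextKeyType struct{}

var cacheContextKey = cacheContextKeyType{}

// WithCacheContext returns a child context that carries an empty cache.
func WithCacheContext(ctx context.Context) context.Context {
	return context.WithValue(ctx, cacheContextKey, &cacheContext{
		data: make(map[any]map[any]any),
	})
}

// GetWithContextCache returns the cached value for (group, id) if present.
// Otherwise it calls f and stores a successful result in the context cache.
func GetWithContextCache[T any](ctx context.Context, group string, id any, f func() (T, error)) (T, error) {
	c, ok := ctx.Value(cacheContextKey).(*cacheContext)
	if !ok {
		return f() // no cache attached to this context
	}
	c.lock.RLock()
	v, found := c.data[group][id]
	c.lock.RUnlock()
	if found {
		if t, ok := v.(T); ok {
			return t, nil
		}
	}
	t, err := f()
	if err != nil {
		return t, err
	}
	c.lock.Lock()
	if c.data[group] == nil {
		c.data[group] = make(map[any]any)
	}
	c.data[group][id] = t
	c.lock.Unlock()
	return t, nil
}

// countLoads performs the same lookup several times and reports how often
// the loader (standing in for a database query) actually ran.
func countLoads(cached bool, lookups int) int {
	ctx := context.Background()
	if cached {
		ctx = WithCacheContext(ctx)
	}
	loads := 0
	for i := 0; i < lookups; i++ {
		_, _ = GetWithContextCache(ctx, "user", int64(1), func() (string, error) {
			loads++
			return "alice", nil
		})
	}
	return loads
}

func main() {
	fmt.Println("loads with context cache:", countLoads(true, 3))
	fmt.Println("loads without context cache:", countLoads(false, 3))
}
```

Because the cache lives inside the context value, it goes away together with the request context, which matches the lifetime described above.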

Fixes #19555

Test-Instructions:

https://github.com/go-gitea/gitea/pull/21441#issuecomment-1419438000

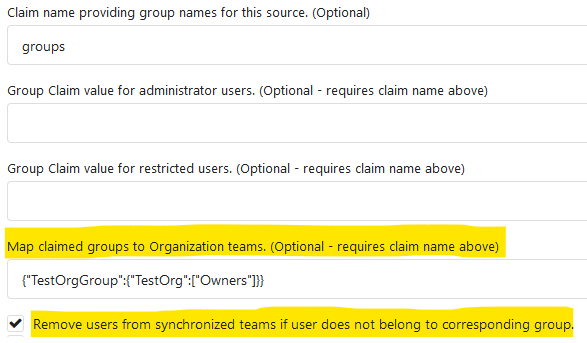

This PR implements the mapping of user groups provided by OIDC providers

to orgs teams in Gitea. The main part is a refactoring of the existing

LDAP code to make it usable from different providers.

Refactorings:

- Moved the router auth code from module to service because of import

cycles

- Changed some model methods to take a `Context` parameter

- Moved the mapping code from LDAP to a common location

I've tested it with Keycloak but other providers should work too. The

JSON mapping format is the same as for LDAP.

---------

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

After #22362, we can feel free to use transactions without

`db.DefaultContext`.

And there are still lots of models using `db.DefaultContext`, I think we

should refactor them carefully and one by one.

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Change all license headers to comply with REUSE specification.

Fix #16132

Co-authored-by: flynnnnnnnnnn <flynnnnnnnnnn@github>

Co-authored-by: John Olheiser <john.olheiser@gmail.com>

This PR adds a context parameter to a bunch of methods. Some helper

`xxxCtx()` methods got replaced with the normal name now.

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Fix #19513

This PR introduces a new db method `InTransaction(context.Context)`,
and also adds built-in checks to `db.TxContext` and `db.WithTx`.

A new method `db.AutoTx` has also been introduced; it is not used here but can be used by other PRs.

`WithTx` will always open a new transaction; if a transaction already exists in the context, it returns an error.

`AutoTx` will open a new transaction only if no transaction exists in the context.

That means it will always enter a transaction if there is no error.
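
The difference between the two helpers can be shown with a toy model that involves no database. Everything below is an assumption made for illustration: the transaction is represented by a marker value in the context, and the function bodies, the error value, and the `nested...` helpers are hypothetical rather than taken from the `db` package.

```go
package main

import (
	"context"
	"errors"
	"fmt"
)

// txKey marks a context as being inside a (simulated) transaction.
type txKey struct{}

// ErrAlreadyInTransaction is a hypothetical error for this sketch.
var ErrAlreadyInTransaction = errors.New("context is already in a transaction")

// InTransaction reports whether ctx carries the transaction marker.
func InTransaction(ctx context.Context) bool {
	_, ok := ctx.Value(txKey{}).(bool)
	return ok
}

// WithTx always opens a new transaction and refuses to nest.
func WithTx(ctx context.Context, f func(ctx context.Context) error) error {
	if InTransaction(ctx) {
		return ErrAlreadyInTransaction
	}
	// A real implementation would begin a database transaction here and
	// commit or roll back depending on the error returned by f.
	return f(context.WithValue(ctx, txKey{}, true))
}

// AutoTx reuses the surrounding transaction if there is one and opens a new
// one otherwise, so f always runs inside a transaction.
func AutoTx(ctx context.Context, f func(ctx context.Context) error) error {
	if InTransaction(ctx) {
		return f(ctx)
	}
	return WithTx(ctx, f)
}

// nestedWithTx calls WithTx inside WithTx and returns the resulting error.
func nestedWithTx() error {
	return WithTx(context.Background(), func(ctx context.Context) error {
		return WithTx(ctx, func(context.Context) error { return nil })
	})
}

// nestedAutoTx calls AutoTx inside WithTx and checks the inner callback
// still runs inside the outer transaction.
func nestedAutoTx() error {
	return WithTx(context.Background(), func(ctx context.Context) error {
		return AutoTx(ctx, func(ctx context.Context) error {
			if !InTransaction(ctx) {
				return errors.New("expected to be inside a transaction")
			}
			return nil
		})
	})
}

func main() {
	fmt.Println("nested WithTx:", nestedWithTx())
	fmt.Println("nested AutoTx:", nestedAutoTx())
}
```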

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: 6543 <6543@obermui.de>

There are far too many error reports regarding timeouts from migrations.

We should adjust the error report to suggest increasing this timeout.

Ref #20680

Signed-off-by: Andrew Thornton <art27@cantab.net>

* Move access and repo permission to models/perm/access

* fix test

* Move some git related files into sub package models/git

* Fix build

* fix git test

* move lfs to sub package

* move more git related functions to models/git

* Move functions sequence

* Some improvements per @KN4CK3R and @delvh

* Move some repository related code into sub package

* Move more repository functions out of models

* Fix lint

* Some performance optimization for webhooks and others

* some refactors

* Fix lint

* Fix

* Update modules/repository/delete.go

Co-authored-by: delvh <dev.lh@web.de>

* Fix test

* Merge

* Fix test

* Fix test

* Fix test

* Fix test

Co-authored-by: delvh <dev.lh@web.de>

Follows #19266, #8553, Close #18553, now there are only three `Run..(&RunOpts{})` functions.

* before: `stdout, err := RunInDir(path)`

* now: `stdout, _, err := RunStdString(&git.RunOpts{Dir:path})`

This addresses https://github.com/go-gitea/gitea/issues/18352

It aims to improve performance (and resource use) of the `SyncReleasesWithTags` operation for pull-mirrors.

For large repositories with many tags, `SyncReleasesWithTags` can be a costly operation (taking several minutes to complete). The reason is two-fold:

1. on sync, every upstream repo tag is compared (for changes) against existing local entries in the release table to ensure that they are up-to-date.

2. the procedure for getting _each tag_ involves a series of git operations

```bash

git show-ref --tags -- v8.2.4477

git cat-file -t 29ab6ce9f36660cffaad3c8789e71162e5db5d2f

git cat-file -p 29ab6ce9f36660cffaad3c8789e71162e5db5d2f

git rev-list --count 29ab6ce9f36660cffaad3c8789e71162e5db5d2f

```

of which the `git rev-list --count` can be particularly heavy.

This PR optimizes performance for pull-mirrors. We utilize the fact that a pull-mirror is always identical to its upstream and rebuild the entire release table on every sync and use a batch `git for-each-ref .. refs/tags` call to retrieve all tags in one go.

For large mirror repos, with hundreds of annotated tags, this brings down the duration of the sync operation from several minutes to a few seconds. A few unscientific examples run on my local machine:

- https://github.com/spring-projects/spring-boot (223 tags)
  - before: `0m28,673s`
  - after: `0m2,244s`
- https://github.com/kubernetes/kubernetes (890 tags)
  - before: `8m00s`
  - after: `0m8,520s`
- https://github.com/vim/vim (13954 tags)
  - before: `14m20,383s`
  - after: `0m35,467s`

I added a `foreachref` package which contains a flexible way of specifying which reference fields are of interest (`git-for-each-ref(1)`) and to produce a parser for the expected output. These could be reused in other places where `for-each-ref` is used. I'll add unit tests for those if the overall PR looks promising.
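
As a rough idea of what parsing batch `for-each-ref` output involves, here is a small sketch. It is not the `foreachref` package from this PR: it assumes the output was produced with `git for-each-ref --format='%(objectname) %(objecttype) %(refname:short)' refs/tags`, and the `tagRef` type, the `parseForEachRef` function, and the sample output are made up for the example.

```go
package main

import (
	"bufio"
	"fmt"
	"strings"
)

// tagRef holds the three fields requested in the assumed --format string.
type tagRef struct {
	ObjectName string // %(objectname)
	ObjectType string // %(objecttype): "tag" for annotated, "commit" for lightweight
	ShortName  string // %(refname:short)
}

// parseForEachRef parses one reference per line, with fields separated by
// single spaces, as produced by the assumed format string.
func parseForEachRef(output string) ([]tagRef, error) {
	var refs []tagRef
	scanner := bufio.NewScanner(strings.NewReader(output))
	for scanner.Scan() {
		line := scanner.Text()
		if line == "" {
			continue
		}
		parts := strings.SplitN(line, " ", 3)
		if len(parts) != 3 {
			return nil, fmt.Errorf("unexpected for-each-ref line: %q", line)
		}
		refs = append(refs, tagRef{
			ObjectName: parts[0],
			ObjectType: parts[1],
			ShortName:  parts[2],
		})
	}
	return refs, scanner.Err()
}

func main() {
	// Made-up sample output; a real caller would capture it from git.
	sample := "1111111111111111111111111111111111111111 tag v1.0.0\n" +
		"2222222222222222222222222222222222222222 commit v1.0.1\n"

	refs, err := parseForEachRef(sample)
	if err != nil {
		fmt.Println("parse error:", err)
		return
	}
	for _, ref := range refs {
		fmt.Printf("%s (%s) -> %s\n", ref.ShortName, ref.ObjectType, ref.ObjectName)
	}
}
```

One git invocation yields every tag, so the per-tag `show-ref`, `cat-file`, and `rev-list` calls listed earlier are not needed to enumerate them.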

Strangely #19038 appears to relate to an issue whereby a tag appears to

be listed in `git show-ref --tags` but then does not appear when `git

show-ref --tags -- short_name` is called.

As a solution though I propose to stop the second call as it is

unnecessary and only likely to cause problems.

I've also noticed that the tags calls are wildly inefficient and aren't using the common cat-files - so these have been added.

I've also noticed that the git commit-graph is not being written on mirroring - so I've also added writing this to the migration which should improve mirror rendering somewhat.

Fix #19038

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: 6543 <6543@obermui.de>

* Remove `db.DefaultContext` usage in routers, use `ctx` directly

* Use `ctx` directly if there is one, remove some `db.DefaultContext` in `services`

* Use ctx instead of db.DefaultContext for `cmd` and some `modules` packages

* fix incorrect context usage

Make SKIP_TLS_VERIFY apply to git data migrations too through adding the `-c http.sslVerify=false` option to the git clone command.

Fix #18998

Signed-off-by: Andrew Thornton <art27@cantab.net>

Yet another issue has come up where the logging from SyncMirrors does not provide

enough context. This PR adds more context to these logging events.

Related #19038

Signed-off-by: Andrew Thornton <art27@cantab.net>

This PR continues the work in #17125 by progressively ensuring that git

commands run within the request context.

This now means that if there is a git repo already open in the context, it will be used instead of reopening it.

Signed-off-by: Andrew Thornton <art27@cantab.net>

* Some refactors related repository model

* Move more methods out of repository

* Move repository into models/repo

* Fix test

* Fix test

* some improvements

* Remove unnecessary function

Use hostmatcher to replace matchlist.

And we introduce a better DialContext to do a full host/IP check, otherwise the attackers can still bypass the allow/block list by a 302 redirection.
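
The reason a dial-time check helps is that it sees the address actually being connected to, including after a redirect. The sketch below shows one way to do this with the standard library's `net.Dialer.Control` hook. It is not the code from this PR: `checkAddress` and `newCheckedDialer` are hypothetical names, and the blocking policy (loopback, private, and link-local addresses) is an assumption chosen for the example.

```go
package main

import (
	"fmt"
	"net"
	"syscall"
	"time"
)

// checkAddress rejects connections to loopback, private, and link-local
// addresses. The address is expected in "ip:port" form, which is what the
// dialer passes to its Control hook after name resolution.
func checkAddress(address string) error {
	host, _, err := net.SplitHostPort(address)
	if err != nil {
		return err
	}
	ip := net.ParseIP(host)
	if ip == nil {
		return fmt.Errorf("not an IP address: %q", host)
	}
	if ip.IsLoopback() || ip.IsPrivate() || ip.IsLinkLocalUnicast() {
		return fmt.Errorf("connection to %s is not allowed", ip)
	}
	return nil
}

// newCheckedDialer returns a dialer that runs checkAddress for every
// connection attempt, so each hop of a redirect chain is checked again.
func newCheckedDialer() *net.Dialer {
	return &net.Dialer{
		Timeout: 10 * time.Second,
		Control: func(network, address string, _ syscall.RawConn) error {
			return checkAddress(address)
		},
	}
}

func main() {
	_ = newCheckedDialer() // would be plugged into http.Transport.DialContext

	for _, addr := range []string{"127.0.0.1:80", "10.0.0.5:443", "93.184.216.34:443"} {
		fmt.Printf("%s -> %v\n", addr, checkAddress(addr))
	}
}
```

A check on the request URL alone only covers the first hop, whereas the dialer is invoked for every connection the HTTP client opens.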

* Use a standalone struct name for Organization

* recover unnecessary change

* make the code readable

* Fix template failure

* Fix template failure

* Move HasMemberWithUserID to org

* Fix test

* Remove unnecessary user type check

* Fix test

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

* Ensure that git daemon export ok is created for mirrors

There is an issue with #16508 where it appears that create repo requires that the

repo does not exist. This causes #17241 where an error is reported because of this.

This PR fixes this and also runs update-server-info for mirrors and generated repos.

Fix #17241

Signed-off-by: Andrew Thornton <art27@cantab.net>

* DBContext is just a Context

This PR removes some of the specialness from the DBContext and makes it a context.
This allows us to simplify the GetEngine code to wrap around any context in future,
and means that we can change our loadRepo(e Engine) functions to simply take contexts.

Signed-off-by: Andrew Thornton <art27@cantab.net>

* fix unit tests

Signed-off-by: Andrew Thornton <art27@cantab.net>

* another place that needs to set the initial context

Signed-off-by: Andrew Thornton <art27@cantab.net>

* avoid race

Signed-off-by: Andrew Thornton <art27@cantab.net>

* change attachment error

Signed-off-by: Andrew Thornton <art27@cantab.net>